TL;DR

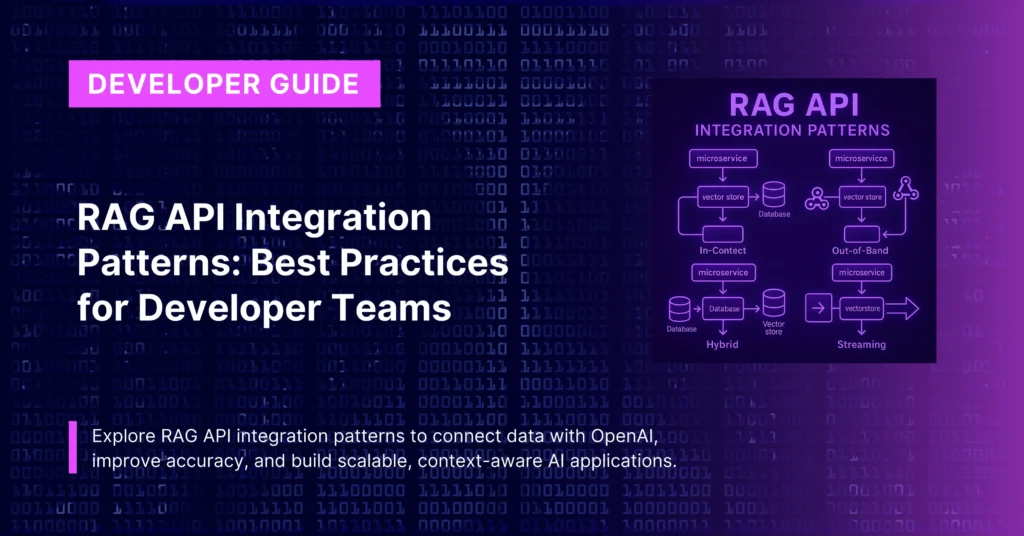

Developer teams building RAG applications face specific integration challenges that traditional AI implementations don’t address.

Based on industry data showing 73.34% of RAG implementations happening in large organizations and analysis of real production systems, this guide covers proven patterns that enterprise teams are actually using.

We’ll examine multi-tenant architectures, API proxy patterns, chunking strategies that work at scale, and the RAG API integration patterns that Microsoft, AWS, and other major platforms have validated through production deployments.

These aren’t theoretical patterns—they’re battle-tested approaches extracted from live systems serving millions of users.

The RAG integration landscape has evolved rapidly. Just two years ago, most teams were building custom solutions from scratch. Today, successful developer teams follow specific patterns that have emerged from production deployments at scale.

According to recent industry analysis, 60% of Databricks’ LLMs now use RAG, and 73.34% of RAG implementations are happening in large organizations. This concentration in enterprise environments has produced a set of integration patterns that teams can leverage rather than reinventing solutions.

The Multi-Deployment Architecture Pattern

The most successful RAG implementations support multiple deployment modes from a single codebase. This pattern addresses the reality that different teams within an organization need different integration approaches.

Real Implementation: CustomGPT’s Architecture

The CustomGPT Starter Kit demonstrates this pattern in production. Their architecture supports three deployment modes from one codebase:

- Standalone: Full Next.js app with dashboard

- Widget: Embeddable chat widget (webpack bundle)

- Iframe: Isolated iframe deployment

The key insight here is that each mode uses isolated state management and different entry points while sharing core functionality:

src/

├── app/ # Standalone mode entry

├── widget/ # Widget-specific entry points

│ ├── index.tsx # Widget mode

│ └── iframe-app.tsx # Iframe mode

├── store/ # Global stores for standalone

└── widget-stores/ # Isolated stores for widget modeThis prevents the common problem where teams build separate applications for different deployment needs, leading to maintenance overhead and feature drift.

API Proxy Pattern: The Security Standard

Enterprise data contains sensitive information that may be subject to industry regulations and company policies. Every successful enterprise RAG implementation we analyzed uses an API proxy pattern rather than exposing API keys client-side.

Microsoft’s Teams AI Implementation

Microsoft Teams enables you to build a conversational bot with RAG to create an enhanced experience to maximize productivity. Their implementation demonstrates the proxy pattern:

// Teams AI Library pattern

const app = new Application({

ai: {

planner: new ActionPlanner({

model: new OpenAIModel({

apiKey: process.env.OPENAI_API_KEY, // Server-side only

}),

}),

},

});CustomGPT’s Production Proxy Pattern

The starter kit implementation shows how this works in practice:

// src/lib/api/proxy-handler.ts

export async function handleProxyRequest(request: Request, path: string) {

const config = getConfig();

// Server-side authentication - never exposed to client

const headers = new Headers();

headers.set('Authorization', `Bearer ${config.customgpt.apiKey}`);

headers.set('Content-Type', 'application/json');

const response = await fetch(targetUrl.toString(), {

method: request.method,

headers,

body: request.method !== 'GET' ? await request.text() : undefined,

});

return response;

}This pattern provides:

- Security: API keys never reach client-side code

- CORS handling: Proxy manages cross-origin requests

- Request transformation: Centralized place to modify requests/responses

- Rate limiting: Server-side control over API usage

Chunking Strategies That Actually Work

Chunking documents into smaller parts improves retrieval efficiency but can create challenges if relevant information is split across chunks. Teams that succeed with production RAG systems use contextual chunking rather than fixed-size approaches.

Semantic Chunking Over Fixed-Size

Semantic chunking takes meaning and context into account when dividing the text. Rather than fixed-sized chunking, semantic chunking takes meaning and context into account when dividing the text. The process:

- Split text into sentences

- Generate embeddings for each sentence

- Compare semantic similarity between adjacent sentences

- Split chunks based on breakpoint threshold values

Contextual Chunk Headers

This can be as simple as prepending chunks with the document and section titles, a method sometimes known as contextual chunk headers:

Document title: Acme Inc Annual Fiscal Report

Section title: Results of Operation

"Dollars in millions, except per share data FISCAL 2024 FISCAL 2023 % CHANGE

Revenues $ 38,343 $ 37,584 0 %"Microsoft’s Chunking Approach

Skills are also used for integrated data chunking (Text Split skill) and integrated embedding. Their Azure AI Search service handles chunking automatically, but they provide configuration options:

- Preserve document structure (sections, paragraphs)

- Overlap chunks by 10-20% to maintain context

- Adjust chunk size based on content type (technical docs vs marketing content)

Hybrid Search: Combining Multiple Retrieval Methods

A hybrid search approach leverages both keyword-based search and vector search techniques, then combines the search results from both methods to provide a final search result. This addresses the weakness where vector embeddings capture semantic meaning, like lexical relationships (e.g., actor/actress are closely related), intent (e.g., positive/negative), and contextual significance but can miss exact lexical matches.

AWS’s Hybrid Approach

Amazon Kendra is a highly-accurate enterprise search service powered by machine learning. It provides an optimized Kendra Retrieve API that you can use with Amazon Kendra’s high-accuracy semantic ranker as an enterprise retriever for your RAG workflows. Their implementation:

- Semantic search for concept matching

- Keyword search for exact term matching

- Machine learning-based ranking to combine results

- Filter responses based on user permissions

Implementation Pattern

# Hybrid search implementation

def hybrid_search(query: str, top_k: int = 10):

# Get semantic results

semantic_results = vector_search(query, top_k * 2)

# Get keyword results

keyword_results = keyword_search(query, top_k * 2)

# Combine and rerank

combined_results = combine_results(semantic_results, keyword_results)

return rerank(combined_results, query)[:top_k]Multi-Tenant Architecture Patterns

Enterprise RAG deployments typically serve multiple teams or customers from the same infrastructure. Logically separate your data into isolated containers for secure multi-tenancy and focused retrieval, enhancing application relevance and data privacy.

Tenant Isolation Strategies

- Database-level isolation: Separate vector databases per tenant

- Index-level isolation: Separate search indexes with tenant filtering

- Query-time filtering: Single index with tenant metadata filtering

Microsoft’s Multi-Tenant Approach

Register a named data source with the planner and specify it in the prompt’s config.json file to augment the prompt. Their Teams AI library supports:

// Multi-tenant data source registration

planner.prompts.addDataSource(new VectraDataSource({

name: `tenant-${tenantId}-data`,

apiKey: process.env.OPENAI_API_KEY,

indexFolder: path.join(__dirname, `../indexes/${tenantId}`),

}));Query Routing Pattern

Query routing proves advantageous when dealing with multiple indexes, directing queries to the most relevant index for efficient retrieval. Production systems implement:

// Route queries based on tenant and content type

async function routeQuery(query, tenantId, contentType) {

const indexMap = {

'technical': `${tenantId}-tech-docs`,

'marketing': `${tenantId}-marketing`,

'support': `${tenantId}-support-kb`

};

const targetIndex = indexMap[contentType] || `${tenantId}-general`;

return searchIndex(targetIndex, query);

}Real-Time Data Integration Patterns

Dynamic data loading ensures that RAG systems operate with the latest information, preventing outdated data from affecting response accuracy. Enterprise teams handle this through several patterns:

Event-Driven Updates

// Webhook-based content updates

app.post('/webhook/content-update', async (req, res) => {

const { documentId, action } = req.body;

switch(action) {

case 'created':

case 'updated':

await reindexDocument(documentId);

await invalidateCache(documentId);

break;

case 'deleted':

await removeFromIndex(documentId);

break;

}

res.status(200).json({ processed: true });

});Scheduled Synchronization

You can ingest your knowledge documents to Azure AI Search Service and create a vector index with Azure OpenAI on your data. Production systems often combine:

- Real-time updates for critical content

- Scheduled batch processing for bulk updates

- Incremental synchronization to minimize processing overhead

Error Handling and Resilience Patterns

Develop comprehensive error handling mechanisms with automated retries to ensure data integrity and processing continuity. Production RAG systems implement multiple layers of resilience.

Circuit Breaker Pattern for RAG

class RAGCircuitBreaker {

constructor(threshold = 5, timeout = 60000) {

this.failureCount = 0;

this.threshold = threshold;

this.timeout = timeout;

this.state = 'CLOSED'; // CLOSED, OPEN, HALF_OPEN

}

async execute(operation) {

if (this.state === 'OPEN') {

if (Date.now() - this.lastFailTime > this.timeout) {

this.state = 'HALF_OPEN';

} else {

throw new Error('Circuit breaker is OPEN');

}

}

try {

const result = await operation();

this.onSuccess();

return result;

} catch (error) {

this.onFailure();

throw error;

}

}

}Graceful Degradation

async function searchWithFallback(query, agentId) {

try {

// Primary: Vector search

return await vectorSearch(query, agentId);

} catch (error) {

console.warn('Vector search failed, trying keyword search');

try {

// Fallback: Keyword search

return await keywordSearch(query, agentId);

} catch (fallbackError) {

// Final fallback: Generic response

return {

response: "I'm having trouble accessing the knowledge base right now. Please try again later.",

sources: []

};

}

}

}Monitoring and Observability Patterns

Real-time monitoring is essential to observe the performance, behavior, and overall health of your applications in a production environment. Successful teams implement comprehensive observability from day one.

Key Metrics to Track

Monitor usage patterns and performance metrics with dashboards. Track the retrieved sources and keep an audit log of which sources are used:

- Retrieval Quality: Precision, recall, and relevance scores

- Response Times: End-to-end latency including retrieval and generation

- User Satisfaction: Thumbs up/down, follow-up questions, session abandonment

- Cost Metrics: Token usage, API calls, infrastructure costs

- Error Rates: Failed retrievals, generation errors, timeout rates

Implementation Example

// Comprehensive RAG metrics tracking

class RAGMetricsTracker {

async trackQuery(query, response, metadata) {

const metrics = {

timestamp: Date.now(),

queryLength: query.length,

responseTime: metadata.responseTime,

retrievalCount: metadata.sources?.length || 0,

tokensUsed: metadata.tokensUsed,

userFeedback: null, // Updated later

sessionId: metadata.sessionId,

agentId: metadata.agentId

};

await this.logMetrics(metrics);

await this.updateDashboard(metrics);

}

async trackUserFeedback(sessionId, queryId, feedback) {

await this.updateMetrics(queryId, { userFeedback: feedback });

// Trigger retraining if negative feedback threshold reached

if (await this.shouldRetrain(sessionId)) {

await this.triggerRetrainingPipeline();

}

}

}Team Collaboration Patterns

Developer teams working on RAG systems face unique collaboration challenges. Based on analysis of successful implementations:

API-First Development

Teams that succeed establish clear API contracts early:

// Shared types across frontend/backend teams

interface RAGQueryRequest {

query: string;

agentId: string;

conversationId?: string;

maxResults?: number;

temperature?: number;

}

interface RAGQueryResponse {

response: string;

sources: Citation[];

confidence: number;

processingTime: number;

}Environment Parity with RAG-Specific Considerations

While striving for environment parity, there are RAG-specific differences to consider: Knowledge Base: Production uses the full, live knowledge base, while staging may use a representative subset.

Production teams manage this through:

- Staging environments: Subset of production data for testing

- Development environments: Synthetic or anonymized data

- Testing environments: Controlled datasets for automated testing

Documentation Standards

Successful teams document:

- Data source schemas and update frequencies

- Chunking strategies and rationale

- Retrieval performance benchmarks

- Integration endpoints and rate limits

Performance Optimization Patterns

The Vector Storage Layer utilizes Amazon OpenSearch Serverless as the underlying vector database, providing automatic scaling and high availability without the operational overhead. Teams optimize performance through several proven patterns:

Caching Strategies

// Multi-level caching for RAG systems

class RAGCache {

constructor() {

this.embedCache = new Map(); // Query embeddings

this.resultCache = new Map(); // Search results

this.responseCache = new Map(); // Generated responses

}

async getCachedResponse(query, agentId) {

// Check response cache first

const responseKey = `${agentId}:${this.hashQuery(query)}`;

if (this.responseCache.has(responseKey)) {

return this.responseCache.get(responseKey);

}

// Check result cache

const resultKey = `search:${responseKey}`;

if (this.resultCache.has(resultKey)) {

const cachedResults = this.resultCache.get(resultKey);

return await this.generateWithCachedResults(query, cachedResults);

}

return null; // Cache miss

}

}Connection Pooling and Load Balancing

Deploy vector databases across multiple geographical regions to reduce latency and improve availability:

// Geographic load balancing for RAG queries

class RAGLoadBalancer {

constructor() {

this.regions = {

'us-east': { endpoint: 'us-east.rag.api', latency: 0 },

'eu-west': { endpoint: 'eu-west.rag.api', latency: 0 },

'asia-pacific': { endpoint: 'asia.rag.api', latency: 0 }

};

}

async routeQuery(query, userLocation) {

const optimalRegion = this.selectRegion(userLocation);

return await this.queryRegion(optimalRegion, query);

}

}Integration Testing Strategies

RAG systems require specialized testing approaches that traditional API testing doesn’t cover:

Retrieval Quality Testing

// Automated retrieval quality tests

describe('RAG Retrieval Quality', () => {

test('should retrieve relevant documents for product questions', async () => {

const query = 'How do I reset my password?';

const results = await ragSystem.retrieve(query);

expect(results).toHaveLength(5);

expect(results[0].relevanceScore).toBeGreaterThan(0.8);

expect(results[0].source).toContain('authentication');

});

test('should handle multi-language queries', async () => {

const spanishQuery = '¿Cómo restablezco mi contraseña?';

const results = await ragSystem.retrieve(spanishQuery);

expect(results).toHaveLength(5);

expect(results[0].relevanceScore).toBeGreaterThan(0.7);

});

});End-to-End Conversation Testing

// Conversation flow testing

test('should maintain context across conversation turns', async () => {

const conversation = await ragSystem.startConversation();

const response1 = await conversation.ask('What is your return policy?');

expect(response1.sources).toContain('return-policy.md');

const response2 = await conversation.ask('What about international returns?');

expect(response2.response).toContain('international');

expect(response2.sources).toContain('return-policy.md');

});Frequently Asked Questions

How do we handle conflicting information across different data sources in our RAG system?

Track the retrieved sources and keep an audit log of which sources are used. Evaluate user satisfaction and, for advanced teams, implement specific accuracy measures for generative AI. Teams handle conflicts through source prioritization hierarchies, timestamp-based freshness scoring, and explicit conflict detection in responses. Some implementations show multiple perspectives with source attribution rather than attempting to resolve conflicts automatically.

What’s the most effective way to handle multi-tenant RAG deployments without compromising performance?

The most successful pattern is index-level isolation with shared infrastructure. Logically separate your data into isolated containers for secure multi-tenancy and focused retrieval, enhancing application relevance and data privacy. This provides tenant isolation while allowing resource sharing. Teams typically use tenant prefixes in vector databases and implement query-time filtering rather than completely separate instances.

How should development teams structure their RAG testing pipeline differently from traditional API testing?

RAG systems require semantic testing in addition to functional testing. Teams that succeed implement three testing layers: retrieval quality tests (measuring relevance and recall), generation quality tests (measuring accuracy and coherence), and end-to-end conversation tests (measuring context retention). Unlike traditional APIs that have deterministic outputs, RAG systems require statistical evaluation methods and human evaluation loops.

What are the most common performance bottlenecks in production RAG systems and how do teams address them?

Optimizing embedding model deployment is crucial for maintaining low latency in the retrieval process. The main bottlenecks are: embedding generation latency (solved through GPU deployment and model optimization), vector search time (addressed through HNSW indexing and result caching), and LLM generation latency (managed through streaming responses and connection pooling). Teams typically see the biggest performance gains from implementing multi-level caching strategies.

How do enterprise teams manage the continuous learning and improvement of their RAG systems?

RAG system integration with feedback loops adapts over time, learning from user interactions to enhance response quality and accuracy. Successful teams implement automated feedback collection through thumbs up/down ratings, implicit feedback through user behavior tracking, and periodic human evaluation. The key is creating systematic processes for incorporating feedback into retrieval optimization and knowledge base improvements rather than ad-hoc updates.

What’s the recommended approach for handling real-time data updates in production RAG systems?

Dynamic data loading ensures that RAG systems operate with the latest information, preventing outdated data from affecting response accuracy. Teams use event-driven architectures with webhooks for critical updates, scheduled batch processing for bulk changes, and incremental indexing to minimize performance impact. The pattern that works best combines immediate updates for high-priority content with batched processing for bulk updates.

How should teams approach the trade-off between retrieval accuracy and system performance?

The optimal approach depends on use case requirements, but successful teams typically implement tiered retrieval strategies. A hybrid search approach leverages both keyword-based search and vector search techniques, then combines the search results from both methods to provide a final search result. Teams use fast approximate search for initial filtering followed by more expensive reranking for final results, allowing them to maintain performance while preserving accuracy where it matters most.

What integration patterns work best for teams already using traditional LLM APIs who want to add RAG capabilities?

The proxy pattern provides the smoothest migration path. Teams can maintain existing client code while adding retrieval augmentation server-side. RAG extends the already powerful capabilities of LLMs to specific domains or an organization’s internal knowledge base, all without the need to retrain the model. The CustomGPT Starter Kit demonstrates this pattern, where existing OpenAI API calls can be enhanced with retrieval capabilities without changing client implementations.

How do teams effectively measure ROI and success metrics for their RAG implementations?

Monitor usage patterns and performance metrics with dashboards. Track the retrieved sources and keep an audit log of which sources are used. Evaluate user satisfaction and, for advanced teams, implement specific accuracy measures for generative AI. Teams measure success through reduced support ticket volume, improved first-contact resolution rates, decreased time-to-information for employees, and user satisfaction scores. The most effective approach combines quantitative metrics (response times, accuracy rates) with qualitative feedback (user interviews, support team feedback) to create a comprehensive view of system value.

For more RAG API related information:

- CustomGPT.ai’s open-source UI starter kit (custom chat screens, embeddable chat window and floating chatbot on website) with 9 social AI integration bots and its related setup tutorials.

- Find our API sample usage code snippets here.

- Our RAG API’s Postman hosted collection – test the APIs on postman with just 1 click.

- Our Developer API documentation.

- API explainer videos on YouTube and a dev focused playlist.

- Join our bi-weekly developer office hours and our past recordings of the Dev Office Hours.

P.s – Our API endpoints are OpenAI compatible, just replace the API key and endpoint and any OpenAI compatible project works with your RAG data. Find more here.

Wanna try to do something with our Hosted MCPs? Check out the docs for the same.

Priyansh is Developer Relations Advocate who loves technology, writer about them, creates deeply researched content about them.