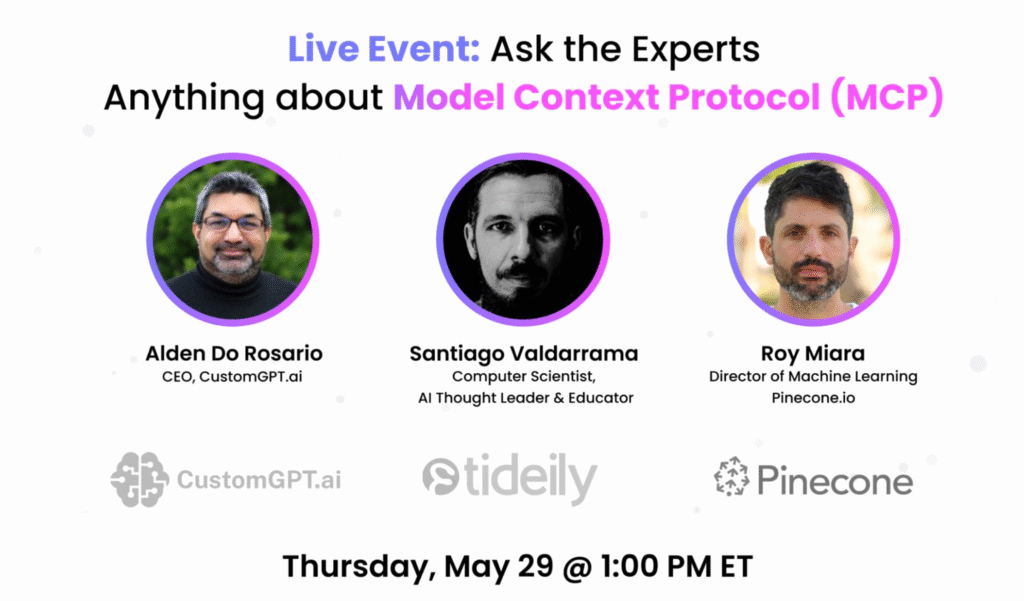

Last month (on 29th May) we hosted a live AMA on the Model Context Protocol (MCP), fielding over 40 insightful questions from engineers, architects, and AI enthusiasts around the world.

We couldn’t answer every query in real time, so below is a detailed write-up—organized by theme—with answers from our panelists Santiago, Alden, and Roy.

Get the MCP AMA Video Recording here.

Whether you’re evaluating MCP for your next project or just curious about how it all works under the hood, read on for best practices, gotchas, and real-world patterns.

Get in touch with the speakers here.

1. Fundamentals & When to Use MCP

Aaron Herholdt asked:

What are the key differences between MCP and an API?

Great question, Aaron! An API is just a contract you agree to—endpoints, parameters and responses. MCP is a protocol built on top of APIs, standardizing how models discover available functions, invoke them, stream data back, and manage errors and version compatibility.

Think of MCP as the “rules of the road” for any model-to-tool integration, so you don’t have to reinvent the wheel for each new service.
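To make “discover” and “invoke” a little more concrete, here is a minimal sketch of the two JSON-RPC 2.0 requests a client would send. The method names `tools/list` and `tools/call` follow the MCP specification; the tool name `create_issue` and its arguments are made up for illustration.

```python
import json
from itertools import count

_ids = count(1)  # every JSON-RPC request carries its own id

def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request envelope as a plain dict."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg

# Discovery: ask the server which tools it offers.
list_req = jsonrpc_request("tools/list")

# Invocation: call one tool by name with structured arguments.
# "create_issue" and its arguments are hypothetical.
call_req = jsonrpc_request(
    "tools/call",
    {"name": "create_issue", "arguments": {"title": "Login page returns 500"}},
)

print(json.dumps(list_req))
print(json.dumps(call_req))
```

The same two message shapes apply to every server, which is the practical meaning of “rules of the road” here.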

P.S. asked:

Who needs MCP? Any use cases, Santiago? When should it be avoided?

MCP is good when you have multiple tools, internal databases, third-party services, even different LLM vendors, and you want one uniform way to call them.

It’s perfect for complex automation, ChatOps, RAG pipelines, multi-model workflows, etc. You can skip MCP if your setup is tiny (one model, one service) and you don’t foresee growing; then a simple function-call wrapper might be enough.

Ashish Bhatia observed:

MCP is not AGI, MCP is just more convenient Function Calling with a standard protocol.

Roy answered: Exactly, Ashish. MCP isn’t magic intelligence – it’s a convention that makes function calling consistent across clients and services. AGI worries aside, it’s about developer ergonomics and reliability.

MCP is indeed not AGI, nor is it trying to be, but it also goes beyond “standard” function calling. On top of the convention, MCP adds discovery and server-side resources. It also enables communication in both directions, server → client as well as client → server, and that makes a bigger difference than a convention alone would.

Jason Purdy asked:

Why isn’t this just a fancy API with better documentation?

Roy answered: Because documentation alone doesn’t catch breaking changes or enforce schema validity. With MCP, the client dynamically discovers and downloads the schema at runtime and can negotiate versions, so you avoid “it worked yesterday but broke today” surprises. For the rest, see the previous answer, which covers similar ground.

Anonymous asked:

Can custom MCP clients be built, other than the usual providers or tools mentioned in the earlier slides? How is an MCP server endpoint different from a REST API endpoint, and can we substitute a REST endpoint with an MCP endpoint directly where the use case is text-based output?

Yes, anyone can write an MCP client or server (think FastMCP). The MCP endpoint speaks JSON-RPC style messages with standardized envelopes, not just plain REST. You can swap in an MCP endpoint wherever you need function calling: your existing REST API can simply add an MCP layer to expose its actions in a consistent protocol.

Think of MCP as a REST API, but for LLMs.

Che Guan asked:

How does MCP compare to A2A and AG-UI?

MCP is about model-to-tool calls; A2A (agent-to-agent) is about agents communicating with each other directly, and AG-UI is about human-agent interfaces. They overlap: an agent might use MCP under the hood to talk to a tool, and the result then surfaces in AG-UI. Each addresses a different slice of the workflow.

Muktesh Mishra noted:

A2A and MCP are complementary. While MCP deals with determinism like tool calls etc., A2A is useful when the final goal of an agent keeps changing.

Spot on. Use MCP when you need strong schemas and deterministic tool invocations; use A2A for more dynamic, peer-to-peer agent coordination.

If you have read this far, you might be interested in knowing about what CustomGPT.ai is doing on the MCP side of things.

- View our MCP Docs.

- No-Code MCP Server w/ your data

Hain-Lee Hsueh asked:

Are there compelling use cases/examples of using MCP for internal tools or internal AI applications?

Absolutely. We’ve seen teams wrap everything from Kubernetes commands to Slack notifications as MCP functions—so you can say “roll back deployment” or “summarize last week’s alerts” in natural language, and the LLM calls the right tool. It’s a fast path to ChatOps without custom glue each time.

2. Architecture of MCP & Tool Management

Roshan Sridhar asked:

Let’s say I connect to GitHub or Stripe, and it has multiple tools for multiple sub-tasks. Do I have to connect one tool at a time or do I get access to all their tools?

You register each sub-tool’s schema in your MCP registry up front (github:createIssue, stripe:createCharge, etc.). The client pulls the entire catalog at startup and then calls whichever one it needs—no per-call setup required.

Adam Claassens asked:

What did you use to build the MCP Server in the Demo?

I used Node.js with TypeScript, layered on a JSON-RPC 2.0 library and Express for HTTP transport. It’s about 300 lines of glue once you have a schema registry and a message router.
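The demo itself was written in Node.js and TypeScript and is not reproduced here. As a rough Python sketch of the same two ideas, a registry and a message router, the following handles `tools/list` and `tools/call` requests in memory. The `echo` tool and the `tool` decorator are hypothetical. The HTTP transport and the per-tool `inputSchema` are left out to keep it short.

```python
import json

TOOLS = {}  # tool name -> (description, handler function)

def tool(name, description):
    """Decorator that adds a function to the registry."""
    def register(fn):
        TOOLS[name] = (description, fn)
        return fn
    return register

@tool("echo", "Return the text that was passed in.")  # hypothetical demo tool
def echo(text):
    return text

def _reply(req_id, key, value):
    return json.dumps({"jsonrpc": "2.0", "id": req_id, key: value})

def handle(raw):
    """Route one JSON-RPC request (a JSON string) and return the response string."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params") or {}
    if method == "tools/list":
        tools = [{"name": n, "description": d} for n, (d, _) in TOOLS.items()]
        return _reply(req_id, "result", {"tools": tools})
    if method == "tools/call":
        if params.get("name") not in TOOLS:
            # -32602 is the JSON-RPC 2.0 "invalid params" code.
            return _reply(req_id, "error", {"code": -32602, "message": "Unknown tool"})
        _, fn = TOOLS[params["name"]]
        text = fn(**params.get("arguments", {}))
        return _reply(req_id, "result", {"content": [{"type": "text", "text": str(text)}]})
    # -32601 is the JSON-RPC 2.0 "method not found" code.
    return _reply(req_id, "error", {"code": -32601, "message": "Method not found"})
```

Wiring `handle` to an HTTP endpoint or to stdin/stdout is then a transport concern, separate from the routing logic.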

Fauze Polpeta asked:

Does that mean that service providers (GitHub, Stripe, etc.) will be the ones responsible to provision their MCP server/API?

Ideally, yes—each provider publishes its own MCP registry. You can also run a proxy that aggregates multiple registries if you need to centralize access within your organization.

Francisco Mardones asked:

If you have multiple MCP servers that provide similar tools (in this case, routing), how do you make sure that Claude uses the right server/tool?

Your registry can tag each tool with metadata (region, SLA, owner) and you can instruct the client to prefer certain tags. You could also implement a simple load-balancer or routing logic in the MCP layer that picks the best server for each call.
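One way to picture tag-based preference is a small selection function that scores each candidate by how many preferred tags it matches. This is only a sketch: the `ToolEntry` type, the server names and the tag keys are all assumptions, not part of MCP itself.

```python
from dataclasses import dataclass, field

@dataclass
class ToolEntry:
    server: str                      # which MCP server offers the tool
    name: str                        # tool name as advertised
    tags: dict = field(default_factory=dict)

def pick_tool(entries, name, preferred):
    """Return the entry for `name` whose tags match the most preferences."""
    candidates = [e for e in entries if e.name == name]
    if not candidates:
        raise LookupError(f"no server offers tool {name!r}")

    def score(entry):
        return sum(1 for k, v in preferred.items() if entry.tags.get(k) == v)

    # max() keeps the first candidate on ties, so list order is the tie-breaker.
    return max(candidates, key=score)

catalog = [
    ToolEntry("maps-us", "route", {"region": "us", "sla": "gold"}),
    ToolEntry("maps-eu", "route", {"region": "eu", "sla": "gold"}),
]
chosen = pick_tool(catalog, "route", {"region": "eu"})
print(chosen.server)  # maps-eu
```

With this in place, the model only ever sees one `route` tool, and the choice of server stays in code you control.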

Pratik Kundnani asked:

Why do we need resources in MCP if we can configure a tool to return what resources return?

Resources in MCP are just another kind of tool schema; they let you expose data endpoints (like file listings or DB queries) as first-class members of the protocol. It keeps discovery and invocation consistent, rather than mixing ad-hoc REST calls with function calls.

Roshan Sridhar (again) asked:

Why do we need separate development MCP and assistant MCP? Can one do everything?

You can run one MCP everywhere, but it’s common to have a “dev” environment pointing at sandbox credentials and a “prod” environment with real data. The protocol is identical; you just switch endpoints and keys.

Jonathan McClure asked:

How do you best think about a multi-agent system that uses a controller agent and specialized sub-agents – I’m trying to figure out when to use MCP vs A2A protocols.

Use MCP for any call where you need a strict schema and deterministic inputs/outputs (e.g. “translate this text” or “open a ticket”). Use A2A when agents need to negotiate or exchange state dynamically—MCP can even sit underneath if those A2A messages map to function calls.

Andrea lob asked:

How does Claude, in this particular case, invoke that particular “function”? Are there metadata describing capabilities?

Before the dialogue starts, Claude downloads the MCP registry and sees each function’s name, description, JSON schema and version. When it generates a function call, it picks the schema that best matches the intent and fills in the parameters. It’s identical to today’s “function calling” but standardized across vendors.
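For a sense of what that metadata looks like, here is a sketch of a single tool definition with a small argument check. The field names `name`, `description` and `inputSchema` follow the MCP tool definition. The `create_ticket` tool is hypothetical, and the checker covers only required keys and top-level primitive types, not full JSON Schema.

```python
create_ticket = {
    "name": "create_ticket",  # hypothetical tool
    "description": "Open a support ticket.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "priority": {"type": "integer"},
        },
        "required": ["title"],
    },
}

_PY_TYPES = {"string": str, "integer": int, "boolean": bool, "number": (int, float)}

def check_arguments(tool, arguments):
    """Return a list of problems; an empty list means the arguments look valid."""
    schema = tool["inputSchema"]
    problems = [f"missing: {k}" for k in schema.get("required", []) if k not in arguments]
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unknown: {key}")
        elif not isinstance(value, _PY_TYPES[spec["type"]]):
            problems.append(f"wrong type: {key}")
    return problems

print(check_arguments(create_ticket, {"title": "Printer is on fire"}))
print(check_arguments(create_ticket, {"priority": "high"}))
```

The description is what the model reads to decide whether the tool fits the intent, and the schema is what constrains the parameters it fills in.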

Seyyed Omid Badretale asked:

How does Claude know that it needs to use MCP to get the answer and not use its trained data?

Claude treats MCP tools as “external knowledge sources.” If the prompt or system message signals that a function exists for a given intent, the model picks that over hallucinating or referencing its internal weights—especially if you disable free-text responses for certain intents.

3. Authentication & Security in MCP

Jake Sangil Jeong asked:

I agree with all the advantages of MCP, but could you share all the disadvantages of MCP in the real-world we need to factor in such as security/compatibility/testing?

In practice, you’ll manage an extra orchestration layer (adds latency), enforce schema compatibility (requires contract tests), and lock down access (you must secure your MCP endpoint as you would any API). It’s more moving parts, but you get predictable, type-safe calls in return.

Emanuel C asked:

How are access credentials, session management, and related concerns typically handled in an architecture where the data flow goes through MCPs connected to services that require authentication?

Each tool registration includes its auth config (OAuth tokens, API keys, certs). The MCP server keeps them in a secure vault, injects them per call, and enforces session timeouts or token-rotation policies. Your API gateway still handles perimeter security.
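A minimal sketch of per-call credential injection might look like the following. The `VAULT` dict stands in for a real secret manager, and the service name, token value and expiry policy are placeholders invented for the example.

```python
import time

# Stand-in for a real secret manager; the values are placeholders.
VAULT = {"github": {"token": "example-token", "expires_at": time.time() + 3600}}

class CredentialExpired(Exception):
    """Raised when a stored token is past its expiry and needs rotation."""

def inject_auth(service, headers, now=None):
    """Return a copy of `headers` with the bearer token for `service` added."""
    now = time.time() if now is None else now
    secret = VAULT[service]
    if secret["expires_at"] <= now:
        raise CredentialExpired(f"{service} token needs rotation")
    return {**headers, "Authorization": f"Bearer {secret['token']}"}

outgoing = inject_auth("github", {"Accept": "application/json"})
print(sorted(outgoing))
```

The point of doing this inside the server is that the model and the client never see the secret. They only see the tool name and its arguments.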

Austin Born asked:

How should engineering teams think about the security around MCP servers, since they are usually open source?

Treat your MCP endpoint like any internal service: put it behind mTLS, enforce JWT or OAuth on incoming requests, lock down IPs, and monitor logs for anomalous invocations.

Ali Avci asked:

We keep hearing about MCP servers having security breaches. Is MCP server authentication in a reliable state at the moment?

Yes—most mature MCP implementations now support OIDC/OAuth2, fine-grained scopes per tool, audit logging and automated token rotation. The best practice is to run it behind your corporate SSO and secret-management system.

The Cee asked:

MCP’s RPC framing is neat, but in production I embed symbolic rule-validation, live token-budget gating, and provenance metadata directly in the orchestration layer—catching errors before any model call and removing multi-hop RPC overhead. How do you fold verification and audit inline?

You can insert middleware in your MCP router that validates each payload against its JSON schema, checks a token budget counter, stamps provenance metadata, and either rejects invalid calls or routes them to a sandbox. It’s just another layer in your pipeline.
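The middleware idea can be sketched as a chain of small functions that each take a call and a shared context. Everything here is an assumption made for illustration: the `ctx` keys, the `estimated_tokens` field and the `Rejected` exception are not MCP concepts.

```python
import time

class Rejected(Exception):
    """Raised when a call fails a check and must not reach the tool."""

def validate(call, ctx):
    # Check that every argument the tool requires is present.
    required = ctx["required"].get(call["name"], [])
    missing = [k for k in required if k not in call["arguments"]]
    if missing:
        raise Rejected(f"missing arguments: {missing}")
    return call

def budget(call, ctx):
    # Deduct the estimated cost from a shared token budget.
    cost = call.get("estimated_tokens", 0)
    if ctx["tokens_left"] < cost:
        raise Rejected("token budget exhausted")
    ctx["tokens_left"] -= cost
    return call

def provenance(call, ctx):
    # Stamp who made the call and when it passed the checks.
    stamp = {"caller": ctx["caller"], "checked_at": time.time()}
    return {**call, "provenance": stamp}

def run_pipeline(call, ctx, steps=(validate, budget, provenance)):
    """Pass the call through each step in order; any step may raise Rejected."""
    for step in steps:
        call = step(call, ctx)
    return call
```

A caller would catch `Rejected` and either return an error to the client or reroute the call to a sandbox, as described above.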

4. Testing & Failure Handling in MCP

Shwetha Harsha asked:

The use cases and advantages of MCP are numerous. I wanted to know how the failure cases are handled.

Every MCP invocation has an idempotency_key so retries won’t duplicate actions. You also get structured error types from the schema (e.g. ValidationError, AuthError), letting your client decide whether to retry, fall back, or prompt the user.
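As a sketch of how an idempotency key and typed errors could work together, the following retries only transient failures and reuses the same key on every attempt. The error names `ValidationError` and `AuthError` come from the answer above. `TransientError`, `server_invoke` and the in-memory result store are assumptions for the example, not a documented MCP API.

```python
import uuid

class ValidationError(Exception): pass
class AuthError(Exception): pass
class TransientError(Exception): pass  # hypothetical: a retryable failure

_completed = {}  # server side: idempotency key -> stored result

def server_invoke(idempotency_key, action):
    """Run `action` at most once per key; a replayed key returns the stored result."""
    if idempotency_key not in _completed:
        _completed[idempotency_key] = action()
    return _completed[idempotency_key]

def call_with_retry(action, attempts=3):
    """Retry transient failures with the same key; other errors propagate at once."""
    key = str(uuid.uuid4())
    for attempt in range(1, attempts + 1):
        try:
            return server_invoke(key, action)
        except TransientError:
            if attempt == attempts:
                raise
```

A `ValidationError` or `AuthError` is deliberately not caught here, since retrying the same bad input or bad credentials would not help. Those are the cases where the client falls back or prompts the user.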

5. Standards, Versioning & History

The Cee asked:

You’ve framed MCP as a ‘standard’—can you share which formal body governs it, how versioning and compliance are managed, and what ensures true cross-vendor interoperability rather than it becoming a proprietary pitch?

MCP is an open-source project led by Anthropic and developed in collaboration with the community. It uses date-based versioning (e.g., 2025-03-26), and the version changes only when a breaking change is introduced, much as a major version would under semantic versioning. Client and server negotiate a mutually supported version at startup to avoid breakage.
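Version negotiation with date-based identifiers can be sketched in a few lines. This reflects our reading of the handshake (the server echoes the requested version if it supports it, otherwise it offers its latest, and the client then decides whether to proceed) and is not a copy of any SDK. The function names are made up.

```python
SERVER_VERSIONS = ["2024-11-05", "2025-03-26"]

def negotiate(client_requested, server_supported):
    """Server side: echo the requested version if supported, else offer the latest."""
    if client_requested in server_supported:
        return client_requested
    # ISO-style dates sort correctly as plain strings.
    return max(server_supported)

def client_accepts(offered, client_supported):
    """Client side: proceed only if the offered version is one it can speak."""
    return offered in client_supported

print(negotiate("2025-03-26", SERVER_VERSIONS))  # 2025-03-26
print(negotiate("2023-01-01", SERVER_VERSIONS))  # 2025-03-26
```

If `client_accepts` returns `False`, the sensible move is to disconnect rather than guess at message shapes.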

6. Real-Time & Streaming Capabilities of MCP

Carl Cunningham asked:

How does MCP do with real-time, i.e. audio/video streaming applications?

MCP supports real-time streaming via WebSockets or gRPC, enabling bidirectional, low-latency interactions for audio/video or continuous data streams. You open a bidirectional stream, chunk audio or video frames into invoke calls, and receive transcriptions or analysis frames back in real time—complete with progress updates.

7. Deployment, Scaling & Billing in MCP

Aaron Herholdt asked:

Is it best to have an MCP run on the internet? Or locally?

Run locally (on your private network) if you’re dealing with sensitive data or need low latency. Public internet deployment works for SaaS providers, as long as you secure it with mTLS, WAFs and IP allow-lists.

Juan Salas asked:

Does communication between client and server run over HTTP?

By default we use HTTP/1.1+JSON, but you can also wire MCP over WebSockets or gRPC—same messages, different transport.

Jonathan Black asked:

Will MCPs be limited in the same way API calls are limited by companies? Will they have to set up different systems to limit through MCP?

You’ll reuse your existing API gateway or rate-limiter. MCP doesn’t impose its own quotas—you simply meter calls by tool name in your gateway and apply the same throttles you’d apply to REST.
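If your gateway lets you plug in custom logic, metering by tool name can be as simple as a sliding-window counter keyed on the tool. The class below is a sketch under that assumption. The limits and tool names are arbitrary, and a production gateway would normally keep this state in a shared store.

```python
import time
from collections import defaultdict, deque

class PerToolLimiter:
    """Sliding window: at most `limit` calls per tool per `window` seconds."""

    def __init__(self, limit, window=60.0, clock=time.monotonic):
        self.limit, self.window, self.clock = limit, window, clock
        self.calls = defaultdict(deque)  # tool name -> timestamps of recent calls

    def allow(self, tool_name):
        now = self.clock()
        recent = self.calls[tool_name]
        # Forget calls that have aged out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False
        recent.append(now)
        return True

limiter = PerToolLimiter(limit=2, window=60.0)
print([limiter.allow("stripe_create_charge") for _ in range(3)])
```

Each tool gets its own window, so a burst on one tool does not throttle the others.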

Rex Briggs asked:

Can I charge when my tool is used by an agent via MCP? How would that work?

Yes—you include usage metadata (tool name, compute time, payload size) in each MCP envelope. Your billing system can read those logs and apply per-tool rate cards or pay-per-call pricing just like a normal API.
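On the billing side, the work is mostly aggregation over usage records. Here is a sketch with a per-call rate card. The record fields mirror the metadata mentioned above (tool name, compute time, payload size), but the exact field names, the tools and the prices are invented for the example.

```python
from collections import defaultdict
from decimal import Decimal

# Price per call, in your billing currency; the figures are made up.
RATE_CARD = {"search": Decimal("0.002"), "summarize": Decimal("0.010")}

usage_log = [
    {"tool": "search", "compute_ms": 120, "payload_bytes": 512},
    {"tool": "summarize", "compute_ms": 900, "payload_bytes": 4096},
    {"tool": "search", "compute_ms": 95, "payload_bytes": 300},
]

def invoice(log, rates):
    """Sum per-call charges by tool name."""
    totals = defaultdict(Decimal)  # Decimal() starts each total at 0
    for record in log:
        totals[record["tool"]] += rates[record["tool"]]
    return dict(totals)

print(invoice(usage_log, RATE_CARD))
```

`Decimal` is used instead of floats so that small per-call amounts add up exactly. Pricing by compute time or payload size would only change the line inside the loop.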

8. Data-Access & RAG + MCP

Paul Giles asked:

Adding websites or documents as “knowledge” is easy. Our data is in databases like Mongo, MySQL, BigQuery, Cassandra, etc. Assuming I have credentials to these repositories, does RAG + MCP or other agentic AI tools solve for me chatting with this data?

Definitely. You write a “retriever” MCP tool that takes a query, hits your database or vector store, and returns relevant snippets. Then you write a “qa_over_docs” tool that the model calls with those snippets. Credentials, batching and rate-limits live inside your adapter. Voilà, you can chat naturally over your production data.
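The two tools can be sketched against an in-memory SQLite database, which stands in for Mongo, MySQL, BigQuery or whichever store you actually use. The `orders` table, its rows and the prompt wording are all made up. The model call itself is out of scope, so `qa_over_docs` only builds the prompt the model would answer from.

```python
import sqlite3

def make_demo_db():
    """Create a tiny in-memory database to stand in for production data."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, note TEXT)")
    db.executemany(
        "INSERT INTO orders (customer, note) VALUES (?, ?)",
        [
            ("acme", "Refund issued for damaged item"),
            ("globex", "Shipment delayed by two days"),
        ],
    )
    return db

def retriever(db, query, limit=5):
    """Return rows whose note contains `query`, as plain-text snippets."""
    rows = db.execute(
        "SELECT customer, note FROM orders WHERE note LIKE ? LIMIT ?",
        (f"%{query}%", limit),
    ).fetchall()
    return [f"{customer}: {note}" for customer, note in rows]

def qa_over_docs(question, snippets):
    """Build a grounded prompt from the retrieved snippets."""
    context = "\n".join(f"- {s}" for s in snippets)
    return f"Answer using only these records:\n{context}\n\nQuestion: {question}"

db = make_demo_db()
snippets = retriever(db, "refund")
print(qa_over_docs("Which customer got a refund?", snippets))
```

The query uses bound parameters rather than string formatting for the SQL itself, which matters once the search text comes from a model or an end user.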

Next Steps:

- View our MCP Docs.

- No-Code MCP Server w/ your data

Priyansh is a Developer Relations Advocate who loves technology, writes about it, and creates deeply researched content on it.