MCP AMA recording LINK – https://www.youtube.com/watch?v=hZWPAuBzmVs

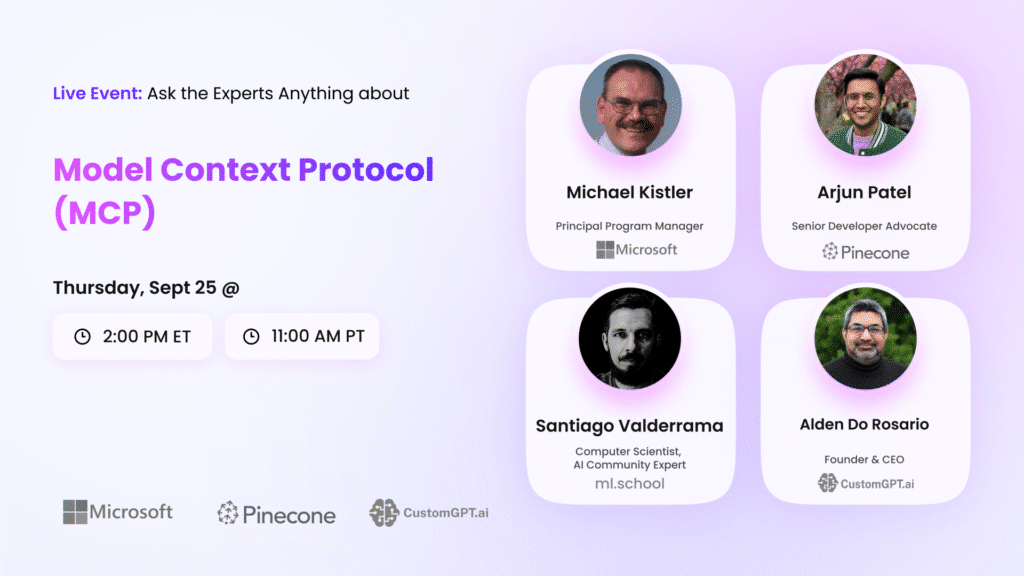

Our recent MCP AMA brought together a stellar panel of experts from across the AI ecosystem—spanning academia, major tech players, infrastructure providers, and applied AI startups. Moderated by Bill Cava (CEO of Generative Labs and adviser to CustomGPT.ai), the conversation featured:

- Santiago – Computer scientist and educator at ML.School

- Mike Kistler – From Microsoft, maintaining the official C# MCP SDK

- Arjun – Developer Advocate at Pinecone

- Alden Do Rosario – CEO of CustomGPT.ai

The discussion was a deep dive into the Model Context Protocol (MCP): what it is, why it matters, and how it’s already transforming the way AI agents connect with tools and data. This recap captures the key insights from the discussion, including technical fundamentals, live demos, real-world implementations, and forward-looking perspectives.

What Is MCP and Why It Matters

Santiago, a computer scientist and educator at ML.School, kicked things off with the big picture. He explained the pain of today’s AI ecosystem: every application needs to hardcode integrations with every external API. That means brittle code, maintenance headaches, and a scaling problem that grows as an M×N explosion.

MCP fixes this by standardizing the way AI agents interact with external tools. Instead of bespoke connectors, developers just implement the protocol once. Every MCP server exposes its capabilities in a way that any MCP-compatible client can understand.

Key takeaways from Santiago’s talk:

- MCP shifts the integration model from M×N to M+N, dramatically simplifying connections.

- It’s more than just tool calling: MCP also supports resources, prompt templates, elicitation (clarifying questions to users), and even sampling (delegating queries back to the local LLM).

- Everyone wins: developers avoid fragile integrations, tool builders gain instant distribution, and end-users benefit from smarter, more capable AI agents.
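
To make the M×N versus M+N point concrete, here is a small worked count. The figures (10 applications, 25 tools) are illustrative assumptions, not numbers from Santiago's talk, and the function names are made up for this sketch.

```python
def bespoke_integrations(apps: int, tools: int) -> int:
    """Every app hardcodes a connector for every tool: M x N."""
    return apps * tools


def protocol_integrations(apps: int, tools: int) -> int:
    """Each app implements a client once and each tool a server once: M + N."""
    return apps + tools


if __name__ == "__main__":
    apps, tools = 10, 25  # illustrative numbers only
    print(bespoke_integrations(apps, tools))   # 250
    print(protocol_integrations(apps, tools))  # 35
```

Adding one more tool costs 10 new connectors in the first model and a single server implementation in the second.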

Building with the MCP SDK in C#

Next, Mike Kistler from Microsoft took us deeper into implementation. Microsoft maintains the official C# MCP SDK, already being tested across products like Copilot Studio and even considered for Xbox.

Highlights from Mike’s session:

- The SDK tracks the evolving spec closely (currently version 0.3.0-preview4, with 0.4.0-preview1 on the horizon).

- Supported features include tools, resources, prompts, authentication (OAuth 2.1), elicitation, sampling, and event notifications.

- Two transports are available: standard I/O (for local tools) and streamable HTTP (for remote servers).

Mike then live-demoed creating a minimal MCP server in C# using .NET 10. Within minutes, he spun up a random-number generator MCP server, plugged it into VS Code, and showed it responding to tool calls in GitHub Copilot. The demo illustrated how simple it can be to publish, install, and test new MCP servers.
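
Mike's demo used the C# SDK, which is not reproduced here. As a rough, SDK-free illustration of what a random-number tool looks like on the wire, the sketch below handles the two JSON-RPC methods a client uses to discover and invoke tools (`tools/list` and `tools/call`). The tool name `random_number` and the function `handle_message` are hypothetical, and the sketch leaves out the initialization handshake and the transport, so treat it as a picture of the message shapes rather than a working MCP server.

```python
import json
import random

# Hypothetical tool definition; the name and schema are illustrative.
TOOLS = [{
    "name": "random_number",
    "description": "Return a random integer between min and max (inclusive).",
    "inputSchema": {
        "type": "object",
        "properties": {"min": {"type": "integer"}, "max": {"type": "integer"}},
        "required": ["min", "max"],
    },
}]


def handle_message(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the JSON response."""
    req = json.loads(raw)
    method, params = req.get("method"), req.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call" and params.get("name") == "random_number":
        args = params.get("arguments", {})
        value = random.randint(args["min"], args["max"])
        result = {"content": [{"type": "text", "text": str(value)}],
                  "isError": False}
    else:
        # Unknown methods and unknown tools both get a generic error here.
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": -32601,
                                     "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})


if __name__ == "__main__":
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "random_number",
                       "arguments": {"min": 1, "max": 6}}}
    print(handle_message(json.dumps(call)))
```

In a real server the SDK generates the tool schema and dispatch for you; the point here is only that a tool is a named function with a JSON schema for its inputs.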

Vector Databases Meet MCP: Pinecone’s Approach

Arjun, Developer Advocate at Pinecone, shared how their vector database platform is embracing MCP. Pinecone has launched three MCP servers:

- Developer MCP – gives developers index-level access to Pinecone directly in IDEs like VS Code, with tools for listing indexes, upserting data, querying, and searching documentation.

- Assistant MCP (local) – wraps Pinecone’s no-code RAG assistant into an MCP server, letting applications ground LLM outputs in pre-uploaded documents without complex pipelines.

- Assistant MCP (remote) – enables production-ready assistants to be accessed programmatically.

Key benefits:

- Rapid prototyping and debugging within IDEs.

- No-code/low-code creation of RAG-powered assistants.

- Seamless grounding of AI agents in private data via the context API.

Limitations today: Developer MCP is not designed for heavy upserts (millions of vectors), and embedding options are currently limited to Pinecone-hosted inference models (including Microsoft’s multilingual E5 and NVIDIA’s Llama-based models).

Business Applications: CustomGPT.ai and MCP

Finally, Alden Do Rosario, CEO of CustomGPT.ai, presented how MCP fits into their no-code platform. CustomGPT is already known for its hallucination-free RAG solution, benchmarked against industry leaders for accuracy. With MCP, they’re making this power easily accessible inside any agent environment.

Key announcements:

- CustomGPT projects can now be instantly exposed as MCP servers. Non-technical users can upload documents, connect data sources (Google Drive, SharePoint, websites, YouTube, etc.), and generate a production-ready MCP endpoint in under five minutes.

- CustomGPT is also adding MCP client capabilities. This means users can plug in other MCP servers (e.g., HubSpot, email, CRM systems) directly into their CustomGPT agent, enabling complex workflows like sending emails or updating tickets—no code required.

Alden framed MCP as “USB for AI agents”: a simple, universal connector that could unlock the same kind of ecosystem growth HTTP did for the web.

Audience Q&A Highlights

The session ended with a lively Q&A. Here are some of the most important insights:

- Is MCP stable? It’s still evolving, but with broad support from Anthropic, Microsoft, OpenAI, Google, and Pinecone, momentum is strong. No competing standards are on the horizon.

- Security and authentication? MCP supports OAuth 2.1 and emphasizes keeping humans in the loop for tool calls. The spec includes a detailed “security best practices” section developers should review.

- Tool selection quality? Dependent on the LLM’s reasoning and the number of tools exposed. Best practice: start small, validate tool use, then expand gradually.

- Performance tradeoffs? MCP reduces latency versus letting LLMs “figure out” APIs via free-form text, but chaining too many MCP calls can introduce lag. For consistency, developers should minimize reliance on LLM-driven orchestration wherever code suffices.

- Future possibilities? Panelists envisioned agent-to-agent communication, tool registries (like DNS for MCP servers), and natural language becoming the default interface for applications.

Answers to questions we were not able to address during the AMA

1) Getting started (no-code) & “marketplaces”

Q1. I’m not a coder. Is there a smart MCP tool that does this for me?

Yes. Three practical routes today:

- CustomGPT.ai No-Code Hosted MCP Server – LINK

- Point-and-click “assistant” → MCP: Pinecone’s Assistant gives you a hosted RAG assistant that already exposes an MCP server endpoint; you copy the endpoint into a client (Cursor, Claude Desktop, etc.). This is explicitly documented (currently early access). (Pinecone Docs)

- Install-ready MCP in clients: Tools like Cursor document how to install servers and wire up auth without writing code. (Cursor)

(If you’re using CustomGPT.ai already: exposing your project as an MCP server or adding third-party MCP servers can be done in a few clicks inside your project, with no coding; that’s exactly the “USB for AI agents” idea Alden described in the AMA.)

Q2. Is there a list of open-source projects that support MCP?

There’s no single canonical list, but these are good, community-maintained directories:

- Anthropic’s Example Clients page (official listing of clients that speak MCP). (Model Context Protocol)

- GitHub “Awesome” lists of servers (curated, open-source). (GitHub)

- The modelcontextprotocol/servers GitHub repository (many OSS servers). (GitHub)

- Third-party directories/“markets” (use judgment; quality varies). (MCP Market)

Q3. Is there an open marketplace for MCP agents?

Not an “official” one. There are community markets/directories (MCP Market, Cursor directories, etc.), but they’re not vetted and vary in quality and security posture; treat them like you would any third-party plugin store. (MCP Market)

Q4. Some providers (e.g., Google Drive) don’t offer an official MCP. Can we still create one (e.g., in CustomGPT) and how secure is it?

Yes. Any vendor API (Google Drive, etc.) can be wrapped behind your own MCP server that uses OAuth to call the vendor API. Security depends on your implementation:

- Use OAuth 2.1 flows as defined in the MCP Authorization spec for HTTP transport; apply least-privilege scopes (e.g., read-only if that’s all you need). (Model Context Protocol)

- Follow MCP Security Best Practices (human-in-the-loop consent, clear tool descriptions, rate limits, audit logs, data-minimization). (Model Context Protocol)

- Prefer a mature OAuth provider / library (several vendors document drop-in approaches for MCP). (Cloudflare Docs)
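
As one possible way to apply least-privilege scopes in a Drive wrapper, the sketch below maps each tool to the OAuth scope it needs and denies anything not listed. The two scope URLs are Google's published Drive scopes; the tool names and the `authorize` helper are hypothetical, and a real server would rely on an OAuth library for token validation rather than a bare set comparison.

```python
DRIVE_READONLY = "https://www.googleapis.com/auth/drive.readonly"
DRIVE_FULL = "https://www.googleapis.com/auth/drive"

# Hypothetical tools exposed by the wrapper and the scope each one requires.
REQUIRED_SCOPES = {
    "search_files": {DRIVE_READONLY},
    "read_file": {DRIVE_READONLY},
    "delete_file": {DRIVE_FULL},
}


def authorize(tool: str, granted: set[str]) -> bool:
    """Allow a tool call only if every required scope was granted.

    Scopes are compared literally; this sketch does not model the fact
    that a broader scope may imply a narrower one at the provider.
    """
    required = REQUIRED_SCOPES.get(tool)
    if required is None:
        return False  # unknown tools are denied by default
    return required <= granted


if __name__ == "__main__":
    token_scopes = {DRIVE_READONLY}
    print(authorize("read_file", token_scopes))    # True
    print(authorize("delete_file", token_scopes))  # False
```

If read access is all the agent needs, requesting only the read-only scope means a destructive tool cannot succeed even if the model tries to call it.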

2) Ecosystem & adoption

Q5. What’s the chance MCP becomes a real industry standard? Are the majors on board?

MCP is open and already has visible support: Anthropic developed it, Microsoft maintains an official C# SDK (with auth support), and Pinecone provides Assistant MCP servers. Many IDEs/clients have added MCP. That’s encouraging, but it’s still maturing. (Anthropic)

3) Architecture & positioning (MCP vs REST; host/client)

Q6. Will MCP replace REST for microservice communication?

No—these solve different problems.

- MCP: a tool/agent interface (JSON-RPC over stdio or streamable HTTP) for LLM agents to call capabilities securely with human consent. (Model Context Protocol)

- REST/gRPC/Kafka/etc.: service-to-service comms optimized for reliability, latency, typing, and scale inside your platform.

Most teams will keep REST/gRPC for microservices and expose selected workflows via MCP so agents can safely perform tasks. Think “MCP on the edge of your system,” not a replacement for your internal mesh.

Q7. How does the MCP host select an MCP client? What does that interaction look like?

Terminology:

- Host: the app embedding the LLM chat (e.g., Cursor, Claude Desktop).

- Client: the embedded MCP client inside the host that talks to servers.

- Server: the tool provider.

In practice, the host loads whichever MCP client(s) it ships with, and you configure servers (via config or UI). The host advertises those servers’ tools/resources/prompts to the model, which then selects tools at runtime. Discovery across hosts isn’t standardized—you configure what you trust. See the spec’s transport and example clients pages for how hosts wire MCP in.
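
The host/client/server relationship can be pictured with a toy model. In the sketch below, each `ServerConnection` stands in for one client session bound to one configured server, and the `Host` gathers the tools from all of them into a single list for the model, then routes a chosen call back to the right server. All class names, and the `server.tool` naming scheme, are assumptions made for illustration; real hosts differ in how they name and present tools.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ServerConnection:
    """Stands in for one MCP client session bound to one server."""
    name: str
    tools: dict[str, Callable]  # tool name -> callable implementing it


class Host:
    """Toy host: holds configured connections and routes tool calls."""

    def __init__(self) -> None:
        self.connections: dict[str, ServerConnection] = {}

    def add_server(self, conn: ServerConnection) -> None:
        self.connections[conn.name] = conn

    def advertised_tools(self) -> list[str]:
        """Names the host would present to the model."""
        return sorted(f"{server}.{tool}"
                      for server, conn in self.connections.items()
                      for tool in conn.tools)

    def call(self, qualified_name: str, **arguments):
        """Route a model-selected tool call to the owning server."""
        server, tool = qualified_name.split(".", 1)
        return self.connections[server].tools[tool](**arguments)


if __name__ == "__main__":
    host = Host()
    host.add_server(ServerConnection("calc", {"add": lambda a, b: a + b}))
    print(host.advertised_tools())          # ['calc.add']
    print(host.call("calc.add", a=1, b=2))  # 3
```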

4) Security & risk management

Q8, Q9, Q10, Q11. How do you prevent risks (sensitive data exposure, tool poisoning, “if we add everything anyone can access,” etc.)? What are the must-do steps?

Use a layered approach, aligned to the MCP Security Best Practices:

- Human-in-the-loop & consent

- Hosts must obtain explicit user approval before invoking tools or sending prompts (sampling). Make tool descriptions specific so users understand data flows. (Model Context Protocol)

- Least privilege & scoping

- OAuth 2.1 with narrow scopes; separate prod/test tenants; per-user tokens; short TTL refresh tokens. (Model Context Protocol)

- On your MCP server, access controls by user/role/project; deny by default.

- Egress controls & data minimization

- Strip or mask sensitive fields before returning to the LLM.

- Prefer returning handles/links over raw data when feasible. (Model Context Protocol)

- Prompt-injection & tool-poisoning mitigations

- Treat all retrieved content as untrusted; sanitize/validate before using outputs to call tools.

- Use allow-lists for tool routing; avoid forwarding arbitrary text to powerful actions. (Model Context Protocol)

- Isolation & sandboxing

- Run risky servers in separate processes / containers; separate secrets per server; don’t share API keys across tenants. (Model Context Protocol)

- Logging, audit, and rate limiting

- Log tool invocations, inputs/outputs (with redaction), user approvals, and errors.

- Rate limit and throttle servers to reduce blast radius. (Model Context Protocol)

- Supply-chain hygiene

- Pin server versions; review code for community MCP servers before deployment.

- Prefer servers from trusted orgs; verify URLs and TLS (HTTP transport).

If you’re wrapping third-party APIs (e.g., Drive), lean on battle-tested OAuth infrastructure (IdP or managed libraries) rather than inventing your own. (Cloudflare Docs)
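
Three of the layers above (consent, redaction, and audit logging) can be combined in one small wrapper. This is a minimal sketch under stated assumptions: `guarded_call`, `redact`, and the `approve` callback are hypothetical names, the email pattern is deliberately simple, and a production system would redact many more field types and persist the audit log somewhere durable.

```python
import re

# Deliberately simple pattern; real redaction needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Mask email addresses before text is returned to the LLM."""
    return EMAIL.sub("[redacted-email]", text)


def guarded_call(tool_name, tool_fn, arguments, approve, audit_log):
    """Run a tool only after approval, log the decision, redact the output.

    `approve` is a callback (tool_name, arguments) -> bool that stands in
    for the host's human-in-the-loop prompt.
    """
    approved = approve(tool_name, arguments)
    audit_log.append({"tool": tool_name, "approved": approved})
    if not approved:
        return None
    return redact(tool_fn(**arguments))


if __name__ == "__main__":
    log = []
    lookup = lambda user: f"{user} <ada@example.com>"
    print(guarded_call("lookup", lookup, {"user": "Ada"},
                       lambda name, args: True, log))
    print(log)
```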

5) Performance, latency, large results, and consistency

Q12. What are the performance/latency/consistency trade-offs?

- Faster than “let the LLM browse”: Declaring tools via MCP typically beats ad-hoc browsing or multi-step scraping.

- Every hop adds latency: Avoid deep tool-chains (tool → tool → tool).

- Consistency: Keep deterministic steps in code; use LLMs for selection, not for critical arithmetic or schema munging.

- Transports: For remote servers, streamable HTTP reduces time-to-first-byte and supports streaming results to the host. (Model Context Protocol)

Q13. A tool returns a large dataset (e.g., 1,000 rows). What should we do?

- Prefer pagination / cursoring at the server; return page handles, not full dumps.

- Summarize or aggregate server-side (top-K, totals, histograms).

- Return references (resource URIs) that the host can fetch on demand.

- Use the streamable HTTP transport so results can start arriving before the full payload is ready.

Q14. If I publish a public MCP server, how do I run it (logging, monitoring, limits)?

Treat it like any internet-facing API service:

- AuthN/Z per MCP Authorization spec (OAuth 2.1). (Model Context Protocol)

- Observability: structured logs, metrics, traces; 4xx/5xx, latency SLOs.

- Protection: rate limiting, WAF, abuse detection, input validation.

- Ops: versioning, deprecation policy, error budgets, health checks.

For .NET, the official C# SDK integrates cleanly with ASP.NET Core’s logging/metrics pipelines. Equivalent stacks exist in other languages. (GitHub)
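
Rate limiting is one of the protections listed above that is easy to prototype. The sketch below is a generic token-bucket limiter, not something taken from any MCP SDK; the class name, parameters, and injectable clock are assumptions chosen to keep the example testable. In production this would usually live in a gateway or shared store rather than in process memory.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.updated = clock()

    def allow(self) -> bool:
        """Consume one token if available; otherwise reject the request."""
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate=1.0, capacity=2)
    print([bucket.allow() for _ in range(3)])  # the third call is rejected
```

Keeping one bucket per user or per token, rather than one per server, limits the damage a single misbehaving agent can do.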

6) Data & retrieval choices

Q15. I’m storing long documents in MongoDB and auto-generating NoSQL queries, but results are poor. Should I switch to Pinecone for long-form docs?

If your queries are semantic (“What’s the policy if X happens?”), a vector store (Pinecone, pgvector, Weaviate, etc.) with chunking + embeddings + hybrid search + re-ranking will outperform ad-hoc NoSQL queries generated by an LLM. Pinecone’s Assistant MCP can hide the whole pipeline for you (ingestion, chunking, retrieval, re-ranking). (Pinecone Docs)

If your queries are exact filters (“status:active since 2023-10-01”), keep MongoDB—but feed results through retrieval/rerank for better grounding. Many teams run both: operational DB for filters, vector DB for semantic recall.
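
The "run both" pattern can be illustrated with a toy example: apply the exact filter first, then rank the survivors by vector similarity. The three-dimensional vectors below are hand-written stand-ins for real embeddings, and the document IDs and `search` function are hypothetical. A real deployment would use an embedding model and a vector store rather than an in-memory list.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hand-written vectors standing in for real embeddings.
DOCS = [
    {"id": "refund-policy", "status": "active", "vec": [0.9, 0.1, 0.0]},
    {"id": "old-refund-policy", "status": "archived", "vec": [0.95, 0.05, 0.0]},
    {"id": "shipping-faq", "status": "active", "vec": [0.1, 0.9, 0.0]},
]


def search(query_vec: list[float], status: str, top_k: int = 2) -> list[str]:
    """Exact filter on metadata first, then semantic ranking."""
    candidates = [d for d in DOCS if d["status"] == status]
    ranked = sorted(candidates,
                    key=lambda d: cosine(query_vec, d["vec"]),
                    reverse=True)
    return [d["id"] for d in ranked[:top_k]]


if __name__ == "__main__":
    print(search([1.0, 0.0, 0.0], status="active"))
    # ['refund-policy', 'shipping-faq']
```

Note that the archived document is the closest semantic match but never appears, because the exact filter removes it before ranking.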

7) Robotics & MCP

Q16. How will we connect humanoid robots—will they each be an MCP server?

Likely both:

- Real-time control (millisecond loops, PID, safety interlocks): use robotics middleware (e.g., ROS 2/ros2_control, DDS QoS) and/or gRPC/WebRTC; MCP is not a real-time control bus. (control.ros.org)

- High-level tasks (“Pick up the box”, “read sensor logs, plan route”): expose safe, parameterized actions as MCP tools on the robot or a gateway, so an agent can plan and get human approval before performing operations. (Many robots already expose JSON-RPC/gRPC; MCP is the agent-facing wrapper.) (viam.com)
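
A "safe, parameterized action" might look like the sketch below: the agent-facing tool validates its arguments against fixed bounds and returns a command flagged for human approval, leaving the actual motion to the robot's real-time controller. The `Workspace` limits, the `plan_move` function, and the command format are all hypothetical; nothing here talks to real hardware or to any robotics middleware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workspace:
    """Hypothetical safety envelope for a high-level move request."""
    max_x_m: float = 2.0
    max_y_m: float = 2.0
    max_speed_mps: float = 0.5


def plan_move(x: float, y: float, speed: float,
              ws: Workspace = Workspace()) -> dict:
    """Validate a move request and return a command awaiting approval.

    Raises ValueError instead of clamping, so that out-of-range requests
    are visible to the agent and the human reviewer.
    """
    if not (0 <= x <= ws.max_x_m and 0 <= y <= ws.max_y_m):
        raise ValueError("target outside workspace")
    if not (0 < speed <= ws.max_speed_mps):
        raise ValueError("speed outside allowed range")
    return {"action": "move_to", "x": x, "y": y, "speed": speed,
            "requires_approval": True}


if __name__ == "__main__":
    print(plan_move(1.0, 1.5, 0.2))
```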

8) Operating many MCP servers

Q17. What’s the best way to manage all your MCP servers?

- Catalog/registry: maintain an internal directory (name, owner, scopes, env, version, change log).

- Environments: separate dev/stage/prod endpoints and credentials.

- Policy: approval workflow for adding a server to a host; least-privilege scopes; expiration/rotation.

- Monitoring: central logs + metrics per server; error budgets; synthetic pings.

- Versioning: semantic versions, deprecations, and migration notes.

Most of this aligns with the spec’s security/ops guidance; treat MCP servers like public APIs with stricter consent UX. (Model Context Protocol)
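
An internal catalog does not need to be elaborate to be useful. The sketch below models one registry entry with the fields listed above and adds a helper that flags servers whose credentials are due for rotation. The field names, the example servers, and the 30-day window are assumptions for illustration only.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ServerEntry:
    """One row in a hypothetical internal MCP server catalog."""
    name: str
    owner: str
    env: str  # "dev", "stage", or "prod"
    version: str
    scopes: tuple[str, ...]
    credential_expires: date


def expiring(entries: list[ServerEntry], today: date,
             within_days: int = 30) -> list[str]:
    """Names of servers whose credentials expire within the window."""
    return [e.name for e in entries
            if (e.credential_expires - today).days <= within_days]


if __name__ == "__main__":
    catalog = [
        ServerEntry("docs-search", "platform-team", "prod", "1.2.0",
                    ("docs:read",), date(2025, 1, 15)),
        ServerEntry("ticketing", "support-team", "prod", "0.9.1",
                    ("tickets:read", "tickets:write"), date(2025, 6, 1)),
    ]
    print(expiring(catalog, today=date(2025, 1, 1)))  # ['docs-search']
```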

9) “If we add everything, can anyone access it?”

Q18–Q19 (combined).

Adding more servers increases attack surface and context confusion. Concrete guardrails:

- Only add trusted servers; review code or vendor security docs.

- Enforce per-user consent for each tool call; show clear, human-readable tool descriptions and what data flows where. (Model Context Protocol)

- Use allow-lists for which servers can be loaded in each host/workspace; disable by default.

- Separate tenants and secrets; audit all calls; rate limit. (Model Context Protocol)
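
The "allow-list, disabled by default" guardrail reduces to a small lookup. In this sketch the workspace and server names are invented, and the policy lives in a dictionary for brevity; in practice it would more likely come from managed configuration.

```python
# Hypothetical policy: which servers each workspace may load.
ALLOWED_SERVERS = {
    "support-workspace": {"customgpt-docs", "ticketing"},
    "engineering-workspace": {"pinecone-dev"},
}


def can_load(workspace: str, server: str) -> bool:
    """A server loads only if explicitly allowed for that workspace."""
    return server in ALLOWED_SERVERS.get(workspace, set())


if __name__ == "__main__":
    print(can_load("support-workspace", "ticketing"))     # True
    print(can_load("support-workspace", "pinecone-dev"))  # False
    print(can_load("new-workspace", "ticketing"))         # False
```

A workspace with no entry gets an empty allow-list, so new workspaces start with no servers until someone approves them.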

Sources & further reading

- MCP specification (core, transports, authorization, security best practices). (Model Context Protocol)

- Anthropic: Introducing MCP. (Anthropic)

- Official C# SDK (maintained with Microsoft; releases & docs). (GitHub)

- Pinecone Assistant MCP (docs & blog). (Pinecone Docs)

- Clients/Directories: Example Clients (official), Awesome MCP servers (community), Cursor docs. (Model Context Protocol)

- CustomGPT.ai Hosted MCP Server.

Closing Thoughts: MCP’s Future

Across all four speakers, one theme was clear: MCP is more than a protocol—it’s an ecosystem enabler.

- Alden compared it to HTTP for agents.

- Mike likened it to a natural-language-first successor to OpenAPI.

- Arjun highlighted easier human review and trust in tool calls.

- Santiago envisioned autonomous agents that can discover and securely select tools from trusted registries.

The consensus: MCP is still early, but adoption is accelerating quickly. If yesterday’s AMA is any indication, we’re witnessing the foundation of the next era of AI applications.

✦ Want to dive deeper? The full MCP specification and resources are available on GitHub. Stay tuned—CustomGPT.ai will also be publishing a follow-up blog post addressing all unanswered AMA questions in detail.

Priyansh is a Developer Relations Advocate who loves technology, writes about it, and creates deeply researched content about it.