TL;DR

- Use the RAG Triad (Context Relevance, Faithfulness, and Answer Relevance) as your core evaluation framework.

- RAGAS provides production-ready implementations of these metrics using LLM-as-a-judge approaches.

- For enterprise applications, combine automated metrics with human evaluation on 100-200 representative queries.

- Focus on Faithfulness (hallucination detection) and Context Precision for customer-facing applications.

Building a RAG system is straightforward; building one that consistently delivers accurate, relevant responses is not. Without proper evaluation metrics, you cannot tell a minor improvement from a system-breaking regression.

Traditional NLP metrics like BLEU and ROUGE miss the nuanced requirements of RAG systems: factual accuracy, context utilization, and answer relevance.

This guide provides a comprehensive framework for measuring and optimizing RAG performance using both automated metrics and human evaluation strategies that actually correlate with user satisfaction.

The RAG Evaluation Challenge

Why Traditional Metrics Fall Short

BLEU and ROUGE Limitations:

- Surface-level comparison: Focus on n-gram overlap, not semantic correctness

- No factual grounding: Can’t detect hallucinations or factual errors

- Context blindness: Don’t evaluate how well retrieved context is utilized

- Reference dependency: Require human-written “gold standard” answers
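The first two limitations can be seen in a few lines of code. The sketch below is not BLEU itself, only clipped unigram precision (the simplest ingredient of BLEU), and the example sentences are made up for illustration. It shows a factually wrong answer outscoring a correct paraphrase purely because it shares more words with the reference.

```python
from collections import Counter


def unigram_precision(candidate: str, reference: str) -> float:
    """Clipped unigram precision: share of candidate tokens found in the reference."""
    cand_tokens = candidate.lower().split()
    if not cand_tokens:
        return 0.0
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(cand_tokens)
    # Each candidate token is credited at most as often as it appears in the reference
    overlap = sum(min(count, ref_counts[token]) for token, count in cand_counts.items())
    return overlap / len(cand_tokens)


# Illustrative sentences (assumptions, not taken from any dataset)
reference = "the drug is safe for children under 12"
wrong = "the drug is not safe for children under 12"                 # contradicts the reference
right = "kids younger than twelve can take this medication safely"  # correct paraphrase

wrong_score = unigram_precision(wrong, reference)
right_score = unigram_precision(right, reference)
print(f"wrong answer: {wrong_score:.2f}, correct paraphrase: {right_score:.2f}")
```

The contradicting answer scores higher because overlap metrics reward shared tokens, not shared meaning.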

RAG-Specific Requirements:

- Factual consistency: Generated answers must be grounded in retrieved context

- Retrieval quality: Measuring whether the right documents were found

- Context utilization: How effectively the LLM uses provided information

- Answer completeness: Whether responses fully address the query

The RAG Evaluation Framework

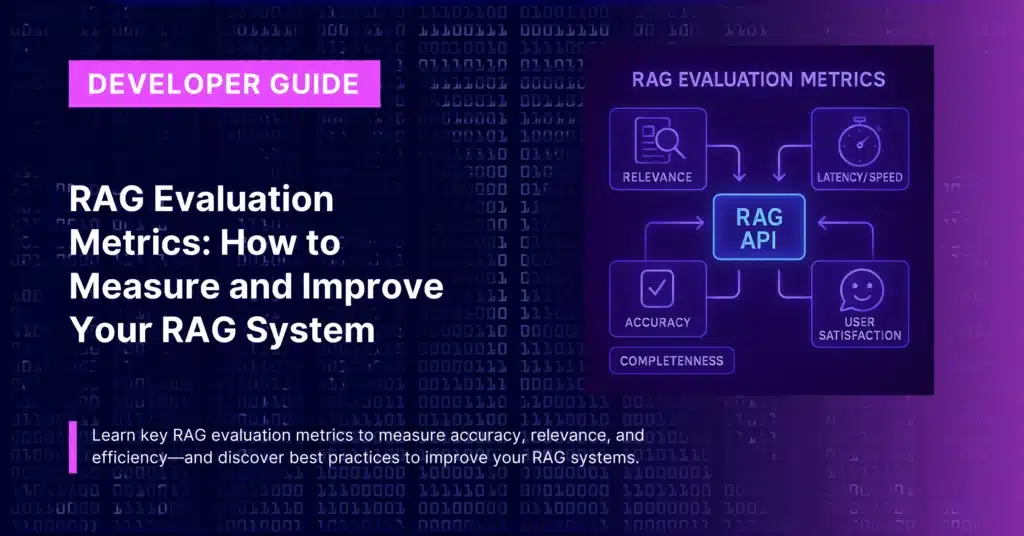

Modern RAG evaluation focuses on three core dimensions, known as the RAG Triad:

- Context Relevance: Did we retrieve the right information?

- Faithfulness/Groundedness: Is the answer supported by the retrieved context?

- Answer Relevance: Does the response actually answer the question?

Core RAG Evaluation Metrics

1. Context Relevance (Retrieval Quality)

Measures whether retrieved documents contain information relevant to answering the query.

Implementation with RAGAS:

    # Note: context_relevancy shipped in early RAGAS releases; newer versions
    # may rename or drop it, so check the version you have installed.
    from datasets import Dataset
    from ragas import evaluate
    from ragas.metrics import context_relevancy

    # Evaluation data structure
    eval_data = {
        'question': ["What are the health benefits of meditation?"],
        'contexts': [["Meditation reduces stress hormones and improves focus."]],
        'answer': ["Meditation provides several health benefits including stress reduction."]
    }

    dataset = Dataset.from_dict(eval_data)
    result = evaluate(dataset, metrics=[context_relevancy])
    print(f"Context Relevance Score: {result['context_relevancy']}")

Manual Implementation:

    def calculate_context_relevance(query, contexts, llm_evaluator):
        """Calculate what percentage of retrieved contexts are relevant"""
        # llm_evaluator is assumed to expose generate(prompt) -> str
        relevant_count = 0
        for context in contexts:
            prompt = f"""
            Query: {query}
            Context: {context}
            Is this context relevant for answering the query?
            Answer only 'Yes' or 'No'.
            """
            response = llm_evaluator.generate(prompt).strip().lower()
            # Accept "yes", "Yes.", "yes, because ..." and similar variants
            if response.startswith('yes'):
                relevant_count += 1
        return relevant_count / len(contexts) if contexts else 0

Optimal Ranges:

- Excellent: >0.9 (90%+ of retrieved docs are relevant)

- Good: 0.7-0.9 (70-90% relevance)

- Poor: <0.5 (More noise than signal)

2. Context Precision

Measures whether relevant documents appear early in the retrieval results.

Why It Matters:

- Early results have more influence on LLM generation

- Users expect the most relevant information first

- Impacts both accuracy and user trust

Implementation:

    def context_precision(query, contexts, ground_truth_context):
        """Average precision@k over every rank position in the retrieved list"""
        # check_relevance is assumed to be defined elsewhere and to return a bool
        relevance_flags = []
        precisions = []
        for i, context in enumerate(contexts):
            # Check if context contains relevant information
            relevance_flags.append(bool(check_relevance(context, ground_truth_context)))
            # Precision at position i+1: share of relevant contexts seen so far
            precisions.append(sum(relevance_flags) / (i + 1))
        # Average precision across all positions (0.0 when nothing was retrieved)
        return sum(precisions) / len(precisions) if precisions else 0.0

3. Context Recall

Measures whether all relevant information needed to answer the query was retrieved.

RAGAS Implementation:

    from datasets import Dataset
    from ragas import evaluate
    from ragas.metrics import context_recall

    # Note: Context recall requires ground truth answers.
    # The ground-truth column name has changed between RAGAS versions,
    # so check the schema your installed version expects.
    eval_dataset = Dataset.from_dict({
        'question': ["What causes climate change?"],
        'contexts': [["CO2 emissions from fossil fuels are the primary driver."]],
        'ground_truths': [["Climate change is caused by greenhouse gas emissions, primarily CO2 from burning fossil fuels."]]
    })

    result = evaluate(eval_dataset, metrics=[context_recall])
    print(f"Context Recall: {result['context_recall']}")

Production Alternative (No Ground Truth Required):

    def estimate_context_recall(query, retrieved_contexts, llm_evaluator):
        """Estimate recall by asking LLM if answer can be fully constructed"""
        combined_context = "\n".join(retrieved_contexts)
        prompt = f"""
        Query: {query}
        Available Context: {combined_context}
        Based on this context, can you provide a complete answer to the query?
        Rate completeness from 1-5:
        1: Very incomplete, major information missing
        5: Complete, all necessary information present
        Score:
        """
        response = llm_evaluator.generate(prompt)
        # extract_score is assumed to parse the 1-5 rating out of the reply
        score = extract_score(response)
        # Scale the 1-5 rating to 0.2-1.0 (a rating of 1 maps to 0.2, not 0)
        return score / 5.0

4. Faithfulness/Groundedness

Faithfulness is the most critical metric for production RAG systems: it measures whether the generated answer is supported by the retrieved context.

RAGAS Faithfulness:

    from datasets import Dataset
    from ragas import evaluate
    from ragas.metrics import faithfulness

    eval_data = {
        'question': ["What is the capital of France?"],
        'answer': ["The capital of France is Paris, located in the north of the country."],
        'contexts': [["Paris is the capital and largest city of France."]]
    }

    dataset = Dataset.from_dict(eval_data)
    result = evaluate(dataset, metrics=[faithfulness])
    print(f"Faithfulness Score: {result['faithfulness']}")

Custom Faithfulness Implementation:

    def calculate_faithfulness(answer, contexts, llm_evaluator):
        """Break answer into statements and check each against context"""
        # Step 1: Extract claims from the answer
        claims_prompt = f"""
        Extract individual factual claims from this answer:
        "{answer}"
        Return each claim on a separate line.
        """
        claims_response = llm_evaluator.generate(claims_prompt)
        claims = [claim.strip() for claim in claims_response.split('\n') if claim.strip()]

        # Step 2: Check each claim against context
        supported_claims = 0
        combined_context = " ".join(contexts)
        for claim in claims:
            verification_prompt = f"""
            Context: {combined_context}
            Claim: {claim}
            Is this claim supported by the context? Answer 'Yes' or 'No'.
            """
            verification = llm_evaluator.generate(verification_prompt).strip().lower()
            # Accept "yes", "Yes.", and similar variants
            if verification.startswith('yes'):
                supported_claims += 1

        # An answer with no extractable claims is treated as fully faithful
        return supported_claims / len(claims) if claims else 1.0

5. Answer Relevance

Measures how directly the generated response addresses the original query.

RAGAS Implementation:

    from ragas.metrics import answer_relevancy

    # Answer relevance is calculated by generating questions from the answer
    # and measuring similarity to the original question.
    # `dataset` and `evaluate` are reused from the faithfulness example above.
    result = evaluate(dataset, metrics=[answer_relevancy])
    print(f"Answer Relevance: {result['answer_relevancy']}")

Custom Implementation:

    def calculate_answer_relevance(query, answer, llm_evaluator):
        """Generate potential questions from answer and compare to original"""
        # Generate questions that this answer would address
        question_gen_prompt = f"""
        Given this answer: "{answer}"
        Generate 3 questions that this answer would appropriately address.
        List them one per line.
        """
        generated_questions = llm_evaluator.generate(question_gen_prompt)
        questions_list = [q.strip() for q in generated_questions.split('\n') if q.strip()]

        # Calculate semantic similarity between original and generated questions
        # (calculate_semantic_similarity is assumed to be defined elsewhere)
        similarities = []
        for gen_question in questions_list:
            similarity = calculate_semantic_similarity(query, gen_question)
            similarities.append(similarity)

        return max(similarities) if similarities else 0

Advanced Evaluation Metrics

6. Answer Correctness

Combines factual accuracy with semantic similarity to ground truth.

    def answer_correctness(predicted_answer, ground_truth, contexts, llm_evaluator):
        """Weighted combination of factual accuracy and semantic similarity"""
        # Factual accuracy component (calculate_faithfulness needs the evaluator LLM)
        factual_score = calculate_faithfulness(predicted_answer, contexts, llm_evaluator)

        # Semantic similarity component
        semantic_score = calculate_semantic_similarity(predicted_answer, ground_truth)

        # Weighted combination (adjust weights based on use case)
        return 0.7 * factual_score + 0.3 * semantic_score

7. Response Completeness

Measures whether the answer fully addresses all aspects of a complex query.

    def response_completeness(query, answer, llm_evaluator):
        """Check if answer addresses all query components"""
        analysis_prompt = f"""
        Query: {query}
        Answer: {answer}
        Does the answer fully address all aspects of the query?
        Consider:
        1. Are all question components answered?
        2. Is the level of detail appropriate?
        3. Are any important aspects missing?
        Rate completeness from 1-5:
        """
        response = llm_evaluator.generate(analysis_prompt)
        score = extract_score(response)
        return score / 5.0

8. Citation Accuracy

For RAG systems that provide source citations, measure citation quality.

    def citation_accuracy(answer, citations, contexts):
        """Verify that citations actually support the claims they're attached to"""
        accurate_citations = 0
        total_citations = len(citations)

        for citation in citations:
            # Extract the claim associated with this citation
            claim = extract_claim_for_citation(answer, citation)

            # Check if the cited source supports the claim
            cited_context = contexts[citation.source_index]
            if claim_supported_by_context(claim, cited_context):
                accurate_citations += 1

        return accurate_citations / total_citations if total_citations > 0 else 1.0

Production Evaluation Pipeline

Automated Evaluation Framework

    import numpy as np

    class ProductionRAGEvaluator:
        # initialize_evaluator_llm and the calculate_* methods are assumed to
        # wrap the metric functions shown earlier in this guide.
        def __init__(self, config):
            self.evaluator_llm = initialize_evaluator_llm(config['evaluator_model'])
            self.metrics = [
                'context_relevance',
                'faithfulness',
                'answer_relevance',
                'context_precision',
                'answer_correctness'  # only present when ground truth is supplied
            ]

        def evaluate_query(self, query, contexts, answer, ground_truth=None):
            """Evaluate a single query-answer pair"""
            results = {}

            # Context-based metrics
            results['context_relevance'] = self.calculate_context_relevance(query, contexts)
            results['context_precision'] = self.calculate_context_precision(query, contexts, ground_truth)

            # Answer-based metrics
            results['faithfulness'] = self.calculate_faithfulness(answer, contexts)
            results['answer_relevance'] = self.calculate_answer_relevance(query, answer)

            # Optional ground truth metrics
            if ground_truth:
                results['answer_correctness'] = self.calculate_answer_correctness(answer, ground_truth, contexts)

            return results

        def batch_evaluate(self, evaluation_dataset):
            """Evaluate multiple queries in batch"""
            all_results = []
            for item in evaluation_dataset:
                try:
                    result = self.evaluate_query(
                        item['query'],
                        item['contexts'],
                        item['answer'],
                        item.get('ground_truth')
                    )
                    all_results.append(result)
                except Exception as e:
                    # Skip failed items so one bad row does not abort the batch
                    print(f"Evaluation failed for query: {item['query'][:50]}... Error: {e}")
                    continue
            return self.aggregate_results(all_results)

        def aggregate_results(self, results):
            """Calculate aggregate metrics across all queries"""
            aggregated = {}
            for metric in self.metrics:
                scores = [r[metric] for r in results if metric in r]
                if scores:
                    aggregated[metric] = {
                        'mean': np.mean(scores),
                        'median': np.median(scores),
                        'std': np.std(scores),
                        'min': np.min(scores),
                        'max': np.max(scores)
                    }
            return aggregated

Continuous Evaluation in Production

    import logging
    import queue
    import random
    import time

    logger = logging.getLogger(__name__)

    class ContinuousEvaluator:
        # is_high_stakes_query and store_evaluation_result are assumed to be
        # implemented for your application.
        def __init__(self, config, sample_rate=0.1):
            self.sample_rate = sample_rate
            self.evaluator = ProductionRAGEvaluator(config)
            self.evaluation_queue = queue.Queue()

        def should_evaluate(self, query):
            """Determine if this query should be evaluated"""
            # Always evaluate high-stakes queries, before any sampling
            if self.is_high_stakes_query(query):
                return True
            # Sample the remaining queries at the configured rate
            return random.random() < self.sample_rate

        def evaluate_production_query(self, query, contexts, answer):
            """Evaluate a production query asynchronously"""
            if not self.should_evaluate(query):
                return

            # Run evaluation in background
            evaluation_task = {
                'timestamp': time.time(),
                'query': query,
                'contexts': contexts,
                'answer': answer
            }

            # Queue for async processing
            self.evaluation_queue.put(evaluation_task)

        def process_evaluation_queue(self):
            """Background process to handle evaluations"""
            while True:
                try:
                    task = self.evaluation_queue.get(timeout=10)
                    result = self.evaluator.evaluate_query(
                        task['query'],
                        task['contexts'],
                        task['answer']
                    )
                    # Store results for analysis
                    self.store_evaluation_result(task, result)
                except queue.Empty:
                    continue
                except Exception as e:
                    logger.error(f"Evaluation processing failed: {e}")

Human Evaluation Strategies

Representative Test Set Creation

    import random

    def create_evaluation_dataset(production_queries, size=200):
        """Create a balanced evaluation dataset"""
        # Categorize queries by complexity (the is_* helpers are assumed to exist)
        simple_queries = [q for q in production_queries if is_simple_query(q)]
        medium_queries = [q for q in production_queries if is_medium_complexity(q)]
        complex_queries = [q for q in production_queries if is_complex_query(q)]

        # Sample an equal share from each bucket; min() avoids the ValueError
        # random.sample raises when a bucket is smaller than the share
        per_bucket = size // 3
        dataset = []
        for bucket in (simple_queries, medium_queries, complex_queries):
            dataset.extend(random.sample(bucket, min(per_bucket, len(bucket))))

        # Add edge cases (note: truncating to `size` below can drop some of
        # them when every bucket is full)
        dataset.extend(get_edge_case_queries())

        return dataset[:size]

Human Evaluation Interface

    class HumanEvaluationInterface:
        def create_evaluation_task(self, query, contexts, answer):
            """Create standardized evaluation task for human reviewers"""
            return {
                'query': query,
                'retrieved_contexts': contexts,
                'generated_answer': answer,
                'evaluation_criteria': {
                    'accuracy': 'Is the answer factually correct?',
                    'completeness': 'Does it fully answer the question?',
                    'clarity': 'Is the response clear and well-structured?',
                    'citations': 'Are sources properly referenced?'
                },
                'scoring_guide': {
                    '5': 'Excellent - Exceeds expectations',
                    '4': 'Good - Meets expectations',
                    '3': 'Average - Acceptable with minor issues',
                    '2': 'Poor - Has significant problems',
                    '1': 'Unacceptable - Major errors or irrelevant'
                }
            }

        def calculate_inter_annotator_agreement(self, annotations):
            """Measure consistency between human evaluators"""
            # Calculate Cohen's kappa or similar metric
            pass

Evaluation Best Practices

1. Baseline Establishment

    def establish_baselines(evaluation_dataset):
        """Create baseline metrics for comparison"""
        baselines = {}

        # Random baseline: random selection from knowledge base
        baselines['random'] = evaluate_random_retrieval(evaluation_dataset)

        # BM25 baseline: keyword-based retrieval
        baselines['bm25'] = evaluate_bm25_system(evaluation_dataset)

        # Embedding-only baseline: semantic similarity without reranking
        baselines['embedding_only'] = evaluate_embedding_system(evaluation_dataset)

        return baselines

2. Regression Testing

    class RegressionTestSuite:
        # evaluate_result is assumed to score a system response against the
        # expected data stored with each critical query.
        def __init__(self, critical_queries):
            self.critical_queries = critical_queries
            self.baseline_scores = None

        def set_baseline(self, rag_system):
            """Establish baseline performance"""
            self.baseline_scores = {}
            for query_id, query_data in self.critical_queries.items():
                result = rag_system.query(query_data['query'])
                score = self.evaluate_result(result, query_data)
                self.baseline_scores[query_id] = score

        def run_regression_test(self, updated_rag_system, threshold=0.05):
            """Check for performance regressions"""
            if self.baseline_scores is None:
                raise RuntimeError("Call set_baseline() before run_regression_test()")

            regressions = []
            for query_id, query_data in self.critical_queries.items():
                result = updated_rag_system.query(query_data['query'])
                new_score = self.evaluate_result(result, query_data)
                baseline_score = self.baseline_scores[query_id]

                # Flag only drops larger than the tolerance threshold
                if new_score < baseline_score - threshold:
                    regressions.append({
                        'query_id': query_id,
                        'baseline_score': baseline_score,
                        'new_score': new_score,
                        'degradation': baseline_score - new_score
                    })
            return regressions

3. A/B Testing Framework

    import random

    import numpy as np
    from scipy import stats

    class RAGABTestFramework:
        # evaluate_response and collect_user_feedback are assumed to be
        # implemented for your application; evaluate_response must return a
        # dict that includes an 'overall_score' key.
        def __init__(self, control_system, test_system):
            self.control = control_system
            self.test = test_system
            self.results = {'control': [], 'test': []}

        def run_ab_test(self, test_queries, user_feedback=False):
            """Compare two RAG systems"""
            for query in test_queries:
                # Randomly assign to control or test
                system = random.choice(['control', 'test'])
                rag_system = self.control if system == 'control' else self.test

                # Generate response
                result = rag_system.query(query)

                # Evaluate result
                evaluation = self.evaluate_response(query, result)

                # Collect user feedback if enabled
                if user_feedback:
                    evaluation['user_rating'] = self.collect_user_feedback(result)

                self.results[system].append(evaluation)

        def analyze_results(self):
            """Statistical analysis of A/B test results"""
            control_scores = [r['overall_score'] for r in self.results['control']]
            test_scores = [r['overall_score'] for r in self.results['test']]

            # Statistical significance test (two-sample t-test)
            t_stat, p_value = stats.ttest_ind(control_scores, test_scores)

            control_mean = np.mean(control_scores)
            test_mean = np.mean(test_scores)
            return {
                'control_mean': control_mean,
                'test_mean': test_mean,
                # Relative improvement; undefined when the control mean is zero
                'improvement': (test_mean - control_mean) / control_mean if control_mean else None,
                'p_value': p_value,
                'statistically_significant': p_value < 0.05
            }

Domain-Specific Evaluation

Legal Document RAG

    def legal_rag_evaluation(query, answer, contexts, legal_evaluator, legal_database):
        """Specialized evaluation for legal RAG systems"""
        # The verify_*/check_*/evaluate_* helpers are domain-specific
        # placeholders that you would implement yourself.
        metrics = {}

        # Legal accuracy - check citations and precedents
        metrics['citation_accuracy'] = verify_legal_citations(answer, contexts)

        # Jurisdiction compliance
        metrics['jurisdiction_relevance'] = check_jurisdiction(query, contexts)

        # Precedent validity
        metrics['precedent_validity'] = verify_precedents(answer, legal_database)

        # Legal reasoning quality
        metrics['reasoning_quality'] = evaluate_legal_reasoning(answer, legal_evaluator)

        return metrics

Medical RAG Evaluation

    def medical_rag_evaluation(query, answer, contexts, medical_knowledge_base, safety_guidelines):
        """Specialized evaluation for medical RAG systems"""
        # The verify_*/assess_*/check_* helpers are domain-specific
        # placeholders that you would implement yourself.
        metrics = {}

        # Medical accuracy against authoritative sources
        metrics['medical_accuracy'] = verify_medical_facts(answer, medical_knowledge_base)

        # Safety assessment
        metrics['safety_score'] = assess_medical_safety(answer, safety_guidelines)

        # Currency of information
        metrics['information_currency'] = check_medical_currency(contexts)

        # Appropriate disclaimers
        metrics['disclaimer_presence'] = check_medical_disclaimers(answer)

        return metrics

Evaluation Tool Integration

RAGAS Integration

    from ragas import evaluate
    from ragas.metrics import faithfulness, answer_relevancy, context_precision, context_recall
    from datasets import Dataset

    def comprehensive_ragas_evaluation(evaluation_data, evaluator_llm, embeddings_model):
        """Full RAGAS evaluation pipeline"""
        # Convert to RAGAS format. The ground-truth column name and shape have
        # changed between RAGAS versions; this follows the list-per-row
        # 'ground_truths' layout used in the context recall example above.
        dataset = Dataset.from_dict({
            'question': [item['query'] for item in evaluation_data],
            'answer': [item['answer'] for item in evaluation_data],
            'contexts': [item['contexts'] for item in evaluation_data],
            'ground_truths': [[item.get('ground_truth', '')] for item in evaluation_data]
        })

        # Run evaluation with your chosen judge LLM and embedding model
        result = evaluate(
            dataset,
            metrics=[
                faithfulness,
                answer_relevancy,
                context_precision,
                context_recall
            ],
            llm=evaluator_llm,
            embeddings=embeddings_model
        )
        return result

CustomGPT Integration

For teams using CustomGPT’s RAG platform, evaluation can be integrated directly:

    # CustomGPT evaluation integration example.
    # The client calls below are illustrative; check the CustomGPT SDK
    # documentation for the exact class and method names.
    import customgpt_client

    def evaluate_customgpt_responses(test_queries, config):
        """Evaluate CustomGPT RAG responses"""
        client = customgpt_client.Client(api_key="your-key")
        evaluator = ProductionRAGEvaluator(config)
        results = []

        for query in test_queries:
            # Get RAG response from CustomGPT
            response = client.query(
                project_id="your-project",
                query=query
            )

            # Evaluate using standard metrics
            evaluation = evaluator.evaluate_query(
                query=query,
                contexts=response.contexts,
                answer=response.answer
            )
            results.append(evaluation)

        return results

Frequently Asked Questions

Which metrics should I prioritize for a customer-facing RAG application?

Focus on Faithfulness (prevents hallucinations) and Answer Relevance (ensures responses address user queries). Context metrics are important during development but less critical for user-facing evaluation. Add Response Time as a non-functional metric since user satisfaction drops significantly above 3-5 seconds.

How often should I evaluate my production RAG system?

Implement continuous evaluation on 5-10% of production queries for early issue detection. Run comprehensive evaluations weekly with 200-500 representative queries. Conduct deeper human evaluation monthly or after significant system changes. High-stakes applications may need daily evaluation.

Can I rely entirely on automated metrics, or do I need human evaluation?

Automated metrics provide excellent coverage for development and monitoring, but human evaluation is essential for validation. LLM-as-a-judge approaches achieve 85-90% agreement with human evaluators on most metrics. Use human evaluation to validate automated metrics and catch edge cases that automated systems miss.
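One way to check how well an LLM judge tracks your human reviewers is to compute both raw agreement and Cohen's kappa, which corrects for agreement expected by chance. The following is a minimal sketch: the `cohens_kappa` helper and the ten example labels are illustrative assumptions, not output from a real system.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both raters must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: fraction of items where both raters gave the same label
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    labels = set(counts_a) | set(counts_b)
    expected = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical "is this answer faithful?" verdicts on the same ten responses
human = ["yes", "yes", "no", "yes", "no", "no", "yes", "yes", "no", "yes"]
judge = ["yes", "yes", "no", "no", "no", "no", "yes", "yes", "yes", "yes"]

raw_agreement = sum(h == j for h, j in zip(human, judge)) / len(human)
kappa = cohens_kappa(human, judge)
print(f"raw agreement: {raw_agreement:.2f}, kappa: {kappa:.2f}")
```

Raw agreement can look high simply because one label dominates, so reporting kappa alongside it gives a more conservative picture.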

How do I handle disagreement between different evaluation LLMs?

Use ensemble evaluation with multiple LLMs (e.g., GPT-4, Claude, open-source models) and take the median score. Track which evaluators tend to be more/less strict and adjust thresholds accordingly. For critical decisions, fall back to human evaluation when automated scores diverge significantly (>0.3 difference).
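The median-plus-fallback rule described above can be sketched in a few lines. The judge names and scores here are placeholders, and `ensemble_score` is a hypothetical helper rather than part of any library; the 0.3 spread limit comes from the guidance above.

```python
from statistics import median


def ensemble_score(scores_by_judge, max_spread=0.3):
    """Combine per-judge scores via the median and flag large disagreement."""
    if not scores_by_judge:
        raise ValueError("At least one judge score is required")
    values = list(scores_by_judge.values())
    # Spread: gap between the strictest and the most lenient judge
    spread = max(values) - min(values)
    return {
        "score": median(values),
        "spread": spread,
        # Escalate to human review when the judges diverge by more than max_spread
        "needs_human_review": spread > max_spread,
    }


# Placeholder judge names and scores, for illustration only
agreeing = ensemble_score({"judge_a": 0.9, "judge_b": 0.8, "judge_c": 0.85})
diverging = ensemble_score({"judge_a": 0.9, "judge_b": 0.5, "judge_c": 0.8})
print(agreeing["score"], agreeing["needs_human_review"])
print(diverging["score"], diverging["needs_human_review"])
```

The median is used rather than the mean so that a single unusually strict or lenient judge does not move the combined score.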

What’s a good baseline for each metric?

Production targets: Faithfulness >0.85, Answer Relevance >0.8, Context Precision >0.7. Minimum acceptable: Faithfulness >0.7, Answer Relevance >0.6, Context Precision >0.5. These vary significantly by domain—medical/legal applications need higher faithfulness scores (>0.9).
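These thresholds are easy to encode as a gate in an evaluation report or CI job. The sketch below uses the general-purpose numbers quoted above; the `grade_metrics` helper, the grade labels, and the example scores are assumptions for illustration, and stricter domains would pass their own thresholds.

```python
# Thresholds taken from the targets quoted above (general-purpose values)
PRODUCTION_TARGETS = {"faithfulness": 0.85, "answer_relevance": 0.8, "context_precision": 0.7}
MINIMUM_ACCEPTABLE = {"faithfulness": 0.7, "answer_relevance": 0.6, "context_precision": 0.5}


def grade_metrics(scores, targets=PRODUCTION_TARGETS, minimums=MINIMUM_ACCEPTABLE):
    """Label each metric as meeting the target, acceptable, or below minimum."""
    grades = {}
    for metric, target in targets.items():
        value = scores.get(metric)
        if value is None:
            grades[metric] = "missing"
        elif value > target:
            grades[metric] = "meets target"
        elif value > minimums[metric]:
            grades[metric] = "acceptable"
        else:
            grades[metric] = "below minimum"
    return grades


# Hypothetical aggregate scores from one evaluation run
example_scores = {"faithfulness": 0.91, "answer_relevance": 0.72, "context_precision": 0.45}
grades = grade_metrics(example_scores)
for metric, grade in grades.items():
    print(f"{metric}: {grade}")
```

For a medical or legal deployment, passing a `targets` dict with faithfulness raised to 0.9 would apply the stricter bar mentioned above.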

How do I evaluate RAG systems without ground truth answers?

Use reference-free metrics like Faithfulness (answer grounded in context), Answer Relevance (generated questions match original), and Context Relevance (LLM judges context utility). RAGAS provides implementations of all these metrics. While not perfect, they correlate well with human judgment and enable evaluation at scale.

The key to successful RAG evaluation is combining multiple perspectives: automated metrics for coverage, human evaluation for validation, and continuous monitoring for production reliability. Start with the RAG Triad metrics and expand based on your specific domain requirements and quality thresholds.


Priyansh is a Developer Relations Advocate who loves technology, writes about it, and creates deeply researched content on it.