TL;DR

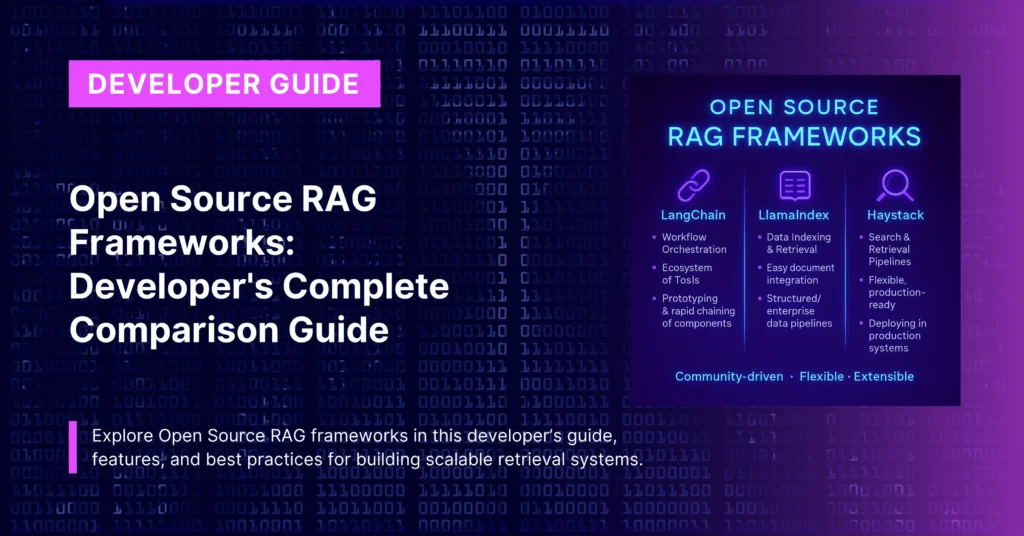

- Open source RAG frameworks like LangChain, LlamaIndex, and Haystack offer different approaches to building RAG systems. LangChain excels at workflow orchestration, LlamaIndex specializes in data indexing, and Haystack provides production-ready components.

- CustomGPT.ai’s free MIT-licensed starter kit combines the best practices from these frameworks with managed infrastructure, eliminating complexity while maintaining flexibility.

The open source ecosystem for RAG development has exploded with dozens of frameworks, libraries, and tools.

Each promises to make building RAG systems easier, but they take different approaches and excel in different areas. Choosing the wrong framework can lead to months of wasted development time and systems that don’t meet your needs.

This comprehensive guide compares the major open source RAG frameworks, explains their strengths and weaknesses, and helps you choose the right tool for your specific requirements.

We’ll also explore how managed solutions and starter kits can accelerate development while maintaining the flexibility of open source approaches.

Why Open Source RAG Frameworks Matter

The Challenge of Building RAG from Scratch

Building a RAG system without frameworks means implementing:

- Document Processing: Parsing different file formats, handling encoding issues, extracting text and metadata

- Embedding Generation: Managing multiple embedding models, handling batch processing, optimizing for cost and performance

- Vector Operations: Implementing similarity search, managing indices, handling updates and deletions

- LLM Integration: Connecting to various language models, managing prompts, handling streaming responses

- Pipeline Orchestration: Coordinating all these components, handling errors, managing state

This represents months of development work before you can even start addressing your specific business requirements.
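To make the first item concrete: even simple fixed-size chunking with overlap has edge cases to get right. This is the step that the LangChain example later in this guide hands to `RecursiveCharacterTextSplitter`. Below is a minimal plain-Python sketch. It assumes character-based sizes and makes no attempt to respect paragraph or sentence boundaries, which the LangChain splitter does try to do. `chunk_text` is a hypothetical helper, not part of any framework.

```python
def chunk_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[str]:
    """Split text into fixed-size character windows that overlap.

    Each window starts (chunk_size - chunk_overlap) characters after the previous
    one, so text near a boundary appears in two consecutive chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    print(chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1))  # ['abcd', 'defg', 'ghij']
```

With `chunk_size=4, chunk_overlap=1`, each chunk repeats the last character of the previous one. Real pipelines also have to decide how to measure size (characters versus tokens) and where it is safe to cut.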

How Frameworks Accelerate Development

Open source RAG frameworks provide:

- Pre-built Components: Ready-to-use modules for common RAG operations

- Integration Libraries: Connectors for popular databases, APIs, and services

- Workflow Orchestration: Tools for chaining components together into complete systems

- Best Practice Implementations: Proven patterns for common RAG challenges

- Community Support: Documentation, tutorials, and community-contributed improvements

Prerequisites for Framework Evaluation

Technical Skills You’ll Need

Python Proficiency: Most RAG frameworks are Python-based and require solid Python skills including:

- Object-oriented programming concepts

- Asynchronous programming (async/await)

- Package management and virtual environments

- Basic understanding of machine learning libraries

API Integration Experience:

- REST API consumption and error handling

- Authentication and authorization patterns

- Rate limiting and retry logic

- JSON/XML data processing
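"Retry logic" for LLM and embedding APIs usually means exponential backoff with jitter. Below is a minimal, framework-agnostic sketch. `TransientError` and `call_with_retry` are hypothetical names. In practice, you would map your HTTP client's timeouts and 429/5xx responses onto the retryable case.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as timeouts or HTTP 429/503."""


def call_with_retry(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying TransientError with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # Ceiling doubles each attempt (0.5s, 1s, 2s, ...) up to max_delay;
            # a random wait below it keeps many clients from retrying in lockstep
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
    raise RuntimeError("unreachable")  # the loop always returns or raises


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky() -> str:
        # Simulates a rate-limited API that fails twice before succeeding
        attempts["n"] += 1
        if attempts["n"] < 3:
            raise TransientError("rate limited")
        return "ok"

    print(call_with_retry(flaky, sleep=lambda s: None), attempts["n"])  # ok 3
```

Passing `sleep` as a parameter keeps the function testable without real waiting.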

Database Knowledge:

- Basic SQL and NoSQL database concepts

- Understanding of indexing and query optimization

- Experience with database connections and connection pooling

Machine Learning Fundamentals:

- Vector embeddings and similarity concepts

- Basic understanding of transformer models

- Familiarity with model serving and inference
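In RAG, "similarity" almost always means cosine similarity between embedding vectors. The brute-force sketch below, in plain Python, shows what a vector store's similarity search computes. The vectors and document IDs are made up. Production stores such as FAISS use optimized, often approximate, indexes rather than scoring every vector in Python.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat it as dissimilar
    return dot / (norm_a * norm_b)


def top_k(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Brute-force nearest neighbours: score every document, keep the best k."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings"; real models produce hundreds or thousands of dimensions
    docs = {
        "pricing": [0.9, 0.1, 0.0],
        "refunds": [0.7, 0.3, 0.0],
        "api-keys": [0.0, 0.2, 0.9],
    }
    for doc_id, score in top_k([1.0, 0.0, 0.0], docs, k=2):
        print(doc_id, round(score, 3))  # "pricing" ranks first, then "refunds"
```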

Framework Evaluation Criteria

- Ease of Use: How quickly can you get a basic RAG system working?

- Flexibility: Can you customize the framework for specific requirements?

- Performance: How well does it handle production loads and large document collections?

- Community Support: Is there good documentation, active development, and community resources?

- Production Readiness: Does it include monitoring, error handling, and scalability features?

- Ecosystem Integration: How well does it work with other tools and services you need?

Major Open Source RAG Frameworks

LangChain: The Swiss Army Knife

What It Is: LangChain is a framework for building applications with large language models, with extensive RAG capabilities built in.

Core Strengths:

Workflow Orchestration: Excellent at chaining together multiple steps in complex RAG workflows. You can easily create pipelines that retrieve documents, process them, generate responses, and apply post-processing.
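At its core, this kind of orchestration is function composition over shared state: each step reads what earlier steps produced and adds its own output. The sketch below shows the pattern in plain Python. It is not LangChain's API, since LangChain provides its own chain and runnable abstractions for this. `chain`, `retrieve`, `generate`, and the other steps are hypothetical stand-ins, with retrieval and the LLM call stubbed out.

```python
from functools import reduce
from typing import Any, Callable

State = dict[str, Any]
Step = Callable[[State], State]


def chain(*steps: Step) -> Step:
    """Compose steps left to right; each takes the shared state and returns an updated copy."""
    def run(state: State) -> State:
        return reduce(lambda acc, step: step(acc), steps, state)
    return run


def retrieve(state: State) -> State:
    # Toy keyword lookup standing in for a vector-store query
    corpus = {"refund": "Doc A: refund policy", "shipping": "Doc B: shipping times"}
    hits = [doc for key, doc in corpus.items() if key in state["question"].lower()]
    return {**state, "docs": hits}


def build_prompt(state: State) -> State:
    context = "\n".join(state["docs"])
    return {**state, "prompt": f"Context:\n{context}\n\nQuestion: {state['question']}"}


def generate(state: State) -> State:
    # Placeholder for the LLM call; reports how many documents were supplied
    return {**state, "answer": f"Answer based on {len(state['docs'])} document(s)"}


def postprocess(state: State) -> State:
    # Example post-processing step: make sure the answer ends with a period
    answer = state["answer"].rstrip()
    return {**state, "answer": answer if answer.endswith(".") else answer + "."}


rag = chain(retrieve, build_prompt, generate, postprocess)

if __name__ == "__main__":
    print(rag({"question": "What is the refund policy?"})["answer"])
    # prints: Answer based on 1 document(s).
```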

Broad Integration Support: Connects to virtually every popular service – from vector databases like Pinecone and Weaviate to LLM providers like OpenAI and Anthropic.

Agent Capabilities: Strong support for building agents that can use tools, make decisions, and handle complex multi-step reasoning tasks.

Community and Ecosystem: Largest community, most tutorials, and extensive ecosystem of add-ons and extensions.

When LangChain Works Well:

- Building complex, multi-step RAG workflows

- Experimenting with different approaches and techniques

- Integrating with many different services and APIs

- Building conversational agents with RAG capabilities

- Rapid prototyping and experimentation

LangChain Limitations:

Complexity Overhead: The flexibility comes with complexity. Simple tasks often require understanding many concepts and abstractions.

Performance Concerns: The abstraction layers can introduce latency. Optimizing for production often requires working around the framework.

Version Stability: Rapid development means breaking changes are common, making upgrades challenging.

Memory Usage: Can be resource-intensive for large-scale operations.

Best Use Cases for LangChain:

- Research and development projects

- Complex agent-based systems

- Applications requiring integration with many different services

- Teams with strong Python expertise who can handle complexity

LlamaIndex: The Data-Focused Framework

What It Is: LlamaIndex (formerly GPT Index) is specifically designed for building RAG applications with a focus on data ingestion and indexing.

Core Strengths:

Data Ingestion Excellence: Outstanding support for loading and processing various data sources – documents, databases, APIs, and structured data.

Indexing Strategies: Multiple indexing approaches optimized for different use cases – vector indices, tree indices, knowledge graphs, and more.

Query Processing: Sophisticated query engines that can handle complex questions requiring multiple retrieval steps.

Structured Data Support: Better than most frameworks at working with structured data like databases, spreadsheets, and APIs.

When LlamaIndex Excels:

- Building systems with diverse data sources

- Applications requiring sophisticated indexing strategies

- Projects involving structured data and databases

- Use cases where data organization and retrieval are primary concerns

LlamaIndex Limitations:

- Learning Curve: The data-focused approach requires understanding various indexing concepts and strategies.

- Less Workflow Flexibility: More opinionated about how RAG should work, which can be limiting for custom workflows.

- Smaller Ecosystem: Fewer community resources and third-party integrations compared to LangChain.

- Agent Capabilities: Less sophisticated agent and tool-use capabilities compared to other frameworks.

Best Use Cases for LlamaIndex:

- Enterprise knowledge management systems

- Applications with complex data requirements

- Systems that need to work with structured and unstructured data

- Organizations prioritizing data organization and retrieval accuracy

Haystack: The Production-Ready Framework

What It Is: Haystack is designed specifically for building production NLP applications, with strong RAG capabilities and enterprise focus.

Core Strengths:

Production Readiness: Built with production use cases in mind, including monitoring, evaluation, and deployment tools.

Pipeline Architecture: Clean, modular pipeline design that’s easy to understand, test, and maintain.
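Here is what "modular and easy to test" can look like in practice. In the plain-Python sketch below, each component does one job behind a small `run` method that returns named outputs, and the pipeline only wires outputs to inputs. This is not Haystack's API: Haystack 2.x declares components with its `@component` decorator and wires them with `Pipeline.connect`. The class names here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class KeywordRetriever:
    """Toy retriever: returns documents sharing at least one word with the query."""
    documents: list[str]

    def run(self, query: str) -> dict[str, list[str]]:
        words = set(query.lower().split())
        return {"documents": [d for d in self.documents if words & set(d.lower().split())]}


@dataclass
class PromptBuilder:
    """Turns a query plus retrieved documents into a prompt string."""
    template: str = "Context:\n{context}\n\nQuestion: {query}"

    def run(self, query: str, documents: list[str]) -> dict[str, str]:
        return {"prompt": self.template.format(context="\n".join(documents), query=query)}


@dataclass
class RAGPipeline:
    """Only wiring lives here: one component's named output feeds the next one's input."""
    retriever: KeywordRetriever
    prompt_builder: PromptBuilder

    def run(self, query: str) -> dict[str, str]:
        documents = self.retriever.run(query)["documents"]
        return self.prompt_builder.run(query=query, documents=documents)


if __name__ == "__main__":
    # PromptBuilder can be unit-tested alone, with no retriever or LLM involved
    assert PromptBuilder().run("q", ["fixed doc"])["prompt"] == "Context:\nfixed doc\n\nQuestion: q"

    pipeline = RAGPipeline(KeywordRetriever(["Haystack pipelines", "Unrelated text"]), PromptBuilder())
    print(pipeline.run("haystack pipelines")["prompt"])
```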

Evaluation Tools: Built-in capabilities for evaluating and improving RAG system performance.
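Evaluation tooling of this kind commonly reports retrieval metrics such as recall@k and mean reciprocal rank (MRR). Recall@k measures how many of the relevant documents made the top k. MRR measures how high the first relevant document ranks. The from-scratch sketch below is not Haystack's evaluation API, and its evaluation set is made up.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


def mean_reciprocal_rank(runs: list[tuple[list[str], set[str]]]) -> float:
    """Average over queries of 1/rank of the first relevant result (0 if none is found)."""
    total = 0.0
    for retrieved, relevant in runs:
        for rank, doc_id in enumerate(retrieved, start=1):
            if doc_id in relevant:
                total += 1.0 / rank
                break
    return total / len(runs) if runs else 0.0


if __name__ == "__main__":
    # Hypothetical evaluation set: (ranked retrieval results, ground-truth relevant IDs)
    runs = [
        (["d3", "d1", "d7"], {"d1"}),        # first relevant at rank 2 -> 0.5
        (["d2", "d5", "d9"], {"d2", "d9"}),  # first relevant at rank 1 -> 1.0
        (["d4", "d6", "d8"], {"d0"}),        # no relevant result -> 0.0
    ]
    print(round(mean_reciprocal_rank(runs), 3))                # 0.5
    print(recall_at_k(["d2", "d5", "d9"], {"d2", "d9"}, k=2))  # 0.5
```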

Enterprise Features: Security, monitoring, and scalability features designed for business use.

When Haystack Shines:

- Building production systems that need reliability and monitoring

- Organizations with enterprise requirements

- Teams that prioritize code maintainability and testing

- Applications requiring built-in evaluation and improvement tools

Haystack Limitations:

- Learning Investment: Requires understanding the pipeline architecture and component model.

- Less Experimentation Support: More structured approach can be limiting for research and experimentation.

- Smaller Community: Less community content and fewer tutorials compared to LangChain.

- Integration Complexity: Adding custom components requires more framework knowledge.

Best Use Cases for Haystack:

- Production enterprise applications

- Systems requiring comprehensive monitoring and evaluation

- Organizations with strict reliability and maintainability requirements

- Teams that prefer structured, testable architectures

Emerging Frameworks Worth Watching

DSPy: Stanford’s framework for programming language models with automatic optimization. Interesting for teams that want to optimize prompts and workflows automatically.

Semantic Kernel: Microsoft’s SDK with strong enterprise integration capabilities and multi-language support.

Chainlit: Focused on building conversational RAG interfaces with excellent UI components.

Framework Comparison Matrix

Development Speed and Ease of Use

- Fastest to Start: LangChain (extensive tutorials, community examples)

- Best Documentation: Haystack (comprehensive, well-organized)

- Steepest Learning Curve: LlamaIndex (requires understanding indexing concepts)

- Most Beginner-Friendly: LangChain (lots of examples and tutorials)

Performance and Scalability

- Best for Large-Scale Data: LlamaIndex (optimized indexing strategies)

- Most Production-Ready: Haystack (built-in monitoring and optimization)

- Most Flexible Performance Tuning: Custom implementations with frameworks as components

- Best for Real-Time Applications: Depends on specific implementation, but Haystack has advantages for production optimization

Integration and Ecosystem

- Most Integrations: LangChain (the broadest set of connectors)

- Best Database Support: LlamaIndex (designed for diverse data sources)

- Best Enterprise Integrations: Haystack (enterprise-focused features)

- Most Active Development: LangChain (rapid feature development)

Practical Framework Selection Guide

Decision Tree for Framework Choice

Choose LangChain if:

- You’re building complex, multi-step workflows

- You need to integrate with many different services

- You’re comfortable with rapid framework changes

- You want maximum flexibility and experimentation capability

- You have experienced Python developers

Choose LlamaIndex if:

- Your primary challenge is organizing and indexing diverse data

- You work with both structured and unstructured data

- Data retrieval accuracy is your top priority

- You prefer frameworks with clear, data-focused abstractions

- You need sophisticated query processing capabilities

Choose Haystack if:

- You’re building production systems for enterprise use

- Reliability, monitoring, and evaluation are priorities

- You prefer structured, maintainable code architectures

- You need built-in tools for system improvement

- You have requirements for security and compliance

Consider Managed Solutions if:

- You want to focus on business logic rather than infrastructure

- You need enterprise-grade reliability and security

- You have limited ML/AI expertise on your team

- You want faster time-to-market

- You prefer predictable costs and support

Hybrid Approaches

Many successful production systems combine approaches:

Framework + Managed Services: Use open source frameworks for custom logic while leveraging managed services for complex operations like embedding generation and vector search.

Multi-Framework Architecture: Use different frameworks for different components – for example, LlamaIndex for data processing and LangChain for agent workflows.

Framework + Custom Components: Start with a framework foundation and build custom components for specific requirements.
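Whichever hybrid you choose, it stays manageable if application code depends on a narrow interface rather than on a specific framework or vendor SDK. Below is a minimal sketch using `typing.Protocol`. `Retriever`, `LocalRetriever`, and `ManagedRetriever` are hypothetical names. The managed adapter takes a plain function where a real vendor SDK or HTTP call would go.

```python
from typing import Callable, Protocol


class Retriever(Protocol):
    """The only retrieval interface the application code depends on."""

    def search(self, query: str, k: int) -> list[str]: ...


class LocalRetriever:
    """Stand-in for a framework-based, self-hosted component (toy keyword scoring)."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def search(self, query: str, k: int) -> list[str]:
        terms = query.lower().split()
        # Score = number of query terms found in the document; ties keep original order
        ranked = sorted(self.docs, key=lambda d: sum(t in d.lower() for t in terms), reverse=True)
        return ranked[:k]


class ManagedRetriever:
    """Hypothetical adapter around a managed service; `fetch` would wrap its SDK or HTTP call."""

    def __init__(self, fetch: Callable[[str], list[str]]) -> None:
        self.fetch = fetch

    def search(self, query: str, k: int) -> list[str]:
        return self.fetch(query)[:k]


def build_context(question: str, retriever: Retriever) -> str:
    # Business logic sees only the Protocol, so either backend can be plugged in
    return "\n".join(retriever.search(question, k=2))


if __name__ == "__main__":
    local = LocalRetriever(["refund policy", "shipping times", "refund timeline"])
    managed = ManagedRetriever(lambda q: ["managed result 1", "managed result 2", "managed result 3"])
    print(build_context("refund", local))
    print(build_context("refund", managed))
```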

The CustomGPT.ai Alternative

Managed Solution Benefits

While open source frameworks provide flexibility, they also require significant development and operational overhead. CustomGPT.ai provides enterprise-grade RAG capabilities without the complexity:

No Infrastructure Management: Focus on your application instead of managing vector databases, embedding models, and scaling infrastructure.

Production-Ready from Day One: Enterprise security, reliability, and monitoring built-in.

Automatic Optimization: Benefit from continuous improvements without framework upgrades or migration work.

Faster Development: Get working RAG systems in hours instead of weeks or months.

Predictable Costs: Usage-based pricing instead of unpredictable infrastructure and development costs.

The MIT-Licensed Starter Kit Approach

The CustomGPT starter kit combines the best of both worlds:

Open Source Flexibility: Complete source code access with MIT license – use, modify, and distribute freely.

Best Practice Implementation: Incorporates lessons learned from all major frameworks without their complexity.

Production-Ready Templates: Includes authentication, monitoring, error handling, and deployment configurations.

Managed Backend Integration: Works seamlessly with CustomGPT.ai’s managed infrastructure while allowing complete frontend customization.

No Vendor Lock-In: MIT license means you can modify and deploy however you want.

This approach gives you the flexibility of open source development with the reliability of managed infrastructure.

Implementation Examples and Patterns

Starting with Open Source Frameworks

LangChain Quick Start Example:

```python
# Assumes LangChain 0.2/0.3-era packages: langchain, langchain-community,
# langchain-openai, langchain-text-splitters, faiss-cpu and unstructured
# (DirectoryLoader's default loader), plus OPENAI_API_KEY set in the environment.
from langchain_community.document_loaders import DirectoryLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langchain_openai import OpenAIEmbeddings, OpenAI
from langchain_community.vectorstores import FAISS
from langchain.chains import RetrievalQA

# Load and split documents into overlapping ~1,000-character chunks
loader = DirectoryLoader("./documents")
documents = loader.load()
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
texts = text_splitter.split_documents(documents)

# Embed the chunks and build an in-memory FAISS vector store
embeddings = OpenAIEmbeddings()
vectorstore = FAISS.from_documents(texts, embeddings)

# Set up a QA chain; "stuff" inserts all retrieved chunks into a single prompt
llm = OpenAI()
qa_chain = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",
    retriever=vectorstore.as_retriever(),
)

# Ask a question; the answer is returned under the "result" key
response = qa_chain.invoke({"query": "What is the main topic of these documents?"})
print(response["result"])
```

This simple example demonstrates LangChain’s approach but doesn’t include production concerns like error handling, monitoring, authentication, or scaling.

Production Considerations

Error Handling: Production systems need comprehensive error handling for document processing failures, API timeouts, and model failures.

Monitoring: Track performance metrics, accuracy, costs, and user satisfaction.

Security: Implement proper authentication, authorization, and data protection.

Scaling: Handle concurrent users, large document collections, and varying loads.

Cost Management: Monitor and optimize costs for embeddings, vector storage, and language model usage.
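Much of the error-handling and monitoring work above comes down to wrapping each external call. The sketch below records call counts, failures, and latency for one dependency, and returns a fallback message instead of crashing. `CallStats` and `monitored` are hypothetical helpers. A real system would catch narrower exception types and export these numbers to a metrics backend rather than keep them in memory.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CallStats:
    """Running counters for a single dependency (e.g. the LLM or the vector store)."""
    calls: int = 0
    failures: int = 0
    latencies_ms: list[float] = field(default_factory=list)


def monitored(fn: Callable[[str], str], stats: CallStats, fallback: str) -> Callable[[str], str]:
    """Wrap fn so every call records latency and failures, returning a fallback on error."""
    def wrapper(question: str) -> str:
        stats.calls += 1
        start = time.perf_counter()
        try:
            return fn(question)
        except Exception:  # broad on purpose for the sketch; narrow this in production
            stats.failures += 1
            return fallback
        finally:
            # Runs on success and failure alike, so every call is timed
            stats.latencies_ms.append((time.perf_counter() - start) * 1000)
    return wrapper


if __name__ == "__main__":
    stats = CallStats()

    def flaky_llm(question: str) -> str:
        # Hypothetical model call that times out on long questions
        if len(question) > 20:
            raise TimeoutError("model timed out")
        return f"answer to {question!r}"

    ask = monitored(flaky_llm, stats, fallback="Sorry, please try again.")
    print(ask("short question"))
    print(ask("a much longer question that times out"))
    print(stats.calls, stats.failures)  # 2 1
```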

Managed Solution Alternative

Using CustomGPT.ai with the starter kit eliminates most of this complexity:

```javascript
// From the starter kit: handles production concerns automatically.
// Assumes this runs inside an async Express-style route handler where `req` and `res` are in scope.
const ragClient = new CustomGPTClient({
  apiKey: process.env.CUSTOMGPT_API_KEY,
  projectId: 'your-project-id'
});

// Production-ready with automatic error handling, monitoring, and scaling
const response = await ragClient.query({
  question: "What is the main topic of these documents?",
  context: {
    userId: req.user.id,
    source: 'web-interface'
  }
});

res.json({
  answer: response.content,
  sources: response.sources,
  confidence: response.confidence
});
```

This approach provides production reliability while maintaining development simplicity.

Migration and Evolution Strategies

Framework Migration Patterns

Start Simple, Add Complexity: Begin with managed solutions or simple frameworks, then add complexity as requirements become clear.

Component-by-Component Migration: Replace framework components gradually rather than all at once.

Parallel Implementation: Run old and new systems in parallel during transitions to ensure reliability.

Data Pipeline Separation: Separate data processing from serving layers to enable independent evolution.
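One common way to apply the parallel-implementation pattern is a "shadow" comparison. The old system keeps serving users while the new one runs on the same queries, and you measure how closely their results agree. Below is a minimal sketch using Jaccard overlap of the top-k results. The indexes and document IDs are hypothetical, and a real migration would usually also compare answer quality, not just retrieved IDs.

```python
from typing import Callable

Search = Callable[[str], list[str]]


def shadow_compare(queries: list[str], old: Search, new: Search, k: int = 5) -> dict[str, float]:
    """Score agreement between two retrieval systems as top-k Jaccard overlap per query.

    Users keep receiving results from `old`; `new` runs on the same queries only
    so its behaviour can be measured before any traffic is switched over.
    """
    report: dict[str, float] = {}
    for q in queries:
        a, b = set(old(q)[:k]), set(new(q)[:k])
        union = a | b
        # Two empty result sets count as full agreement
        report[q] = len(a & b) / len(union) if union else 1.0
    return report


if __name__ == "__main__":
    # Hypothetical ranked results from the current and the candidate system
    old_index = {"refunds": ["d1", "d2", "d3"], "shipping": ["d4", "d5"]}
    new_index = {"refunds": ["d1", "d3", "d9"], "shipping": ["d4", "d5"]}
    report = shadow_compare(
        ["refunds", "shipping"],
        old=lambda q: old_index.get(q, []),
        new=lambda q: new_index.get(q, []),
        k=3,
    )
    print(report)  # {'refunds': 0.5, 'shipping': 1.0}
    # Queries below a chosen threshold are the ones to inspect before cutting over
    print([q for q, score in report.items() if score < 0.8])  # ['refunds']
```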

Long-Term Sustainability

Community Health: Choose frameworks with active, sustainable development communities.

Commercial Support: Consider frameworks with commercial support options for enterprise use.

Migration Flexibility: Avoid frameworks that make it difficult to extract your data and logic.

Standards Compliance: Prefer frameworks that follow industry standards and best practices.

Cost Analysis: Open Source vs Managed

Open Source Framework Costs

Development Time: 2-6 months for basic RAG system, 6-12 months for production-ready system.

Infrastructure Costs: Vector databases, GPU instances, storage, networking.

Operational Overhead: Monitoring, maintenance, updates, security management.

Expertise Requirements: Senior developers with ML/AI experience, DevOps engineers.

Ongoing Maintenance: Framework updates, security patches, performance optimization.

Managed Solution Costs

Development Time: Days to weeks for basic system, weeks to months for customized solution.

Service Costs: Usage-based pricing starting at $99/month for CustomGPT.ai.

Reduced Infrastructure: No need for specialized ML infrastructure or operations expertise.

Faster Time-to-Value: Start generating business value immediately rather than after months of development.

Predictable Scaling: Costs scale with usage rather than requiring upfront infrastructure investment.

Total Cost of Ownership Comparison

For most organizations, managed solutions provide better TCO when you factor in:

- Developer time and opportunity cost

- Infrastructure and operational overhead

- Risk of project delays and technical debt

- Ongoing maintenance and security requirements

Making the Right Choice for Your Team

Team Capability Assessment

Strong ML/AI Team: Open source frameworks give you maximum control and customization.

General Development Team: Managed solutions or starter kits provide better success probability.

Limited Resources: Start with managed solutions and consider custom development later.

Enterprise Requirements: Evaluate both approaches based on specific compliance and security needs.

Project Requirements Analysis

Unique Requirements: Complex custom logic may require framework flexibility.

Standard Use Cases: Managed solutions often provide faster, more reliable results.

Integration Complexity: Consider which approach better handles your specific integration needs.

Performance Requirements: Evaluate whether custom optimization is necessary or if managed performance is sufficient.

Risk Assessment

Technical Risk: Open source frameworks require more technical expertise and carry implementation risk.

Vendor Risk: Managed solutions involve vendor dependency but often provide better reliability.

Timeline Risk: Custom development typically takes longer and is harder to estimate accurately.

Maintenance Risk: Open source requires ongoing maintenance expertise; managed solutions handle this automatically.

FAQ

Should I use an open source framework or a managed solution like CustomGPT.ai?

It depends on your team’s expertise, timeline, and requirements. Managed solutions are often better for standard use cases and teams without extensive ML expertise. Open source frameworks provide more control for teams with specific requirements and strong technical capabilities.

Can I switch between frameworks later if my needs change?

Yes, but it requires significant effort. Design your system with migration in mind by separating data processing from serving layers and avoiding framework-specific features in your core business logic.

Which framework has the best performance?

Performance depends more on your implementation choices than the framework. All major frameworks can achieve good performance with proper optimization. Managed solutions like CustomGPT.ai often provide better out-of-the-box performance.

How do I evaluate framework quality and community health?

Look at GitHub activity, documentation quality, response times for issues, and the diversity of contributors. Check if there’s commercial backing or clear sustainability plans.

What’s the learning curve like for each framework?

LangChain has the most tutorials but also the most complexity. LlamaIndex requires understanding indexing concepts. Haystack has excellent documentation but is more structured. Managed solutions have the shortest learning curve.

Can I use multiple frameworks together?

Yes, many production systems use different frameworks for different components. However, this increases complexity and maintenance overhead.

How do I handle framework updates and breaking changes?

Pin specific versions in production, test updates thoroughly in staging environments, and have rollback plans. Consider managed solutions if you want to avoid this complexity.

Ready to choose your RAG framework? Start with CustomGPT.ai for managed simplicity, or explore the free MIT-licensed starter kit that combines the best of open source flexibility with production reliability.

For more RAG API related information:

- CustomGPT.ai’s open-source UI starter kit (custom chat screens, embeddable chat window and floating chatbot on website) with 9 social AI integration bots and its related setup tutorials.

- Find our API sample usage code snippets here.

- Our RAG API’s Postman hosted collection – test the APIs on postman with just 1 click.

- Our Developer API documentation.

- API explainer videos on YouTube and a dev focused playlist.

- Join our bi-weekly developer office hours and our past recordings of the Dev Office Hours.

P.S. Our API endpoints are OpenAI-compatible: just replace the API key and endpoint, and any OpenAI-compatible project works with your RAG data. Find more here.

Want to build something with our Hosted MCPs? Check out the docs.

Priyansh is a Developer Relations Advocate who loves technology, writes about it, and creates deeply researched content on it.