AI compliance for agencies has become a defining challenge as artificial intelligence adoption accelerates faster than regulatory clarity.

Agencies navigating this shift must balance innovation with responsibility to protect clients, data, and long-term credibility.

For agencies and consultants, compliance is no longer a backend concern but a core part of strategic advisory services.

Understanding the AI compliance landscape empowers you to reduce risk, guide clients with confidence, and position responsible AI use as a measurable business advantage.

Understanding the Legal and Ethical Foundations of AI Use

As artificial intelligence becomes embedded in everyday business operations, agencies and consultants must understand the legal and ethical frameworks shaping its use.

These foundations help ensure AI systems are deployed responsibly while protecting clients, end users, and brand reputation. This section outlines the core legal and ethical principles guiding AI adoption, setting the stage for compliant, transparent, and trustworthy AI practices.

Mastering these basics is essential for anyone advising clients on AI-driven strategies.

Regulatory Landscape Affecting AI

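AI regulations are evolving rapidly, with governments focusing on accountability, risk management, and user protection. Agencies must track both data privacy laws (such as the EU's General Data Protection Regulation, or GDPR) and AI-specific rules (such as the EU AI Act) in every region where they and their clients operate, so that compliance gaps are caught before they become violations.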

Key regulatory considerations agencies should monitor include:

- Data protection laws governing collection, storage, and processing

- Sector-specific rules impacting healthcare, finance, and marketing

- Emerging AI governance frameworks and enforcement trends

Keeping regulatory awareness up to date allows agencies to proactively guide clients rather than react to violations after the fact.

Ethical AI Principles Agencies Must Follow

Beyond legal requirements, ethical AI focuses on fairness, accountability, and human oversight. These principles help agencies align AI solutions with societal expectations and client values.

Core ethical AI principles to apply in practice include:

- Ensuring AI systems do not discriminate or reinforce bias

- Maintaining human review in high-impact AI decisions

- Designing AI with user well-being and transparency in mind

Embedding ethical AI principles early reduces reputational risk and strengthens long-term trust with clients.

Data Privacy and Consent Responsibilities

AI systems rely heavily on data, making privacy and consent central to compliance. Agencies must ensure data usage aligns with user expectations and legal consent standards.

Essential data privacy responsibilities include:

- Collecting only necessary data for defined AI use cases

- Securing explicit consent where required by regulation

- Implementing safeguards for data storage and access

Strong data privacy practices not only reduce legal exposure but also enhance credibility in AI-powered offerings.

Transparency and Accountability in AI Systems

Transparency is critical for explaining how AI systems make decisions, especially in client-facing or high-risk applications. Agencies should be prepared to document and justify AI behavior clearly.

Best practices for AI transparency and accountability include:

- Clearly communicating AI involvement to users and clients

- Documenting data sources, training methods, and limitations

- Establishing accountability for AI outcomes and errors

Transparent AI systems are easier to defend, easier to trust, and far more sustainable for long-term agency growth.

Managing Data Privacy and Security in AI-Driven Workflows

Data privacy is one of the most critical risk areas when deploying AI, especially for agencies handling client and customer information. Missteps in data handling can quickly lead to legal penalties, loss of trust, and damaged client relationships.

For consultants and agencies, strong data privacy in AI systems is not just about compliance—it’s about building confidence that AI solutions are safe, controlled, and aligned with regulatory expectations.

This section focuses on how to manage data responsibly throughout the AI lifecycle.

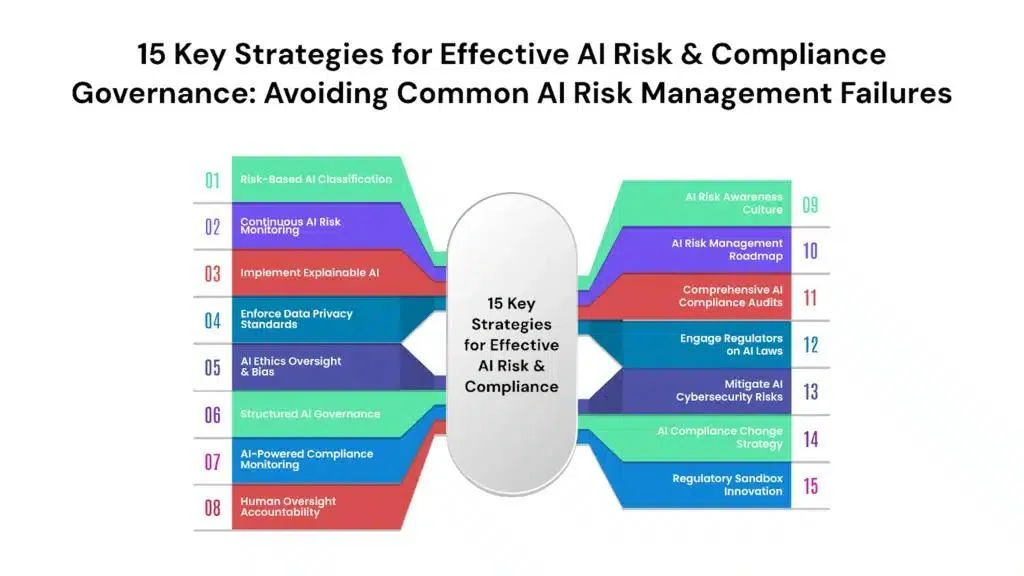

Image source: v-comply.com

Identifying High-Risk Data in AI Projects

Not all data carries the same level of risk, and agencies must learn to classify data before using it in AI systems. Personal, sensitive, or proprietary data requires heightened protection and stricter controls.

High-risk data categories agencies should flag early include:

- Personally identifiable information (PII) and customer records

- Financial, health, or location-based data

- Client-owned or confidential business data

Recognizing high-risk data upfront allows agencies to design AI workflows that minimize exposure and ensure compliance.
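As a rough illustration of flagging high-risk data before it enters an AI workflow, the Python sketch below screens free-text records for two common kinds of PII. The pattern set, `flag_pii`, and `classify_record` are hypothetical examples rather than a production detector: regular expressions catch only well-formed email addresses and phone numbers and will miss names, addresses, health details, and much else.

```python
import re

# Illustrative patterns only (assumption): real PII detection needs much broader
# coverage, such as names, street addresses, and national ID numbers.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def flag_pii(text: str) -> set:
    """Return the names of the PII categories whose pattern matches the text."""
    return {name for name, pattern in PII_PATTERNS.items() if pattern.search(text)}


def classify_record(text: str) -> str:
    """Label a record 'high-risk' if any PII pattern matches, else 'standard'."""
    return "high-risk" if flag_pii(text) else "standard"


print(classify_record("Quarterly revenue grew in Q3"))
print(classify_record("Reach me at +44 20 7946 0958"))
```

Records labeled `high-risk` can then be routed to stricter storage, access, and consent checks before any AI processing.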

Consent, Data Collection, and Usage Limits

AI compliance depends heavily on how data is collected and whether users have given proper consent. Agencies must ensure AI tools only use data for clearly defined and approved purposes.

Best practices for consent-driven data usage include:

- Clearly documenting how and why data will be used by AI

- Avoiding secondary data use beyond the original consent scope

- Regularly reviewing consent policies as AI use cases evolve

Clear consent and usage boundaries reduce regulatory risk while reinforcing ethical AI standards.
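One way to make usage limits enforceable is to store the purposes each person agreed to and check every AI use against that list before processing. The sketch below assumes a simple in-memory `ConsentRecord` with hypothetical field names; in practice the record would come from a consent-management system, and depending on jurisdiction, consent is only one of several possible legal bases for processing.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Hypothetical record of the purposes a user consented to."""
    user_id: str
    purposes: set = field(default_factory=set)
    withdrawn: bool = False


def is_use_permitted(record: ConsentRecord, purpose: str) -> bool:
    """Permit processing only for a purpose inside the original consent scope."""
    return not record.withdrawn and purpose in record.purposes


consent = ConsentRecord("user-42", {"support_chatbot"})
print(is_use_permitted(consent, "support_chatbot"))  # True
print(is_use_permitted(consent, "model_training"))   # False: secondary use
```

Because the check fails closed, a new use case such as model training requires an explicit update to the consent scope rather than silently reusing existing data.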

Securing Data Across AI Pipelines

AI systems often combine multiple tools, platforms, and vendors, which increases security complexity. Agencies must ensure data remains protected at every stage of the AI pipeline.

Key AI data security measures include:

- Encrypting data at rest and in transit

- Restricting access based on roles and responsibilities

- Vetting third-party AI vendors for security compliance

Strong security controls protect both agencies and clients from breaches and compliance violations.
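Role-based access can be expressed as an explicit map from roles to permissions with a deny-by-default check. The roles and permission names below are hypothetical placeholders. Encryption at rest and in transit is normally handled by the storage and transport layers (for example, TLS) and is not shown here.

```python
# Hypothetical roles and permissions for an AI pipeline; adapt to your own setup.
ROLE_PERMISSIONS = {
    "account_manager": {"read_outputs"},
    "data_engineer": {"read_training_data", "write_training_data"},
    "governance_lead": {"read_outputs", "read_training_data", "read_audit_logs"},
}


def can_access(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions get no access."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(can_access("data_engineer", "write_training_data"))   # True
print(can_access("account_manager", "read_training_data"))  # False
print(can_access("contractor", "read_outputs"))             # False: unknown role
```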

Data Retention and Deletion Policies

Keeping data longer than necessary increases compliance risk without adding value. Agencies should define clear data retention and deletion rules for AI-related data.

| Data Type | Retention Purpose | Recommended Action |
| --- | --- | --- |
| Training data | Model performance | Review and anonymize regularly |
| User inputs | Service delivery | Delete after defined usage period |
| Logs and outputs | Auditing and troubleshooting | Retain only as legally required |

Well-defined retention policies help agencies demonstrate responsible data governance while limiting long-term exposure.
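Retention rules like those in the table are easier to enforce when they live in code that a scheduled cleanup job can evaluate. This sketch assumes each record carries a data type and a timezone-aware creation timestamp. The 30-day and 365-day periods are placeholder values, not recommendations; real periods should come from contracts and applicable law.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Placeholder retention periods (assumption); set real values with legal counsel.
RETENTION = {
    "user_input": timedelta(days=30),
    "log": timedelta(days=365),
}


def is_expired(data_type: str, created_at: datetime,
               now: Optional[datetime] = None) -> bool:
    """True once a record has outlived the retention period for its type."""
    now = now or datetime.now(timezone.utc)
    return now - created_at > RETENTION[data_type]


def records_to_delete(records: list, now: Optional[datetime] = None) -> list:
    """Select expired records; each record is a dict with 'type' and 'created_at'."""
    return [r for r in records if is_expired(r["type"], r["created_at"], now)]


now = datetime(2025, 3, 1, tzinfo=timezone.utc)
jan_1 = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "type": "user_input", "created_at": jan_1},
    {"id": 2, "type": "log", "created_at": jan_1},
]
print([r["id"] for r in records_to_delete(records, now)])  # [1]
```

Training data is left out of this sketch because the table calls for periodic review and anonymization rather than deletion on a timer.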

Addressing Bias and Fairness in AI Systems

Bias in AI can quietly undermine trust, harm users, and expose agencies to serious legal and reputational risks. As AI models learn from historical data, they may unintentionally reinforce existing inequalities or skewed outcomes.

For agencies and consultants, addressing bias is a core component of ethical AI and responsible AI governance. This section explores how to identify, reduce, and manage bias to ensure fair and defensible AI deployments.

Common Sources of AI Bias

AI bias often originates long before a model is deployed, starting with the data and assumptions used during development. Agencies must understand where bias enters the system to effectively mitigate it.

Primary sources of AI bias include:

- Historical datasets that reflect social or systemic inequalities

- Incomplete or non-representative training data

- Human assumptions embedded in model design

Identifying these sources early helps agencies take corrective action before AI systems reach users.

Evaluating AI Outputs for Fairness

Fairness cannot be assumed—it must be tested continuously as AI systems evolve. Agencies should regularly assess AI outputs to detect patterns that disadvantage specific groups.

Fairness evaluation practices include:

- Auditing AI decisions across demographic segments

- Comparing outcomes against predefined fairness benchmarks

- Monitoring changes in behavior after model updates

Ongoing evaluation ensures AI systems remain aligned with ethical and compliance expectations over time.
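A basic audit across demographic segments compares how often each group receives a favorable outcome. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. The 0.8 threshold is one example benchmark, borrowed from the "four-fifths rule" used in US employment-selection guidance; treat it as an assumption, since the right metric and threshold depend on the use case and jurisdiction.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest; 1.0 means identical rates."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 3), ratio < 0.8)  # {'A': 0.8, 'B': 0.5} 0.625 True
```

Re-running the same audit after each model update, with the same segments and benchmark, makes behavior changes visible over time.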

Mitigation Strategies for Reducing Bias

Reducing bias requires both technical adjustments and governance oversight. Agencies should combine model-level interventions with operational controls.

Effective bias mitigation strategies include:

- Diversifying training datasets and input sources

- Applying bias-detection and correction techniques

- Introducing human review for high-impact decisions

A layered mitigation approach strengthens AI integrity while reducing compliance and reputational risks.
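As one concrete example of a correction technique, reweighing is a well-known preprocessing method that weights each training example so that group membership and outcome label become statistically independent in the weighted data, using w(g, y) = P(g) × P(y) / P(g, y). The sketch below is a minimal version of that idea; the groups, labels, and sample counts are made up for illustration.

```python
from collections import Counter


def reweighing_weights(samples):
    """samples: list of (group, label) pairs.

    Returns a weight for each (group, label) combination, computed as
    P(group) * P(label) / P(group, label), so that group and label are
    independent once each example is weighted.
    """
    n = len(samples)
    group_counts = Counter(g for g, _ in samples)
    label_counts = Counter(y for _, y in samples)
    joint_counts = Counter(samples)
    return {
        (g, y): group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for (g, y) in joint_counts
    }


samples = [("A", 1)] * 6 + [("A", 0)] * 2 + [("B", 1)] * 2 + [("B", 0)] * 2
weights = reweighing_weights(samples)
# Combinations rarer than independence would predict (A/0 and B/1) get weights above 1.
print({k: round(v, 3) for k, v in weights.items()})
```

The weights are then passed to a training routine that accepts per-example weights; this changes what the model learns from, so it still needs the human review described above.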

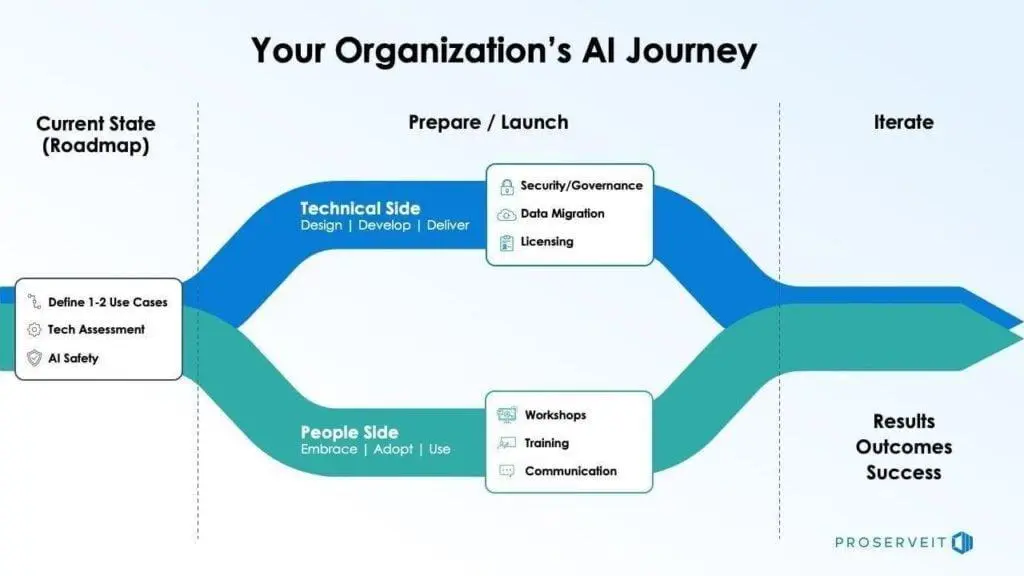

Image source: proserveit.com

Accountability for Biased AI Outcomes

When bias occurs, agencies must be prepared to respond transparently and responsibly. Clear accountability frameworks help manage incidents without escalating client or regulatory concerns.

| Responsibility Area | Assigned Role | Response Action |
| --- | --- | --- |
| Bias detection | AI governance lead | Trigger review and analysis |
| Client communication | Account manager | Explain impact and remediation |
| System correction | Technical team | Adjust model or data inputs |

Defined accountability ensures agencies can act quickly and credibly when bias-related issues arise.
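Encoding the accountability table as data makes it easy to generate the same response plan every time an incident is logged. The sketch below mirrors the table's three rows; the incident ID format and the `response_plan` helper are hypothetical.

```python
# Mirrors the accountability table: area -> (assigned role, response action).
RESPONSIBILITIES = {
    "bias_detection": ("AI governance lead", "Trigger review and analysis"),
    "client_communication": ("Account manager", "Explain impact and remediation"),
    "system_correction": ("Technical team", "Adjust model or data inputs"),
}


def response_plan(incident_id: str) -> list:
    """Produce one task line per responsibility area for a bias incident."""
    return [
        f"[{incident_id}] {role}: {action}"
        for role, action in RESPONSIBILITIES.values()
    ]


for task in response_plan("INC-001"):
    print(task)
```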

Ensuring Transparency and Explainability in AI Solutions

Transparency is essential for building trust in AI, especially when systems influence decisions that affect customers, employees, or business outcomes. Agencies that cannot explain how AI works risk losing credibility with both clients and regulators.

For consultants, explainable AI supports stronger advisory relationships by making AI decisions understandable, defensible, and aligned with ethical AI standards. This section focuses on practical ways agencies can embed transparency into AI-driven services.

Communicating AI Use to Clients and End Users

Clear communication helps set expectations about what AI does and where human judgment still applies. Agencies should avoid treating AI as a “black box” in client-facing solutions.

Effective AI communication practices include:

- Disclosing when and how AI is used in workflows

- Clarifying AI limitations and potential risks

- Defining where human oversight is applied

Transparent communication reduces misunderstandings and reinforces trust in AI-enabled services.

Documenting AI Decision-Making Processes

Documentation is a critical compliance asset when questions arise about AI outcomes. Agencies should maintain clear records of how AI systems are trained, tested, and deployed.

Key documentation elements include:

- Data sources and training methodologies

- Model objectives and performance metrics

- Known limitations and risk factors

Well-maintained documentation supports audits, client inquiries, and regulatory reviews.
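Documentation is easier to keep complete when it has a fixed structure. This sketch, loosely modeled on the idea of a model card, defines a hypothetical record covering the elements listed above and reports any that are still empty. The field names and example values are illustrative.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ModelRecord:
    """Hypothetical documentation record for one deployed AI system."""
    name: str
    data_sources: list = field(default_factory=list)
    training_method: str = ""
    objective: str = ""
    performance_metrics: dict = field(default_factory=dict)
    known_limitations: list = field(default_factory=list)


def missing_fields(record: ModelRecord) -> list:
    """Names of documentation fields that are still empty."""
    return [f.name for f in fields(record) if not getattr(record, f.name)]


record = ModelRecord(
    name="support-assistant",
    data_sources=["public help-center articles"],
    objective="Answer customer support questions",
)
print(missing_fields(record))  # ['training_method', 'performance_metrics', 'known_limitations']
```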

Explainability for High-Impact AI Use Cases

Not all AI systems require the same level of explainability, but high-impact applications demand extra scrutiny. Agencies must prioritize explainability when AI influences sensitive or irreversible decisions.

High-impact AI scenarios include:

- Automated decision-making affecting individuals

- AI-driven recommendations with legal or financial implications

- Systems operating in regulated industries

Focusing explainability where it matters most helps agencies balance innovation with accountability.
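A simple way to focus explainability effort is to tag each use case against the high-impact scenarios above and derive its required controls. The flags, control names, and two-tier split in this sketch are assumptions for illustration; real tiering should follow the applicable regulation and the client's own risk policy.

```python
from dataclasses import dataclass


@dataclass
class AIUseCase:
    """Hypothetical description of an AI use case, one flag per high-impact scenario."""
    name: str
    automated_decisions_about_individuals: bool = False
    legal_or_financial_impact: bool = False
    regulated_industry: bool = False


def is_high_impact(use_case: AIUseCase) -> bool:
    """Any one high-impact scenario is enough to raise the tier."""
    return (use_case.automated_decisions_about_individuals
            or use_case.legal_or_financial_impact
            or use_case.regulated_industry)


def required_controls(use_case: AIUseCase) -> list:
    """Every use case gets disclosure; high-impact ones also get explanations and review."""
    controls = ["disclose AI use"]
    if is_high_impact(use_case):
        controls += ["explain individual decisions", "human review before final decision"]
    return controls


print(required_controls(AIUseCase("blog drafting")))
print(required_controls(AIUseCase("loan pre-screening", legal_or_financial_impact=True)))
```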

Building Trust Through Responsible AI Practices

Transparency is not a one-time effort—it’s an ongoing commitment to responsible AI use. Agencies that consistently demonstrate openness and accountability stand out in a crowded AI services market.

Trust-building AI practices include:

- Regular reviews of AI performance and outcomes

- Open discussions with clients about AI risks and safeguards

- Continuous alignment with evolving AI governance standards

Sustained transparency strengthens long-term client relationships while supporting compliant AI growth.

Image source: tuesdaystrong.com

Partner Checklist for AI Compliance and Responsible Use

For agencies and consultants, translating AI compliance principles into daily operations is where real value is created. A clear checklist helps partners consistently apply legal, ethical, and governance standards across client engagements without slowing innovation.

This final section serves as a practical reference point partners can use to validate AI readiness, reduce risk, and demonstrate responsible AI leadership. It’s designed to support repeatable, scalable compliance across projects.

Data Privacy and Governance Readiness

Strong data governance is the foundation of compliant AI delivery. Partners should confirm privacy safeguards are in place before any AI system is deployed.

Core data governance checks partners should complete include:

- Verify lawful data collection and documented user consent

- Classify and minimize high-risk or sensitive data usage

- Define clear data retention and deletion timelines

Completing these checks early prevents downstream compliance and trust issues.

Bias and Fairness Controls

Partners must actively manage bias rather than assume AI outputs are neutral. Regular evaluation ensures AI systems remain fair as data and use cases evolve.

Bias and fairness validation steps include:

- Review training data for representativeness and gaps

- Test AI outputs across different user groups

- Assign ownership for bias monitoring and remediation

Proactive bias controls protect both clients and end users from unintended harm.

Transparency and Explainability Standards

Clients and users should never be left guessing how AI influences outcomes. Transparency strengthens credibility and simplifies compliance discussions.

Transparency requirements partners should confirm include:

- Clear disclosure of AI usage in products or services

- Accessible explanations for high-impact AI decisions

- Up-to-date documentation of AI models and limitations

Consistent transparency reduces friction with clients and regulators alike.

Ongoing Compliance and Accountability

AI compliance is not a one-time task; it requires continuous oversight. Partners should treat compliance as an evolving operational discipline.

Ongoing compliance actions include:

- Regular reviews of AI performance and risk exposure

- Monitoring regulatory and industry standard updates

- Maintaining clear escalation paths for AI-related issues

Using this checklist consistently helps partners deliver AI solutions that are compliant, ethical, and trusted—creating long-term value for both clients and the agency.
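For teams that track readiness in their own tooling, the checklist can also be kept as data and evaluated per project. In this sketch the item wording condenses the bullets above, and `open_items` is a hypothetical helper that returns the unchecked items per section; an empty result means every item has been confirmed.

```python
# Condensed from the checklist sections above; wording is illustrative.
CHECKLIST = {
    "Data privacy and governance": [
        "Lawful collection and documented consent verified",
        "High-risk data classified and minimized",
        "Retention and deletion timelines defined",
    ],
    "Bias and fairness": [
        "Training data reviewed for representativeness",
        "Outputs tested across user groups",
        "Bias monitoring owner assigned",
    ],
    "Transparency and explainability": [
        "AI use disclosed",
        "High-impact decisions explainable",
        "Model documentation up to date",
    ],
    "Ongoing compliance": [
        "Performance and risk reviews scheduled",
        "Regulatory updates monitored",
        "Escalation path defined",
    ],
}


def open_items(completed: set) -> dict:
    """Return the unchecked items for each section; an empty dict means ready."""
    gaps = {}
    for section, items in CHECKLIST.items():
        missing = [item for item in items if item not in completed]
        if missing:
            gaps[section] = missing
    return gaps


done = {"AI use disclosed", "Escalation path defined"}
for section, items in open_items(done).items():
    print(f"{section}: {len(items)} open")
```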

FAQ

Q: What does AI compliance mean for agencies and consultants?

A: AI compliance refers to following legal, ethical, and regulatory requirements when using AI systems for clients. It typically covers data protection, transparency, accountability, and risk management. The exact obligations depend on jurisdiction and use case. Compliance reduces legal exposure but does not eliminate all risk.

Q: How do agencies know if their AI use involves personal data?

A: AI involves personal data if it processes information that can identify an individual directly or indirectly. This includes names, contact details, behavioral data, or online identifiers. Even data described as anonymized can still count as personal data if re-identification is reasonably possible. A data-mapping review is usually required.

Q: Why is bias a compliance issue in AI systems?

A: Bias can lead to discriminatory outcomes, which may violate anti-discrimination laws or ethical standards. It often arises from skewed training data or flawed assumptions in model design. Regular testing and oversight help reduce risk. Bias cannot be fully eliminated, only managed.

Q: What level of transparency is expected when using AI with clients?

A: Transparency generally means disclosing when AI is used and explaining its role in decision-making. For high-impact uses, agencies may need to explain data sources, logic, and limitations. The depth of explanation depends on regulatory requirements and risk level. Full explainability is not always technically possible.

Q: Can agencies rely on AI vendors to handle compliance entirely?

A: No, agencies typically remain responsible for how AI is used in their services, even when tools are third-party. Vendors may provide compliance features, but responsibility is shared. Agencies must still assess risks, document decisions, and ensure lawful use. Outsourcing tools does not outsource accountability.

Conclusion

AI compliance is no longer a secondary consideration—it’s a core responsibility for agencies and consultants working with intelligent systems.

By addressing data privacy, bias, transparency, and accountability early, partners can reduce risk while building long-term trust with clients.

If you’re looking to operationalize compliant AI across client environments without adding complexity, explore scalable AI infrastructure solutions designed specifically for service providers.

Learn how purpose-built platforms can support responsible AI deployment at scale.
