Enterprise chatbots can produce confident, incorrect answers (“hallucinations”), which creates trust, compliance, and security risk. An AI governance checklist helps you define what the bot is allowed to say, prove what it used as evidence, and continuously verify responses, especially in regulated or high-stakes workflows.

Start CustomGPT’s 7-day free trial to verify chatbot answers.

TL;DR

An AI governance checklist for enterprise chatbots is a set of controls that defines allowed sources and behavior, enforces grounded retrieval, verifies each answer (ideally at claim level), and logs decisions for audits, so the bot stays accurate, defensible, and safe to deploy in real workflows.

- Treat governance as an end-to-end control system: scope → retrieval → verification/compliance → monitoring → reviews.

- Hallucination mitigation best practices focus on grounding, evaluation, and guardrails, but you still need claim-level verification for high-risk answers.

- CustomGPT’s Verify Responses is designed to extract claims, flag what’s unsupported, and review outputs across stakeholder perspectives (legal/compliance/security/PR/exec).

Define Scope, Sources, And Answer Boundaries

Goal: Make it impossible for the chatbot to “make stuff up” outside approved knowledge.

- Define the chatbot’s job (support deflection, employee policy, product documentation, etc.).

- Define answer boundaries: what it can answer vs must refuse/escalate.

- Define approved sources (policies, KB, product docs, SOPs) and exclude everything else.

- Define citation requirements: every factual statement must be source-attributed.

- Define “high-risk topics” (legal, HR, pricing, security, compliance, medical, finance).

- Define tone and non-guarantee language (avoid “always / never / guaranteed”).

- Define stakeholder requirements (legal review rules, security constraints, PR constraints).

Why this matters: governance frameworks treat risk as contextual, so your controls must match the domain and impact.
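The scope decisions above can be written down as data rather than left in a prompt. The following is a minimal sketch, not a CustomGPT schema: `ScopePolicy`, `decide`, and `absolute_language` are hypothetical names, and routing every high-risk topic to escalation is an assumption you would adapt to your own boundaries.

```python
from dataclasses import dataclass

# Hypothetical policy object; field names are illustrative only.
@dataclass(frozen=True)
class ScopePolicy:
    approved_sources: frozenset
    high_risk_topics: frozenset
    banned_phrases: tuple = ("always", "never", "guaranteed")

def decide(policy, topic, cited_sources):
    """Return 'escalate', 'refuse', or 'answer' for a drafted response."""
    if topic in policy.high_risk_topics:
        return "escalate"  # assumption: high-risk topics go to a human
    cited = set(cited_sources)
    if not cited or not cited <= policy.approved_sources:
        return "refuse"  # no evidence, or evidence outside the approved set
    return "answer"

def absolute_language(policy, text):
    """List banned absolute terms that appear as whole words in the draft."""
    words = {w.strip(".,;:!?\"'()").lower() for w in text.split()}
    return [p for p in policy.banned_phrases if p in words]
```

A policy built with `ScopePolicy(frozenset({"hr-handbook"}), frozenset({"legal"}))` would escalate a "legal" question and refuse any answer that cites nothing or cites a source outside the approved set.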

Enforce Retrieval Quality And Source Freshness

Goal: Reduce hallucinations caused by retrieval gaps, stale docs, or ambiguous context.

- Document hygiene: versioning, ownership, last reviewed date, and canonical sources.

- Chunking & structure: keep procedures and policies cleanly sectioned.

- Freshness controls: retire outdated pages; refresh indexed sources on a schedule.

- Coverage checks: test common intents; confirm sources exist for top queries.

- Grounding guardrails: use “answer from sources” behavior; refuse when evidence is missing.

- Evaluation loop: run test sets for top questions; track regressions after updates.

- User experience: make citations visible so users can verify quickly (and learn what the bot knows).

CustomGPT supports citations (including inline citations), so end users can see where statements came from and click through to sources.

It also provides controls around how sources/citations are shown and how the agent uses knowledge base awareness.
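Document hygiene and freshness controls are easier to enforce when they run as a scheduled check. The sketch below assumes each indexed document carries `id`, `owner`, and `last_reviewed` metadata (hypothetical field names) and uses a 90-day review window purely as an example threshold.

```python
from datetime import date, timedelta

def stale_documents(docs, today, max_age_days=90):
    """Return IDs of documents with no owner or a review date older than the window."""
    cutoff = today - timedelta(days=max_age_days)
    flagged = []
    for doc in docs:
        # Flag missing ownership as well as age: both are hygiene failures.
        if not doc.get("owner") or doc["last_reviewed"] < cutoff:
            flagged.append(doc["id"])
    return flagged

docs = [
    {"id": "leave-policy", "owner": "hr", "last_reviewed": date(2024, 5, 1)},
    {"id": "old-pricing", "owner": "sales", "last_reviewed": date(2023, 1, 1)},
    {"id": "orphan-faq", "owner": "", "last_reviewed": date(2024, 5, 20)},
]
flagged = stale_documents(docs, today=date(2024, 6, 1))
```

Flagged documents can then be retired or sent to their owner for review before the next re-index.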

Related Q&As

- Introducing Inline Citations (trust + transparency)

- Control Agent’s General Knowledge Base Awareness (tighten scope)

- How Are Citations And Links Tracked (measure what users click)

- Enable OpenGraph Citations (visual source previews)

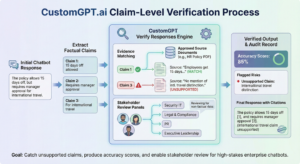

Add Claim-Level Verification For High-Risk Answers

Goal: Catch answers that are partly correct but contain one risky or unsupported claim.

Most hallucination guidance emphasizes grounding and guardrails (good), but high-stakes enterprise chatbots need verification that operates at the claim level, not just “overall answer seems grounded.”

What “Claim-Level Verification” Looks Like

- Extract factual claims from the response

- Match each claim to supporting evidence in approved sources

- Flag unsupported claims

- Produce an accuracy score and an audit record

CustomGPT’s Verify Responses is positioned exactly this way: it extracts claims, checks them against your source documents, flags unsupported claims, and provides an accuracy score.

It also adds a stakeholder review (“Trust Building”) across multiple perspectives (End User, Security IT, Risk Compliance, Legal Compliance, PR, Executive Leadership) to surface risks that aren’t purely factual errors.
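To make the extract, match, flag, and score steps concrete, here is a deliberately naive sketch. It is not how Verify Responses works internally: it treats each sentence as one claim and uses word overlap as a stand-in for evidence matching, where a production system would more likely use a language model or an entailment check. The function names and the 0.8 threshold are assumptions.

```python
import re

def tokens(text):
    """Lowercase word tokens, used for a crude lexical overlap check."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def extract_claims(answer):
    """Naive claim extraction: treat each sentence as one claim."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]

def verify(answer, sources, threshold=0.8):
    """Match each claim to its best-overlapping source and score the answer."""
    results = []
    for claim in extract_claims(answer):
        claim_tokens = tokens(claim)
        best_id, best_score = None, 0.0
        for source_id, passage in sources.items():
            score = len(claim_tokens & tokens(passage)) / max(len(claim_tokens), 1)
            if score > best_score:
                best_id, best_score = source_id, score
        supported = best_score >= threshold
        results.append({
            "claim": claim,
            "source": best_id if supported else None,
            "supported": supported,
        })
    accuracy = sum(r["supported"] for r in results) / max(len(results), 1)
    return {"accuracy": accuracy, "claims": results}

sources = {"leave-policy": "Employees accrue 15 days of paid leave per year."}
answer = ("Employees accrue 15 days of paid leave per year. "
          "Unused leave is paid out in cash.")
report = verify(answer, sources)
```

In this example the first sentence is fully supported by the source passage and the second is not, which is the "mostly right, one unsupported claim" case that answer-level checks tend to miss.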

When To Run Verification

- Always-on for high-risk chatbots (policy, compliance, finance, HR).

- On-demand (spot checks) for lower-risk chatbots or during review cycles.

- Pre-launch and after major content updates (regression gates).

Cost note (so you can plan): Verify Responses is listed as an action with agentic cost 4 (i.e., it can increase query cost when invoked).
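Because verification adds cost per query, it can help to encode the "always-on, spot check, or release gate" decision explicitly. The sketch below is one possible approach under stated assumptions: `should_verify` is a hypothetical helper, the category names mirror the list above, and the 10% sampling rate for spot checks is an arbitrary example.

```python
import hashlib

ALWAYS_ON = {"policy", "compliance", "finance", "hr"}

def should_verify(category, query_id, release_gate=False, sample_rate=0.1):
    """Decide whether a response should be sent through verification."""
    if release_gate or category in ALWAYS_ON:
        return True
    # Deterministic spot checks: hash the query ID into a bucket in [0, 1)
    # so the same query always gets the same decision.
    digest = hashlib.sha256(query_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return bucket < sample_rate
```

Hashing the query ID instead of calling a random number generator keeps spot checks reproducible, which makes later audits of "why was this answer verified?" easier to answer.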

Try CustomGPT Verify Responses with a 7-day free trial today.

Run Security, Privacy, And Compliance Checks

Goal: Reduce exposure from prompt injection, sensitive data leakage, and policy violations.

- Prompt injection defense: treat user input as untrusted; prevent “ignore previous instructions” overrides. OWASP highlights prompt injection as a key LLM risk category.

- PII handling: block sensitive outputs; restrict access to sensitive sources.

- Source controls: whitelists, permissioning, and authenticated access for internal bots.

- Compliance workflow: require citations for regulated answers; escalate when evidence is missing.

- Security posture: ensure vendor platform claims (e.g., SOC 2 / GDPR) align with your requirements.

CustomGPT’s Verify Responses messaging explicitly positions secure in-platform analysis and SOC 2 Type II / GDPR-compliant processing.

(Still do your own procurement/security review; governance checklists should always require verification of vendor controls.)
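Two of the checks above, treating user input as untrusted and blocking sensitive outputs, can be sketched in a few lines. This is an illustration only: the regular expressions cover just email addresses and US-style SSNs, the override phrases are examples, and phrase matching on its own is not a sufficient prompt injection defense. All names here are hypothetical.

```python
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
OVERRIDE_HINTS = ("ignore previous instructions", "disregard the above")

def redact_pii(text):
    """Mask email addresses and SSN-shaped numbers before text leaves the system."""
    text = EMAIL.sub("[REDACTED_EMAIL]", text)
    return SSN.sub("[REDACTED_SSN]", text)

def looks_like_override(user_input):
    """Heuristic flag for review; a signal, not a complete defense."""
    lowered = user_input.lower()
    return any(hint in lowered for hint in OVERRIDE_HINTS)

def build_messages(system_rules, user_input):
    """Keep untrusted input in its own message instead of merging it into the rules."""
    return [
        {"role": "system", "content": system_rules},
        {"role": "user", "content": user_input},
    ]
```

Keeping system rules and user text in separate messages does not stop injection by itself, but it avoids the most basic failure mode of concatenating untrusted text directly into instructions.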

Instrument Monitoring, Audit Trails, And Escalation

Goal: Make the system observable and auditable, so you can improve it and defend it.

- Log every answer and the sources it relied on (or didn’t).

- Track what users click (citations/links) to understand intent and content gaps.

- Create escalation paths: “I don’t know” + handoff for missing coverage.

- Trend risk signals: repeated unsupported claims, top refusal categories, top misunderstood topics.

- Audit packaging: produce a record Legal/Compliance can review.

Verify Responses is explicitly framed as producing a verifiable, auditable record and enabling audit-ready documentation.
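If you also keep your own logs, a structured record per answer makes the items above reviewable. The following sketch shows one possible shape for such a record; the field names are assumptions and do not reflect any export format from CustomGPT.

```python
import json
from datetime import datetime, timezone

def audit_record(question, answer, sources, unsupported_claims, escalated):
    """Serialize one answer and its evidence as a single JSON log line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "question": question,
        "answer": answer,
        "sources": sorted(sources),  # empty list means no evidence was used
        "unsupported_claims": list(unsupported_claims),
        "escalated": escalated,
    }
    return json.dumps(record, sort_keys=True)

line = audit_record(
    question="How many leave days do I get?",
    answer="Employees accrue 15 days of paid leave per year.",
    sources={"hr-handbook"},
    unsupported_claims=[],
    escalated=False,
)
```

One JSON object per line is easy to append to a log file and to aggregate later for the risk trends listed above, such as repeated unsupported claims.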

Operationalize With Roles, Review Cadence, And RACI

Goal: Keep governance alive after launch.

- RACI: content owner, security reviewer, legal reviewer, chatbot operator, approver.

- Change management: any policy update triggers re-index + regression tests.

- Review cadence: monthly for normal docs; weekly for volatile content.

- Release gates: ship only if evaluation + verification pass for high-risk topics.

- Metrics: coverage, refusal rate, citation rate, verified claim rate (where applicable).

Standards and frameworks support establishing org-level governance structures for AI systems.
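The metrics and release gate above can be computed from logged interactions. This sketch assumes each record has `refused`, `cited`, `claims_total`, and `claims_verified` fields (hypothetical names), defines coverage as the share of questions that received an answer, and uses example thresholds that you would set per risk level.

```python
def governance_metrics(records):
    """Compute coverage, refusal, citation, and verified-claim rates."""
    total = len(records)
    answered = [r for r in records if not r["refused"]]
    claims = sum(r["claims_total"] for r in answered)
    verified = sum(r["claims_verified"] for r in answered)
    return {
        "coverage": len(answered) / max(total, 1),
        "refusal_rate": (total - len(answered)) / max(total, 1),
        "citation_rate": sum(1 for r in answered if r["cited"]) / max(len(answered), 1),
        "verified_claim_rate": verified / max(claims, 1),
    }

def release_gate(metrics, min_citation_rate=0.95, min_verified_rate=0.98):
    """Pass only if citation and verification rates meet the assumed thresholds."""
    return (metrics["citation_rate"] >= min_citation_rate
            and metrics["verified_claim_rate"] >= min_verified_rate)

records = [
    {"refused": False, "cited": True, "claims_total": 4, "claims_verified": 4},
    {"refused": False, "cited": True, "claims_total": 3, "claims_verified": 3},
    {"refused": False, "cited": True, "claims_total": 3, "claims_verified": 2},
    {"refused": True, "cited": False, "claims_total": 0, "claims_verified": 0},
]
metrics = governance_metrics(records)
ship = release_gate(metrics)
```

Here one unverified claim out of ten is enough to hold the release, which matches the intent of gating high-risk topics on verification rather than on overall impressions.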

Example: HR Policy Chatbot In A Regulated Enterprise

Scenario: Employees ask about leave policy, benefits eligibility, and disciplinary process.

- Scope sources to the HR handbook, policy memos, and approved FAQs.

- Require citations for all policy statements.

- For questions that could create liability (“Can we terminate for X?”), require escalation.

- Turn on Verify Responses for HR topics (always-on or during review), so unsupported claims get flagged and the response is reviewed across stakeholder lenses.

- Log answers + verification results for audits and internal review.

Expected result: fewer risky answers reaching employees, faster compliance review, and clearer evidence trails when HR updates policies.

Conclusion

A practical AI governance checklist for enterprise chatbots pairs grounded sources with verification and audit trails. If you need claim-level checks and stakeholder risk review, CustomGPT Verify Responses can help, and you can try it free for 7 days.

FAQ

What Is An AI Governance Checklist For Enterprise Chatbots?

An AI governance checklist is a set of controls that defines what your chatbot can answer, which sources it can use, how it proves claims with citations, and how you monitor and audit outputs over time.

What Is Claim-Level Verification And Why Does It Matter?

Claim-level verification extracts factual claims from a response and checks each claim against approved sources. It matters because a response can be “mostly right” but still contain one unsupported statement that creates risk.

How Does CustomGPT Verify Responses Help With Governance?

Verify Responses is described as extracting claims, flagging unsupported statements, producing an accuracy score, and running a stakeholder risk review (security/legal/PR/exec) so teams can create audit-ready documentation.

What’s The Biggest Governance Risk For LLM Chatbots?

Prompt injection is a major risk class for LLM applications (especially when tools/actions or sensitive data are involved), so governance should include input handling rules and secure boundaries.