Scaling a business puts pressure on many systems, but customer support feels it first. As customer volume grows, questions multiply, edge cases appear, and urgency increases. What once felt manageable quickly becomes a bottleneck.

This is where AI support agents change the equation.

Instead of relying on linear headcount growth or forcing customers through rigid self-service flows, companies can now design AI-driven support systems that reduce ticket volume while improving customer satisfaction.

When built correctly, AI support agents don’t replace human teams—they make them dramatically more effective.

The result is a support organization that scales with the business instead of holding it back.

The Shift From Ticket Handling to Support Systems

Traditional support models are built around people responding to tickets. That model breaks under rapid growth because tickets are the most expensive output of the system.

Modern support works differently.

Support is a system designed to help customers succeed. Tickets are a signal that the system failed somewhere upstream.

AI makes it possible to redesign that system.

Instead of asking, “How do we answer tickets faster?” the better question becomes, “How do we prevent tickets from being created—and resolve the ones that remain with less effort?”

This shift is foundational. Without it, AI becomes just another chatbot layered on top of broken workflows.

What “Scaling Support” Really Means

If your company is trying to grow 3X, your support volume usually doesn’t grow 3X. It often grows faster.

Why?

- New customers are unfamiliar with the product

- New marketing channels bring lower-intent users

- Increased usage creates more combinations and edge cases

- Revenue growth adds billing complexity

- Frequent product changes create confusion

So scaling support requires solving two problems at the same time:

- Reduce demand by preventing avoidable tickets

- Increase capacity by handling remaining issues faster

AI can do both—but only when it’s grounded in real support knowledge and deployed where customers actually get stuck, not bolted onto a generic chatbot widget.
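Both levers can be put into a rough staffing estimate. The sketch below is a back-of-the-envelope model. The function name `agents_needed` and every input figure are hypothetical planning numbers, not benchmarks. Deflection shrinks the number of tickets that reach humans, and faster handling shrinks the minutes each remaining ticket costs.

```python
import math


def agents_needed(monthly_tickets: int, deflection_rate: float,
                  minutes_per_ticket: float, agent_minutes_per_month: float) -> int:
    """Estimate human agents needed; all inputs are hypothetical planning figures."""
    if not 0.0 <= deflection_rate <= 1.0:
        raise ValueError("deflection_rate must be between 0 and 1")
    # Lever 1, reduce demand: only tickets that are not deflected reach humans.
    remaining_tickets = monthly_tickets * (1.0 - deflection_rate)
    # Lever 2, increase capacity: fewer minutes per ticket means more tickets per agent.
    workload_minutes = remaining_tickets * minutes_per_ticket
    # Round up, because a fraction of an agent still has to be staffed.
    return math.ceil(workload_minutes / agent_minutes_per_month)


# Hypothetical example: 30,000 tickets/month, 6,000 working minutes per agent.
baseline = agents_needed(30_000, 0.0, 12, 6_000)   # no AI
with_ai = agents_needed(30_000, 0.25, 10, 6_000)   # 25% deflection, faster handling
```

With these made-up inputs, the baseline needs 60 agents and the AI-assisted setup needs 38. The point is that the two levers multiply, so modest gains on each compound.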

The Role of AI in Modern Customer Support

AI support becomes effective when it operates as a system, not a feature.

In practice, this means intent detection, knowledge retrieval, and routing must function as one continuous loop. Every customer message updates state, and every response moves the interaction closer to resolution or escalation.

What matters most isn’t model size. It’s context.

An AI support agent must reason with three types of context at the same time:

- Interaction context: conversation history, sentiment, channel

- System context: entitlements, configurations, feature flags

- Knowledge context: documentation, policies, runbooks

When all three are present, the agent can safely resolve issues. When any are missing, the agent should switch behavior—from solving to narrowing and routing.

This is why the most effective AI support agents act as context amplifiers, not autonomous problem-solvers.
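The rule above can be sketched as a simple gate. In the sketch, `SupportContext` and `choose_mode` are hypothetical names, and "present" is reduced to "non-empty" for illustration. The agent resolves only when interaction, system, and knowledge context are all available; otherwise it switches to narrowing the problem and routing it, and reports which context is missing.

```python
from dataclasses import dataclass, field


@dataclass
class SupportContext:
    """The three context types from the text; field contents are illustrative."""
    interaction: dict = field(default_factory=dict)  # conversation history, sentiment, channel
    system: dict = field(default_factory=dict)       # entitlements, configurations, feature flags
    knowledge: list = field(default_factory=list)    # retrieved docs, policies, runbooks


def choose_mode(ctx: SupportContext) -> tuple[str, list[str]]:
    """Resolve only when all three context types are present; otherwise narrow and route."""
    missing = [
        name
        for name, value in (
            ("interaction", ctx.interaction),
            ("system", ctx.system),
            ("knowledge", ctx.knowledge),
        )
        if not value  # treat empty context as missing
    ]
    if not missing:
        return "resolve", []
    # Any gap switches behavior from solving to narrowing and routing.
    return "narrow_and_route", missing


ctx = SupportContext(interaction={"channel": "chat"}, knowledge=["refund-policy-v3"])
mode, gaps = choose_mode(ctx)  # no system context was loaded
```

Returning the list of gaps, rather than a bare refusal, gives the routing step something concrete to ask for or pass along.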

What Ticket Deflection Actually Means

Ticket deflection is often misunderstood. It’s not about suppressing tickets or pushing customers away from human help. Real ticket deflection means a customer’s problem is resolved without becoming a case—and stays resolved.

That requires proof.

Effective deflection systems track:

- the customer’s intent

- the content or action served

- whether the customer confirmed resolution

- whether they recontacted later for the same issue

Without this, deflection metrics can look healthy while unresolved demand quietly resurfaces in other channels.

High-quality deflection focuses on verified resolution, not raw volume reduction.
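The four tracked signals translate directly into a record and a scoring rule. The sketch below is a minimal illustration: the `DeflectionEvent` fields mirror the list above, while the 7-day recontact window is an assumption chosen for the example. A deflection only counts as verified when the customer confirmed resolution and did not come back for the same issue within the window.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class DeflectionEvent:
    intent: str                              # the customer's detected intent
    served: str                              # content or action served
    confirmed: bool                          # did the customer confirm resolution?
    at: datetime                             # when the deflection happened
    recontact_at: Optional[datetime] = None  # later contact for the same issue, if any


def is_verified(event: DeflectionEvent, window: timedelta = timedelta(days=7)) -> bool:
    """Confirmed, and no recontact for the same issue inside the (assumed) window."""
    if not event.confirmed:
        return False
    if event.recontact_at is not None and event.recontact_at - event.at <= window:
        return False
    return True


def verified_deflection_rate(events: list[DeflectionEvent],
                             window: timedelta = timedelta(days=7)) -> float:
    if not events:
        return 0.0
    return sum(is_verified(e, window) for e in events) / len(events)
```

Counting the same three events by raw volume would report 100% deflection; the verified rate is what exposes demand resurfacing elsewhere.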

Designing AI Support Agents as Systems, Not Bots

An AI support agent isn’t a single prompt or chat interface. It’s a purpose-built system with a defined role and clear boundaries.

Thinking in terms of AI employees forces clarity.

An effective AI support agent has:

- a defined job (what it is and isn’t responsible for)

- access to the right data sources

- rules governing when it can resolve and when it must escalate

- measurable success metrics tied to resolution quality

This mindset moves teams from experimenting with chatbots to building reliable support infrastructure.
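One way to force that clarity is to write the role down as data. The sketch below is hypothetical throughout: `AgentSpec`, the billing example, the intent names, and the refund limit are illustrations, not a standard schema. It captures the four elements from the list (job, data sources, resolve/escalate rules, success metrics) and exposes the boundary as a single check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    """A hypothetical 'AI employee' definition; every field name here is illustrative."""
    job: str                          # what it is responsible for
    in_scope: frozenset[str]          # intents it may resolve; anything else is not its job
    data_sources: tuple[str, ...]     # systems it is allowed to read
    max_refund: float                 # example boundary: above this, it must escalate
    success_metrics: tuple[str, ...]  # tied to resolution quality, not volume

    def may_resolve(self, intent: str, refund_amount: float = 0.0) -> bool:
        """Resolve only in-scope intents that stay inside the defined boundary."""
        return intent in self.in_scope and refund_amount <= self.max_refund


billing_agent = AgentSpec(
    job="Answer billing questions and issue small goodwill credits",
    in_scope=frozenset({"invoice_question", "duplicate_charge"}),
    data_sources=("billing_api", "policy_kb"),
    max_refund=50.0,
    success_metrics=("verified_resolution_rate", "recontact_rate_7d"),
)
```

Making the spec immutable (`frozen=True`) means a change in scope is a deliberate, reviewable edit rather than something the agent drifts into.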

Core Components of an AI Support Agent

Most AI support failures happen due to misalignment between components, not weak models.

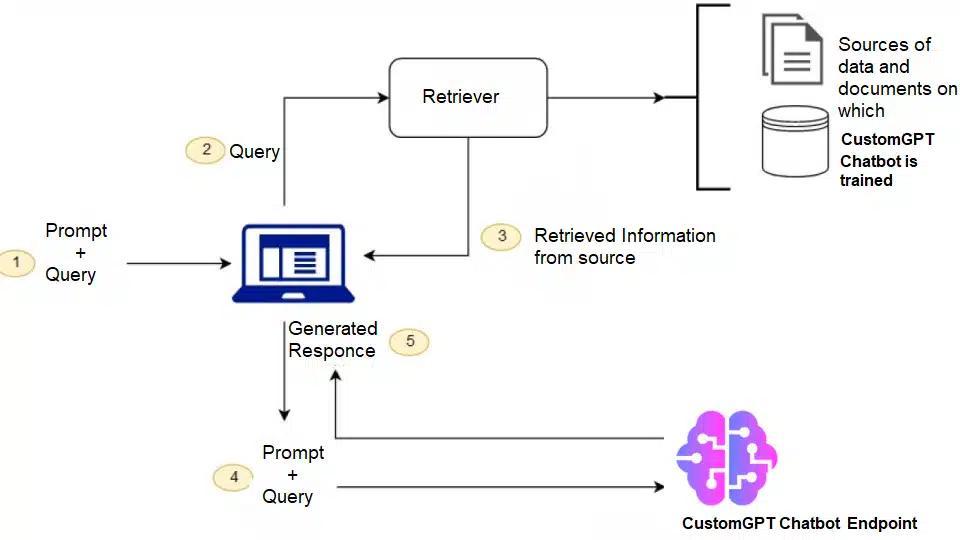

A reliable architecture separates three layers:

- Natural language understanding for intent and entity extraction

- Retrieval grounded in governed support knowledge

- Policy orchestration that controls routing and escalation

Retrieval-augmented generation is critical here. It constrains responses to approved sources and helps ensure answers reflect current policy and product state.

In this setup, the AI behaves less like a conversationalist and more like a planner—breaking requests into steps, pulling relevant information, and assembling responses within defined rules.
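The three layers can be kept separate even in a toy version. In the sketch below, keyword rules stand in for a real NLU model, keyword overlap stands in for vector search, and "generation" is reduced to citing the top approved article; all function names and the `approved` flag are hypothetical. What it does show faithfully is the separation: unapproved content never reaches the answer, and the policy layer escalates when nothing grounded is found.

```python
import re
from dataclasses import dataclass


@dataclass
class KnowledgeArticle:
    article_id: str
    text: str
    approved: bool  # governance flag: only approved content may ground answers


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def understand(message: str) -> dict:
    """Layer 1 (NLU): toy intent/entity extraction via keyword rules."""
    tokens = tokenize(message)
    intent = "password_reset" if {"password", "reset"} & tokens else "unknown"
    return {"intent": intent, "tokens": tokens}


def retrieve(parsed: dict, kb: list[KnowledgeArticle], k: int = 2) -> list[KnowledgeArticle]:
    """Layer 2 (retrieval): rank approved articles by keyword overlap (stand-in for vector search)."""
    scored = []
    for article in kb:
        if not article.approved:
            continue  # ungoverned content is never eligible
        overlap = len(parsed["tokens"] & tokenize(article.text))
        if overlap:
            scored.append((overlap, article))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [article for _, article in scored[:k]]


def orchestrate(message: str, kb: list[KnowledgeArticle]) -> dict:
    """Layer 3 (policy): answer only when grounded; otherwise escalate."""
    parsed = understand(message)
    sources = retrieve(parsed, kb)
    if parsed["intent"] == "unknown" or not sources:
        return {"action": "escalate", "reason": "no grounded answer"}
    # A real system would pass `sources` to a language model; here we cite the top source.
    return {"action": "answer", "text": sources[0].text,
            "sources": [s.article_id for s in sources]}
```

Because each layer is a separate function, a misalignment (for example, a correct intent with stale retrieval) can be traced to one component instead of blamed on "the model".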

Intent Detection That Actually Works in Production

Intent detection isn’t just classification. It’s reconstructing the customer’s underlying task.

Broad labels like “billing” or “technical issue” aren’t enough to drive correct workflows. Operational intent must be specific enough to determine what should happen next.

A practical structure includes three passes:

- Surface signals: wording, entities, sentiment

- Conversation state: what’s already been attempted

- Operational intent: which workflow should trigger, under which constraints

Some intent ambiguity is unavoidable. Requests evolve across turns, so systems must detect when intent becomes unstable.

High instability should trigger:

- clarifying questions

- tighter data requirements

- earlier human handoff
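Instability can be measured cheaply from the per-turn intent labels. The sketch below uses one simple proxy, the fraction of consecutive turns where the top predicted intent changed; a production system might compare full probability distributions instead. The 0.5 threshold and the action names are hypothetical and map onto the three responses listed above.

```python
def intent_instability(turn_intents: list[str]) -> float:
    """Fraction of consecutive turns where the top predicted intent changed."""
    if len(turn_intents) < 2:
        return 0.0
    changes = sum(1 for prev, cur in zip(turn_intents, turn_intents[1:]) if prev != cur)
    return changes / (len(turn_intents) - 1)


def next_steps(turn_intents: list[str], threshold: float = 0.5) -> list[str]:
    """Choose follow-up actions; the threshold and action names are illustrative."""
    if not turn_intents:
        return ["ask_clarifying_question"]
    if intent_instability(turn_intents) >= threshold:
        return [
            "ask_clarifying_question",  # clarify before acting
            "require_account_id",       # tighter data requirements
            "offer_human_handoff",      # earlier human handoff
        ]
    # Stable intent: continue the workflow for the most recent intent.
    return ["continue_workflow:" + turn_intents[-1]]
```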

Production success is best measured by downstream outcomes, not offline accuracy.

Continuous Learning Through Feedback Loops

The biggest advantage of AI in support isn’t automation—it’s learning at scale. Strong systems capture three feedback channels:

- Interaction feedback from customers

- Operator feedback from agent edits and overrides

- Outcome feedback from recontacts, refunds, or churn signals

Each feeds a different layer of improvement.

Without storing full interaction traces and model decisions, teams lose the ability to safely improve. With them, every conversation becomes potential training and evaluation data.

This is how AI support agents improve over time instead of drifting out of alignment.
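A trace only helps if it keeps the conversation, the decisions, and all three feedback channels together. The sketch below is a minimal, hypothetical record format (`Trace` and its field names are illustrations, not a standard); it rejects unknown feedback channels and serializes to one JSON line so traces can be appended to a log and replayed later.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Trace:
    conversation_id: str
    messages: list[dict] = field(default_factory=list)   # full interaction, turn by turn
    decisions: list[dict] = field(default_factory=list)  # what the model decided, and why
    feedback: dict[str, list] = field(default_factory=lambda: {
        "interaction": [],  # customer ratings and comments
        "operator": [],     # agent edits and overrides
        "outcome": [],      # recontacts, refunds, churn signals
    })

    def add_feedback(self, channel: str, item: dict) -> None:
        if channel not in self.feedback:
            raise ValueError(f"unknown feedback channel: {channel}")
        self.feedback[channel].append(item)

    def to_jsonl(self) -> str:
        """One JSON line per trace, suitable for an append-only log."""
        return json.dumps(asdict(self), sort_keys=True)


trace = Trace("conv-123")
trace.decisions.append({"turn": 1, "intent": "refund_status", "action": "answer"})
trace.add_feedback("operator", {"turn": 1, "edit": "corrected refund timeline"})
```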

Implementing AI Across the Support Journey

AI should be wired into the entire support journey, not just live chat. A useful way to structure implementation is across three stages:

Pre-contact

Reduce demand before a ticket exists.

- smarter search

- intent-aware help content

- guided flows that capture required context

In-session

Resolve issues safely during the interaction.

- retrieval grounded in live policy

- clarifying questions when context is missing

- controlled escalation when risk is high

Post-contact

Increase capacity after the interaction.

- automatic intent and outcome labeling

- structured summaries for agents

- feeding resolved cases into knowledge updates

Deflection quality emerges from the full journey, not from isolated answers.

Knowledge Management as the Real Bottleneck

Most AI support failures aren’t model problems. They’re knowledge problems.

If pricing changes, policy updates, or feature launches don’t propagate quickly, trust erodes—regardless of how good the AI sounds.

Effective systems separate knowledge into two lanes:

- Reference content for audited, slow-changing material

- Delta content for fast updates from releases and incidents

Each lane needs its own ownership, review process, and rollback controls.

The goal is alignment with live operations, not static documentation.
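The two lanes can be modeled as separate version histories. The sketch below is a minimal illustration with hypothetical names; it assumes that delta content, when present, should take precedence over reference content for the same key, and it leaves ownership and review workflows out. Rolling back a delta entry makes the audited reference version visible again.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class KnowledgeStore:
    """Two lanes, each keeping a version history per key (newest last)."""
    lanes: dict[str, dict[str, list[str]]] = field(
        default_factory=lambda: {"reference": {}, "delta": {}}
    )

    def publish(self, lane: str, key: str, text: str) -> None:
        self._lane(lane).setdefault(key, []).append(text)

    def rollback(self, lane: str, key: str) -> None:
        entries = self._lane(lane)
        history = entries.get(key)
        if not history:
            raise KeyError(f"nothing to roll back for {key!r} in {lane}")
        history.pop()  # drop the newest version
        if not history:
            del entries[key]

    def lookup(self, key: str) -> Optional[str]:
        # Assumption: fast delta updates (releases, incidents) override slower reference content.
        for lane in ("delta", "reference"):
            history = self.lanes[lane].get(key)
            if history:
                return history[-1]
        return None

    def _lane(self, lane: str) -> dict[str, list[str]]:
        if lane not in self.lanes:
            raise ValueError(f"unknown lane: {lane}")
        return self.lanes[lane]


store = KnowledgeStore()
store.publish("reference", "refund_policy", "Refunds are available within 30 days.")
store.publish("delta", "refund_policy", "Refunds are delayed during the current incident.")
```

After the incident, `store.rollback("delta", "refund_policy")` restores the reference answer without touching the audited lane.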

Intelligent Routing and Safe Escalation

Routing isn’t a one-time decision. It’s a live optimization problem.

Every turn updates a routing state that blends:

- ambiguity

- operational risk

- effort required to resolve

Automation should only act within clearly defined boundaries. When risk rises, the system should shift from resolution to co-piloting or escalation. The handoff matters as much as the decision.
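A routing state that updates every turn can be sketched as three running signals. Everything numeric here is a hypothetical choice: signals are scaled to 0..1, ambiguity and effort are blended with an exponential moving average (`alpha`), risk is treated as sticky (it never decays within a conversation), and the thresholds that map the state to automate, co-pilot, or escalate are illustrative, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class RoutingState:
    ambiguity: float = 0.0  # 0..1, e.g. from intent instability
    risk: float = 0.0       # 0..1, e.g. money movement or account security
    effort: float = 0.0     # 0..1, estimated steps or tools needed to resolve

    def update(self, ambiguity: float, risk: float, effort: float, alpha: float = 0.5) -> None:
        """Blend this turn's signals into the running state."""
        self.ambiguity = alpha * ambiguity + (1 - alpha) * self.ambiguity
        self.risk = max(self.risk, risk)  # once risky, stay cautious for the conversation
        self.effort = alpha * effort + (1 - alpha) * self.effort

    def mode(self) -> str:
        """Map the state to a behavior; thresholds are illustrative."""
        if self.risk >= 0.7:
            return "escalate"
        if self.risk >= 0.4 or 0.5 * self.ambiguity + 0.5 * self.effort >= 0.6:
            return "copilot"  # AI drafts, a human approves
        return "automate"


state = RoutingState()
state.update(ambiguity=0.1, risk=0.1, effort=0.2)  # simple question: automate
state.update(ambiguity=0.2, risk=0.8, effort=0.2)  # request touches payments: escalate
```

Making risk sticky is a deliberate asymmetry: a conversation that briefly touched a high-risk action should not drift back into full automation just because later turns look routine.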

Passing structured context—what was detected, what was tried, and why—ensures humans can resolve issues without restarting the conversation.
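The handoff payload can be as simple as a small structured record. The sketch below uses hypothetical names (`Handoff`, `to_ticket_note`) and captures the three items the text names (what was detected, what was tried, and why the AI escalated) plus any verified facts, rendered both as a human-readable note and as JSON for downstream tools.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Handoff:
    detected_intent: str
    confidence: float
    attempted: list[str] = field(default_factory=list)  # what was already tried
    reason: str = ""                                     # why the AI is escalating
    customer_facts: dict = field(default_factory=dict)  # verified context gathered so far

    def to_ticket_note(self) -> str:
        """Human-readable summary so the agent does not restart the conversation."""
        lines = [
            f"Intent: {self.detected_intent} (confidence {self.confidence:.2f})",
            "Tried: " + ("; ".join(self.attempted) or "nothing yet"),
            f"Escalation reason: {self.reason or 'unspecified'}",
        ]
        for key, value in sorted(self.customer_facts.items()):
            lines.append(f"{key}: {value}")
        return "\n".join(lines)

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


handoff = Handoff(
    detected_intent="duplicate_charge",
    confidence=0.82,
    attempted=["Sent refund policy article", "Checked last two invoices"],
    reason="Refund above automated limit",
    customer_facts={"plan": "Pro"},
)
```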

How AI Support Agents Improve CSAT

Customer satisfaction improves when AI behaves like a continuity engine.

That means:

- remembering prior context

- avoiding repetition

- escalating at the right moment

- helping humans start from understanding

Well-timed escalation often improves CSAT more than over-automation. The goal isn’t maximum containment—it’s durable resolution.

Measuring What Actually Matters

Success isn’t measured inside the chat window alone.

Effective teams track:

- where the interaction started

- how it ended

- whether the customer came back

- what changed downstream

Separating deflection, containment, and resolution prevents misleading metrics and enables better tuning.
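The three metrics diverge once they are computed from the same sessions. The sketch below uses one reasonable set of definitions, which are assumptions rather than industry standards: deflection means the session never became a ticket, containment means no human took part, and resolution means the customer confirmed and did not recontact. The `Session` fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Session:
    started_in: str           # where the interaction started, kept for slicing by entry point
    ticket_created: bool      # did it become a case?
    human_involved: bool      # did a human take part?
    confirmed_resolved: bool  # did the customer confirm the fix?
    recontacted: bool         # came back for the same issue within the chosen window


def support_metrics(sessions: list[Session]) -> dict[str, float]:
    n = len(sessions)
    if n == 0:
        return {"deflection": 0.0, "containment": 0.0, "resolution": 0.0}
    return {
        # Deflection: never became a ticket.
        "deflection": sum(not s.ticket_created for s in sessions) / n,
        # Containment: handled without a human, regardless of outcome.
        "containment": sum(not s.human_involved for s in sessions) / n,
        # Resolution: confirmed and not followed by a recontact.
        "resolution": sum(s.confirmed_resolved and not s.recontacted for s in sessions) / n,
    }


sessions = [
    Session("help_center", False, False, True, False),  # deflected, contained, resolved
    Session("chat", False, False, False, True),         # contained but came back
    Session("chat", True, True, True, False),           # resolved by a human via ticket
    Session("email", True, True, False, True),          # unresolved
    Session("chat", False, True, True, False),          # human helped in chat, no ticket
]
metrics = support_metrics(sessions)
```

Here deflection and resolution are both 0.6 while containment is 0.4, and the second session counts toward deflection and containment even though the customer came back. Reporting only one of these numbers would hide that.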

When AI support agents are measured on resolution quality—not just volume reduction—they become a long-term asset instead of a short-term cost play.

What AI Support Agent Would You Build First?

As AI support agents become part of everyday operations, they increasingly reflect how teams think about leverage.

The question isn’t whether you’ll use AI in support. It’s which AI employee you’ll design first—and how clearly you define its role.

Teams that think like builders—designing systems instead of deploying tools—will scale faster, protect CSAT, and create support organizations that grow with the business instead of against it.

FAQ

What is an AI support agent?

A purpose-built AI system designed to handle specific support functions with defined boundaries, data access, and success metrics.

Do AI support agents replace human teams?

No. They reduce repetitive work and improve context so humans can focus on complex or high-risk issues.

What data does an AI support agent need?

Customer context, product configuration, policy knowledge, historical tickets, and live operational signals.

How do you measure success?

Through verified resolution, recontact rates, sentiment change, and downstream business outcomes—not just ticket volume.