The content creation workflow most teams follow is broken. It’s an unscalable bottleneck that relies on manual brainstorming, anecdotal feedback, and basic competitor analysis. This ad-hoc process is disconnected from the real-time pulse of your audience, resulting in a content strategy that guesses what users need instead of systematically solving their stated problems.

To win, you must move from a creative, “artisanal” model to a data-driven, systematic model of content manufacturing.

TL;DR

Stop relying on manual brainstorming. Fix your content creation workflow by building an automated engine that turns audience demand into finished assets: scrape Reddit and YouTube questions, process them with Make or n8n, centralize them in Airtable or Notion, and use a CustomGPT.ai bot to turn comments into structured Voice of Customer (VoC) insights, so every asset answers a validated problem.

Step 1: How do you find real audience questions?

The foundation of this system is its ability to systematically capture high-quality, raw intelligence from the digital spaces where your target audiences gather. This provides your content team with a dedicated “Voice of Customer” data stream, grounding your strategy in real-world demand.

How to Find High-Intent Questions on Reddit

You must stop viewing Reddit as a social network and start seeing it as the world’s largest collection of asynchronous focus groups. Its structure of topic-specific communities (subreddits) provides an unparalleled opportunity to observe unfiltered conversations about professional challenges, product frustrations, and unarticulated needs.

Our objective is to systematically extract high-intent signals that indicate a clear need for content.

- Discover Communities: Don’t rely on Reddit’s native search; Google’s index is usually a better discovery tool. Use search queries like “[your topic]” site:reddit.com to uncover a wide range of relevant subreddits.

- Identify Pain Points: Once you have a curated list of subreddits, search within those communities for phrases that signal a problem. A robust list includes: “how do you,” “how can I,” “I’m struggling with,” “biggest challenge,” and “tips.” This surfaces a wealth of topics rooted in genuine user need.

- Analyze the Comments: This is the most critical step. The original post is the question, but the comments section is a goldmine of secondary intelligence. It reveals solutions others have tried and failed, follow-up questions that reveal deeper layers of the problem, and alternative perspectives. Your content must address this nuance to be truly comprehensive.
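If you export posts from the subreddits you found (for example through Reddit’s JSON listings or a scraper), the phrase search above is easy to automate. The sketch below is a minimal illustration, assuming each post is a dict with Reddit’s `title` and `selftext` fields; the helper names are hypothetical, and plain phrase matching will miss questions that are worded differently.

```python
import re

# Problem-signalling phrases from the list above; extend with your own.
PAIN_PHRASES = ["how do you", "how can i", "i'm struggling with", "biggest challenge", "tips"]


def discovery_query(topic: str) -> str:
    """Build the Google query used to discover subreddits about a topic."""
    return f'"{topic}" site:reddit.com'


def matched_phrases(text: str, phrases: list[str] = PAIN_PHRASES) -> list[str]:
    """Return the pain-point phrases that occur as whole words in the text."""
    normalized = text.lower().replace("\u2019", "'")  # treat curly apostrophes as straight ones
    return [p for p in phrases if re.search(rf"\b{re.escape(p)}\b", normalized)]


def high_intent_posts(posts: list[dict]) -> list[dict]:
    """Keep posts whose title or body contains at least one pain-point phrase."""
    flagged = []
    for post in posts:
        text = f"{post.get('title', '')} {post.get('selftext', '')}"
        hits = matched_phrases(text)
        if hits:
            flagged.append({**post, "signals": hits})  # record which phrases matched
    return flagged
```

For example, `discovery_query("cold email")` returns `"cold email" site:reddit.com`, ready to paste into Google. The word-boundary check keeps “tips” from matching words like “tipsy”.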

How to Analyze YouTube for Content Gaps

YouTube serves as a dual-source asset. It provides a direct view into your competitors’ strategies and a high-volume channel for direct audience feedback via the comments.

- Transcript Analysis: To understand what competitors are covering, you must analyze their content structure. Use tools like youtube-transcript.io or scalable scraping platforms like Apify to get the full text transcript of any top-performing video. This transcript is a blueprint of their talking points and terminology. By analyzing the top 3-5 videos for a target keyword, you can construct a “super outline” that identifies common themes and lays the groundwork for a more comprehensive piece.

- Comment Analysis: The comments on a popular video reveal what the creator failed to address. Viewers ask clarifying questions, point out exceptions, or request follow-up content on a related sub-topic, which makes this feedback a rich source of ideas for “sequel” content. Manually reading thousands of comments is impractical, so use the YouTube Data API or analysis tools to programmatically categorize comments, extract recurring themes, and identify the most common unanswered questions.
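Once you have pulled comments (for example via the YouTube Data API’s `commentThreads` resource), a first pass can run locally. The sketch below assumes the comments are already a list of plain strings; the question heuristic and stopword list are illustrative choices rather than a standard, and LLM or embedding-based clustering will group paraphrased questions far better.

```python
import re
from collections import Counter

# Words that usually start a question, plus filler words to ignore when counting themes.
QUESTION_WORDS = {"how", "what", "why", "can", "could", "does", "do", "is", "should", "where", "when", "which"}
STOPWORDS = QUESTION_WORDS | {
    "the", "a", "an", "to", "of", "and", "or", "in", "on", "for", "it", "i", "you",
    "this", "that", "with", "my", "your", "be", "are", "about", "video",
}


def is_question(comment: str) -> bool:
    """Heuristic: the comment contains a '?' or starts with a question word."""
    words = re.findall(r"[a-z']+", comment.lower())
    return "?" in comment or (bool(words) and words[0] in QUESTION_WORDS)


def question_themes(comments: list[str], top_n: int = 5) -> tuple[list[str], list[tuple[str, int]]]:
    """Return the question-like comments and the terms that recur most across them."""
    questions = [c for c in comments if is_question(c)]
    terms = Counter()
    for q in questions:
        tokens = set(re.findall(r"[a-z0-9']+", q.lower())) - STOPWORDS
        terms.update(tokens)  # each term counts at most once per comment
    return questions, terms.most_common(top_n)
```

Terms that recur across many question-like comments are candidates for the “unanswered questions” your sequel content should cover.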

Step 2: How do you automate idea processing?

Once you know where to “listen,” you need an automation backbone to process the signals. This layer is the technical core of your system, responsible for ingesting, enriching, and routing data to your content hub.

Make vs n8n: which should you choose?

While tools like Zapier are user-friendly, their “per-task” pricing model becomes prohibitively expensive for a high-volume workflow that processes thousands of Reddit posts or YouTube comments. The more viable, cost-effective options for data-intensive operations are Make.com and n8n.io.

- Make.com: Provides a powerful, visual “canvas-style” interface ideal for low-code enthusiasts. Its pricing is based on “operations” (each step a module performs), which is generally more economical than Zapier for complex scenarios.

- n8n.io: This is the power-user choice for maximum control and flexibility. It is a source-available, “fair-code” platform with a node-based interface. Its key advantages are:

- Custom Code: Allows you to run custom JavaScript or Python in any workflow for sophisticated data manipulation.

- Self-Hosting: You can fully self-host it via Docker or on a VPS, giving you complete data sovereignty, control over security, and lower costs.

- Cost: Uses a “per-workflow execution” pricing model. A single run that processes 1,000 comments still counts as one execution, making it exceptionally cost-effective for high-volume data processing.
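To see why the pricing model matters, here is a simplified back-of-the-envelope comparison. It assumes Make charges one operation for each trigger run plus one operation per module for every item processed, and that n8n counts one execution per workflow run; real bills depend on your plan and each vendor’s current pricing page, so treat this as a sketch.

```python
def make_operations(runs: int, items_per_run: int, modules_per_item: int) -> int:
    """Simplified Make.com model: 1 operation for the trigger on each run,
    plus 1 operation per module for every item (bundle) that passes through it."""
    return runs * (1 + items_per_run * modules_per_item)


def n8n_executions(runs: int) -> int:
    """n8n counts one execution per workflow run, however many items it processes."""
    return runs


# Example: a daily run for 30 days, 1,000 comments per run, 3 processing steps per comment.
print(make_operations(30, 1000, 3))  # 90030
print(n8n_executions(30))            # 30
```

Under these assumptions, the same month of work is roughly 90,000 Make operations versus 30 n8n executions, which is the gap the pricing comparison above describes.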

Option 1: The Visual Workflow (Using Make.com)

This blueprint uses Make.com’s visual interface for a “no-headaches” setup.

- Set Up Triggers:

- Reddit: Use the Reddit > Watch Posts in Subreddit module. Add a filter after the trigger so that only posts containing keywords like “help,” “advice,” or “struggle” continue through the scenario.

- YouTube: Use the YouTube > Watch Videos in a Channel module to monitor a competitor’s channel.

- Enrich the Data (YouTube): When the YouTube trigger fires, add an HTTP > Make a request module. Configure it to call a transcript service API (like youtube-transcript.io), passing the Video ID from the trigger module to get the full transcript.

- Optional AI Processing: Add the OpenAI (ChatGPT) > Create a Completion module.

- Reddit Prompt: “Summarize the following user’s problem in one sentence:”

- YouTube Prompt: “Provide a concise, bulleted summary of the key talking points from the following video transcript:”

- Route Data to Content Hub:

- Airtable: Add the Airtable > Create a Record module. Map the data (e.g., Post Title, Source URL, AI Summary) to the fields in your “Raw Signals” table.

- Notion: Add the Notion > Create a Database Item module and map the data to your “Content Pipeline” database properties.

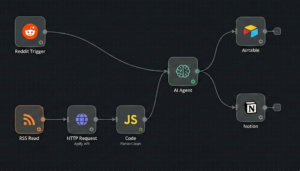
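Make’s Airtable module handles the record creation for you, but it helps to know what the mapping amounts to, for example if you later replace it with a plain HTTP module. The sketch below maps a signal onto the Raw Signals fields from Step 3 and builds (without sending) a request to Airtable’s REST API. The base ID, token, and helper names are placeholders for illustration.

```python
import json
import urllib.parse
import urllib.request

AIRTABLE_API = "https://api.airtable.com/v0"


def raw_signal_fields(title: str, url: str, source_type: str, raw_text: str) -> dict:
    """Map one scraped signal onto the Raw Signals fields from Step 3."""
    if source_type not in ("Reddit", "YouTube"):
        raise ValueError(f"unexpected source type: {source_type}")
    return {
        "Signal Title": title,
        "Source URL": url,
        "Source Type": source_type,
        "Raw Text": raw_text,
        "Status": "New",  # every automated signal starts as New
    }


def build_create_request(base_id: str, table: str, token: str, fields: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that creates one Airtable record."""
    body = json.dumps({"records": [{"fields": fields}]}).encode("utf-8")
    return urllib.request.Request(
        f"{AIRTABLE_API}/{base_id}/{urllib.parse.quote(table)}",
        data=body,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Sending it is a single `urllib.request.urlopen(req)` call once you substitute a real base ID and a Personal Access Token with the `data.records:write` scope.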

Option 2: The Power-User Workflow (Using n8n.io)

This more technical blueprint trades some setup effort for a more powerful, reliable, and customizable system.

- Set Up Triggers:

- Reddit: Pair a Schedule Trigger node with n8n’s Reddit node to poll a subreddit for new posts at a fixed interval.

- YouTube: Use the RSS Feed Trigger node (or a Schedule Trigger followed by the RSS Read node) pointed at a channel’s RSS feed URL (https://www.youtube.com/feeds/videos.xml?channel_id=[ID]) to trigger on new uploads.

- Robust Data Scraping: To scrape YouTube transcripts at scale, add an HTTP Request node configured to call the Apify API and run a YouTube transcript scraper Actor. This is typically more reliable than calling a single transcript service, because Apify handles proxies and anti-blocking measures for you.

- Advanced Data Transformation: Add a Code node. Here, you can write JavaScript to parse the JSON output from Apify, clean the transcript text by removing timestamps, or even count keyword frequency.

- Advanced AI Enrichment: Go beyond summarization by adding an AI Agent node with a multi-step prompt that turns this stage into an “agentic workflow”:

- Prompt: “Analyze the following YouTube comments and transcript. Identify the top 3 most frequently asked questions that were NOT answered in the video. For each question, suggest a potential blog post title.”

- Route Data to Content Hub (Securely):

- Airtable: Add the Airtable node. When configuring credentials, use the more secure Personal Access Token (PAT) method, specifying scopes like data.records:write. Map the enriched data (like the AI-suggested titles) to your “Vetted Ideas” table.

- Notion: Add the Notion node to create a new page in your database, populating the page body with the AI-generated outline.
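The transformation steps in this blueprint can be prototyped outside n8n before you move them into a Code node (which accepts JavaScript or Python). The sketch below reads video IDs from a channel’s RSS feed, strips timestamps from transcript segments, and counts keywords. It assumes the transcript arrives as a list of segments with a `text` key; that shape is hypothetical, since each Apify Actor defines its own output, so adjust the field names to match yours.

```python
import re
import xml.etree.ElementTree as ET
from collections import Counter

# Namespaces used by YouTube's Atom feeds.
NS = {"atom": "http://www.w3.org/2005/Atom", "yt": "http://www.youtube.com/xml/schemas/2015"}
# Matches timestamps such as "0:05", "12:30" or "[00:01:23]".
TIMESTAMP = re.compile(r"\[?\b\d{1,2}:\d{2}(?::\d{2})?\]?")


def feed_url(channel_id: str) -> str:
    return f"https://www.youtube.com/feeds/videos.xml?channel_id={channel_id}"


def video_ids_from_feed(xml_text: str) -> list[str]:
    """Extract the video ID of every entry in a channel's RSS (Atom) feed."""
    root = ET.fromstring(xml_text)
    return [entry.findtext("yt:videoId", namespaces=NS) for entry in root.findall("atom:entry", NS)]


def clean_transcript(segments: list[dict]) -> str:
    """Join transcript segments into one string with timestamps and extra whitespace removed."""
    text = " ".join(seg.get("text", "") for seg in segments)
    text = TIMESTAMP.sub(" ", text)
    return re.sub(r"\s+", " ", text).strip()


def keyword_frequency(text: str, keywords: list[str]) -> dict[str, int]:
    """Count whole-word occurrences of each keyword (case-insensitive)."""
    tokens = Counter(re.findall(r"[a-z0-9']+", text.lower()))
    return {k: tokens[k.lower()] for k in keywords}
```

The video IDs feed the transcript request, and the cleaned text plus keyword counts are what you would pass on to the AI Agent node.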

Step 3: Set Up Your Content Hub

The Content Hub is the destination for your processed intelligence. It’s the operational core where raw signals are transformed into fully articulated content briefs, serving as the single source of truth for the entire content team.

Option 1: Using Airtable as a Relational Database

Airtable’s relational structure is ideal for a multi-stage workflow.

- Table 1: Raw Signals: This is the automated ingestion point.

- Fields: Signal Title (Text), Source URL (URL), Source Type (Select: “Reddit”, “YouTube”), Raw Text (Long text), Status (Select: “New”).

- Table 2: Vetted Ideas: Where a strategist reviews and promotes signals.

- Fields: Idea Title (Text), Core Pain Point (Long text), Link to Raw Signal (Link to record), Priority (Select: “High”, “Medium”).

- Table 3: Content Briefs: The final, operational asset.

- Fields: Content Title (Text), Link to Vetted Idea (Link), Target Audience (Long text with rich text formatting), Primary Keyword (Text), Key Messages (Long text with rich text formatting), Writer (Collaborator), Status (Select: “Briefing”, “Writing”, “Review”, “Published”), Published URL (URL).

You can then use Airtable’s native automations to manage the workflow, such as sending a Slack notification to the assigned Writer when the Status changes to “Writing.”
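To make the relationships concrete, the sketch below models the three tables as Python dataclasses, with links as object references and the Slack notification reduced to a returned message. It illustrates the data model only; the class and function names are hypothetical, and in Airtable itself the links are Link-to-record fields and the notification is an Airtable automation.

```python
from dataclasses import dataclass
from typing import Optional

BRIEF_STATUSES = ("Briefing", "Writing", "Review", "Published")


@dataclass
class RawSignal:
    title: str
    source_url: str
    source_type: str  # "Reddit" or "YouTube"
    raw_text: str
    status: str = "New"


@dataclass
class VettedIdea:
    title: str
    core_pain_point: str
    raw_signals: list[RawSignal]  # the "Link to Raw Signal" field
    priority: str = "Medium"


@dataclass
class ContentBrief:
    title: str
    idea: VettedIdea  # the "Link to Vetted Idea" field
    writer: Optional[str] = None
    status: str = "Briefing"


def promote(signal: RawSignal, title: str, pain_point: str, priority: str = "Medium") -> VettedIdea:
    """A strategist promotes a raw signal into a vetted idea that links back to it."""
    return VettedIdea(title, pain_point, [signal], priority)


def advance(brief: ContentBrief, new_status: str) -> Optional[str]:
    """Move a brief to a new status; return a notification message when writing starts."""
    if new_status not in BRIEF_STATUSES:
        raise ValueError(f"unknown status: {new_status}")
    brief.status = new_status
    if new_status == "Writing" and brief.writer:
        return f"{brief.writer}: '{brief.title}' is ready for writing."
    return None
```

Because each brief points to its idea and each idea to its signals, you can always trace a published piece back to the original Reddit post or YouTube comment.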

Option 2: Using Notion for All-in-One Briefs

Notion’s strength is combining structured database properties with free-form page content, making it perfect for comprehensive, document-style briefs.

- Database Structure: Create a single master database named “Content Pipeline.”

- Template Design: The real power comes from creating a database template named “New Content Brief.” Your automation will create new pages using this template.

- Database Properties (Metadata): These are the structured fields:

- Status (Select: “Idea”, “Briefing”, “In Progress”)

- Source URL (URL)

- Writer (Person)

- Due Date (Date)

- Primary Keyword (Text)

- Page Body (The Brief Itself): The template body is pre-formatted with all required briefing sections, ensuring no strategic steps are skipped.

- 🎯 Objective: (What is the primary goal of this piece?)

- 👤 Target Audience: (A detailed profile of the reader and their pain points)

- 🔑 Key Messages & Angle: (The core argument and our unique perspective)

- 📝 Outline / Talking Points: (This can be pre-populated by the automation’s AI summary)

- 🔍 SEO Requirements: (Keywords, meta suggestions)

- 🚀 Call to Action (CTA): (What should the reader do next?)


This system operationalizes strategy. By treating sections like Objective and Target Audience as mandatory, it ensures that foundational strategic questions are answered before writing begins.
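If you create briefs through Notion’s public API rather than a no-code module, the page body sections can be sent as blocks alongside the properties. The sketch below builds the JSON body for a `POST https://api.notion.com/v1/pages` request and refuses to build a brief whose Objective or Target Audience is empty, which is one way to enforce the “mandatory” rule in code. It assumes the database’s title property is named `Name` and pins `Notion-Version: 2022-06-28`; check both against your workspace and Notion’s current API reference.

```python
NOTION_VERSION = "2022-06-28"  # assumed API version; check Notion's docs

# Brief sections from the template above; the first two are treated as mandatory.
BRIEF_SECTIONS = [
    "🎯 Objective",
    "👤 Target Audience",
    "🔑 Key Messages & Angle",
    "📝 Outline / Talking Points",
    "🔍 SEO Requirements",
    "🚀 Call to Action (CTA)",
]
REQUIRED_SECTIONS = BRIEF_SECTIONS[:2]


def rich_text(content: str) -> list[dict]:
    """Notion rich-text array holding one plain text run (empty if there is no content)."""
    return [{"type": "text", "text": {"content": content}}] if content else []


def notion_headers(token: str) -> dict:
    return {"Authorization": f"Bearer {token}", "Notion-Version": NOTION_VERSION, "Content-Type": "application/json"}


def brief_page(database_id: str, title: str, source_url: str, keyword: str, sections: dict) -> dict:
    """Build the JSON body for creating a brief page in the Content Pipeline database."""
    missing = [s for s in REQUIRED_SECTIONS if not sections.get(s, "").strip()]
    if missing:
        raise ValueError(f"missing required sections: {missing}")
    children = []
    for heading in BRIEF_SECTIONS:
        children.append({"object": "block", "type": "heading_2", "heading_2": {"rich_text": rich_text(heading)}})
        children.append({"object": "block", "type": "paragraph",
                         "paragraph": {"rich_text": rich_text(sections.get(heading, ""))}})
    return {
        "parent": {"database_id": database_id},
        "properties": {
            "Name": {"title": rich_text(title)},  # assumed title property name
            "Status": {"select": {"name": "Idea"}},
            "Source URL": {"url": source_url},
            "Primary Keyword": {"rich_text": rich_text(keyword)},
        },
        "children": children,
    }
```

The Outline section is where your automation’s AI summary would go; leaving a non-mandatory section empty simply produces an empty paragraph under its heading.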

Create an ‘Intelligence Bot’ to Analyze Your Raw Signals

Your “Raw Signals” table is a goldmine, but it will quickly fill with thousands of entries. Manually reading them to find themes becomes the new bottleneck. This is where a no-code chatbot platform like CustomGPT.ai becomes a powerful analysis layer.

- How it works: Create a new bot and train it only on your “Raw Signals” database (e.g., by connecting it directly to your Airtable or Notion table).

- What it does: This bot becomes an interactive “Voice of Customer” expert. Your content team can now ask it strategic questions in plain English:

- “What are the top 5 pain points mentioned by users on Reddit this week?”

- “Summarize the most common complaints about [competitor’s product].”

- “Find all signals related to ‘pricing’ and ‘frustration’.”

This doesn’t replace your automation; it becomes the intelligent interface on top of the data your engine collects, allowing you to spot trends without manual sifting.
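A chatbot answers these questions in natural language, so it is worth having a deterministic cross-check for the narrower ones. The sketch below is a plain keyword-and-date filter, not how CustomGPT.ai works internally; it assumes each signal is a dict with a `raw_text` string and a `created` datetime, which are hypothetical field names for your export.

```python
from datetime import datetime, timedelta
from typing import Optional


def signals_matching(signals: list[dict], terms: list[str], since: Optional[datetime] = None) -> list[dict]:
    """Return signals whose raw text mentions every term (case-insensitive),
    optionally limited to signals created on or after `since`."""
    wanted = [t.lower() for t in terms]
    results = []
    for signal in signals:
        if since is not None and signal["created"] < since:
            continue  # outside the time window
        text = signal["raw_text"].lower()
        if all(term in text for term in wanted):
            results.append(signal)
    return results


# "Find all signals related to 'pricing' and 'frustration'" from the last 7 days:
# signals_matching(signals, ["pricing", "frustration"], since=datetime.now() - timedelta(days=7))
```

Substring matching is deliberately simple: “frustration” will not match “frustrating”, which is exactly the kind of nuance the bot handles better.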

Step 4: How to Optimize and Scale Your New Engine

This is not a “set it and forget it” tool; it’s a dynamic system that must be optimized.

How to “Close the Loop” with Performance Data

To learn which inputs actually produce results, you must “close the loop.” Add performance metrics back into your Content Briefs table (Airtable) or Content Pipeline database (Notion).

- Fields to Add: Published URL, 30-Day Pageviews, Keyword Rank, Conversions.

By linking this performance data back to the original entry in your Raw Signals table, you can analyze which sources generate the most successful content. You might discover that one specific subreddit consistently yields high-traffic articles or that questions from a certain competitor’s YouTube channel lead to high-converting content. This feedback loop allows you to refine your “listening” strategy, focusing your resources on the highest-potential sources.
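Once each brief carries its source and performance fields, the analysis is a simple group-by. The sketch assumes you have exported briefs as dicts with hypothetical keys `source` (the subreddit or channel behind the original Raw Signal), `pageviews_30d`, and `conversions`.

```python
from collections import defaultdict


def performance_by_source(briefs: list[dict]) -> list[tuple[str, float, int]]:
    """Return (source, average 30-day pageviews, total conversions), best average first."""
    totals = defaultdict(lambda: {"pieces": 0, "pageviews": 0, "conversions": 0})
    for brief in briefs:
        t = totals[brief["source"]]
        t["pieces"] += 1
        t["pageviews"] += brief["pageviews_30d"]
        t["conversions"] += brief["conversions"]
    rows = [(source, t["pageviews"] / t["pieces"], t["conversions"]) for source, t in totals.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)
```

Averaging per piece keeps a prolific source from looking better simply because it produced more articles; sort by conversions instead if revenue matters more than traffic.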

Activate Your Engine with an ‘Internal Content Bot’

Your engine’s job is to create a massive library of high-value, published content. The next challenge is making that library accessible to your entire organization. An internal-facing chatbot is the most efficient solution.

- How it works: Use a platform like CustomGPT.ai to build a second bot. This time, train it on your final, published content (e.g., by giving it your blog’s sitemap or your “Content Briefs” database).

- What it does: This bot becomes your team’s “single source of truth” for everything you have published.

- Sales Team: “What are our three best talking points for a customer struggling with [X pain point]?”

- Marketing Team: “Pull the key messages and target audience from our ‘Automated Content Engine’ guide.”

- Support Team: “What is the step-by-step process we recommend for [Y task]?”

This system ‘closes the loop’ by not only creating content but also activating it, ensuring your high-value assets are used daily to drive business goals.
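Before handing a sitemap to the bot, it helps to check exactly which URLs it contains and, if your bot platform accepts a URL list, to keep only published articles. The sketch below parses a standard sitemap (the sitemaps.org 0.9 namespace) and filters by a path fragment such as `/blog/`; it does not follow sitemap index files, and the filter value is an assumption about your URL structure.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str, must_contain: str = "") -> list[str]:
    """Return the <loc> URLs from a sitemap, keeping only those containing `must_contain`."""
    root = ET.fromstring(xml_text)
    urls = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]
    return [u for u in urls if must_contain in u]
```

With the default empty filter, every URL in the sitemap is returned.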

Advanced AI: From Summarization to Generation

Evolve your use of AI beyond simple summarization. Using the agentic capabilities of n8n.io or Airtable AI, you can build more advanced workflows. For example, an AI agent could be tasked with analyzing the top 10 comments from a YouTube video and automatically generating a first-draft outline for a response article.
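The input side of such an agent is easy to pin down. The sketch below assumes you have already fetched a response from the YouTube Data API’s `commentThreads.list` method (requested with `textFormat=plainText`, so `textDisplay` holds plain text), picks the ten most-liked top-level comments, and builds a prompt. The prompt wording and function names are illustrative, and sending the prompt to a model is left to your n8n or Airtable AI step.

```python
def top_comments(comment_threads: dict, n: int = 10) -> list[str]:
    """Pick the n most-liked top-level comments from a commentThreads.list response."""
    snippets = [item["snippet"]["topLevelComment"]["snippet"] for item in comment_threads.get("items", [])]
    snippets.sort(key=lambda s: s.get("likeCount", 0), reverse=True)
    return [s["textDisplay"] for s in snippets[:n]]


def outline_prompt(video_title: str, comments: list[str]) -> str:
    """Build a prompt asking an LLM for a first-draft outline of a response article."""
    numbered = "\n".join(f"{i}. {c}" for i, c in enumerate(comments, start=1))
    return (
        f"These are the top viewer comments on the video \"{video_title}\".\n"
        f"{numbered}\n\n"
        "Draft an outline for an article that answers the questions and gaps these comments raise."
    )
```

Ranking by likes is one reasonable proxy for “top” comments; the API’s own relevance ordering is another.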

Operational Reality: Managing Costs and Scale

As you scale, you must manage the practical realities of this system.

- Costs: Monitor your automation budget. The choice between Make.com’s per-operation model and n8n.io’s per-execution model becomes critical at high volume.

- Rate Limits: High-frequency API calls can hit rate limits. You may need to build “wait” modules or error handling into your automation logic to ensure smooth operation.

- Process: Define clear roles. A content strategist should be responsible for vetting the “Raw Signals” and promoting them to “Vetted Ideas,” while another team member may handle the final brief enrichment.
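A common pattern for handling rate limits is retrying with exponential backoff. Both platforms offer built-in options (for example, n8n’s per-node Retry On Fail setting), but if you call APIs from a Code node the logic looks roughly like this. The `RateLimited` exception is a hypothetical convention for “the API answered HTTP 429”; if the response includes a Retry-After header, prefer that value over the computed delay.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical signal that the wrapped API call was rejected with HTTP 429."""


def with_backoff(call: Callable[[], T], max_attempts: int = 5, base_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` on RateLimited, doubling the wait each time and adding random jitter."""
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the workflow
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
    raise RuntimeError("max_attempts must be at least 1")
```

The jitter spreads out retries from parallel runs so they do not all hit the API again at the same moment.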

Why should I move from a creative, “artisanal” content model to an automated, systematic one?

The artisanal model is an unscalable bottleneck that relies on manual brainstorming and guesswork, resulting in a strategy disconnected from the real-time pulse of your audience. To win, you must transition to a data-driven, systematic model of content manufacturing that solves your users’ stated problems.

How do I systematically find “high-intent” questions on Reddit, and what role do the comments play?

Stop viewing Reddit as a social network and see it as the largest collection of asynchronous focus groups. Use Google to discover relevant subreddits (e.g., “[your topic]” site:reddit.com), then search for phrases that signal a problem, such as “I’m struggling with” or “biggest challenge.” The comments section is the most critical step; it’s a goldmine of secondary intelligence that reveals deeper problem layers and necessary nuance your content must address.

Why are Make.com and n8n.io recommended over tools like Zapier for this high-volume workflow?

Zapier’s “per-task” pricing model becomes prohibitively expensive for processing thousands of Reddit posts or YouTube comments. Make.com offers a powerful, visual interface with an economical “per-operation” pricing model. n8n.io is the power-user choice: its source-available (fair-code) platform can be self-hosted, and its “per-workflow execution” pricing model makes it exceptionally cost-effective for high-volume data ingestion and advanced data manipulation.

Conclusion

You now have the complete blueprint for an automated content engine. This framework transforms your content creation workflow from a manual, biased, and unscalable process into a data-driven, systematic engine that manufactures measurable assets.

This system de-risks content creation by ensuring every asset is grounded in validated, documented audience demand. You are no longer guessing what to write; you are building exactly what your audience is already asking for.