Generative AI has moved from experimentation to production in customer support faster than almost any recent technology shift.

Companies are no longer asking if AI should handle support interactions, but how it should be implemented—and at what cost.

A widely cited example is Airbnb, which publicly reported achieving roughly a 15% support ticket deflection rate using AI.

That is a meaningful result by any standard: fewer tickets, faster resolutions, and lower operational load on human agents. But the result alone doesn’t tell the full story.

How that outcome was achieved—and what it implies for most businesses—matters far more than the headline number.

Understanding Generative AI and Its Role in Customer Support

Generative AI is now firmly in production across customer support organizations. What separates successful deployments from disappointing ones is no longer model quality alone, but how data, policies, and workflows are designed around the model.

In early 2024, a large enterprise insurer deployed a generative support assistant connected to its existing knowledge base and CRM systems using retrieval-augmented generation (RAG).

The model itself was not fine-tuned. Instead, it was constrained to retrieve from approved sources and operate within existing support workflows. Within weeks, inbound email volume dropped materially and response times improved without increasing headcount.

The outcome looked like automation. In practice, it was a result of governance and system design. Generative AI in support does not succeed by “answering questions” in isolation.

It succeeds by arbitrating between ticketing rules, CRM state, policy constraints, and fragmented knowledge.

Across real deployments, outcomes correlate less with model size than with institutional discipline: how often knowledge is refreshed, how clearly escalation paths are defined, and whether humans retain authority over sensitive decisions.

Generative AI’s Role in Customer Support

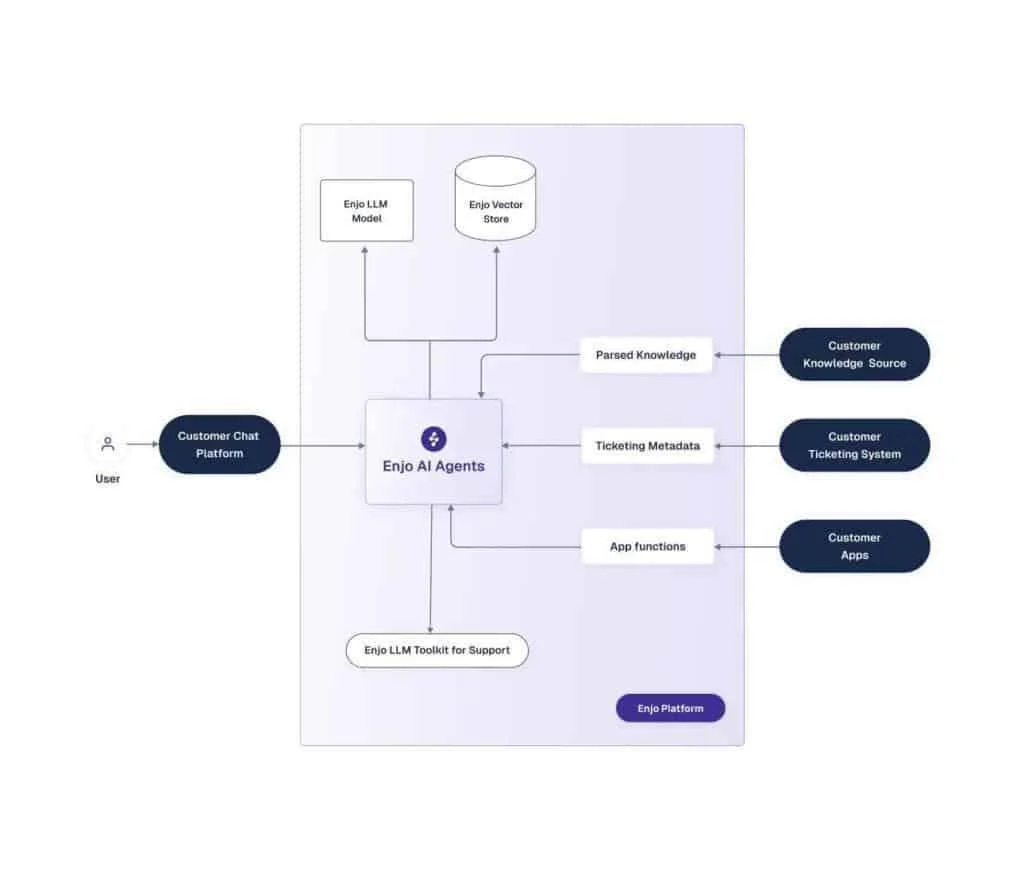

A generative model becomes reliable in support only when retrieval is treated as a routing system, not a search box. The critical behavior is deciding what information is allowed to be retrieved, from where, and under which constraints.

In production environments, naive vector search over FAQs often fails on edge cases. More mature systems use tiered retrieval: short-form FAQs, long-form documentation, and historical ticket data are queried separately and only when policy permits.

This improves precision without changing the underlying model. Strong implementations separate three concerns:

- Intent classification to determine what the user is actually trying to do

- Constraint injection based on policy, entitlement, and region

- Answer shaping that adapts tone and actionability to the channel

When these layers are collapsed into a single prompt, failures are often misattributed to “hallucinations” rather than uncontrolled context. The practical implication is that better outcomes usually come from clearer retrieval boundaries and policy logic, not from switching to a newer foundation model.
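The separation of those three concerns can be sketched as three small functions. This is a minimal illustration, not a reference design: the keyword map, the source names (`faq`, `docs`, `billing_policy`), and the function names are all hypothetical, and a real system would replace the keyword match with a trained classifier.

```python
from dataclasses import dataclass

# Hypothetical keyword map; a production system would use a trained classifier.
INTENT_KEYWORDS = {
    "refund_request": ("refund", "money back"),
    "password_reset": ("password", "log in"),
}


@dataclass(frozen=True)
class Constraints:
    region: str
    plan: str
    allowed_sources: tuple


def classify_intent(message: str) -> str:
    """Layer 1: decide what the user is actually trying to do."""
    text = message.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "unknown"


def build_constraints(intent: str, region: str, plan: str) -> Constraints:
    """Layer 2: derive retrieval limits from policy, entitlement, and region."""
    sources = ["faq"]  # assumed to be safe for every user
    if intent != "unknown":
        sources.append("docs")
    if intent == "refund_request" and plan == "premium":
        sources.append("billing_policy")
    return Constraints(region=region, plan=plan, allowed_sources=tuple(sources))


def shape_answer(draft: str, channel: str) -> str:
    """Layer 3: adapt tone and length to the channel."""
    if channel == "chat":
        # Chat gets only the first sentence of the draft.
        return draft.split(". ")[0].rstrip(".") + "."
    return f"Hello,\n\n{draft}\n\nBest regards,\nSupport"
```

Because each layer is a separate function, a wrong answer can be traced to the layer that produced it (misclassified intent, over-broad constraints, or poor shaping) instead of being logged as a generic hallucination.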

Traditional Customer Support Challenges

The most under-recognized limitation in traditional support is not ticket volume but context fragmentation during a live interaction. Agents routinely operate across multiple systems: ticketing tools, CRM records, billing systems, internal documentation, and messaging platforms.

That fragmentation matters because every missing piece of context forces an agent back into “search mode” instead of “resolution mode,” which quietly kills ticket deflection and drives average handling time up even when headcount is adequate.

The issue is structural, not individual. When conversations move across channels—chat to email, email to phone—context is often lost or partially reconstructed.

Even platforms that support omnichannel workflows are frequently implemented in ways that preserve events, not full conversational episodes. If context is not centralized, AI inherits the same fragmentation.

Without a unified, queryable view of customer state and history, generative systems cannot meaningfully deflect tickets—they simply shift effort elsewhere.

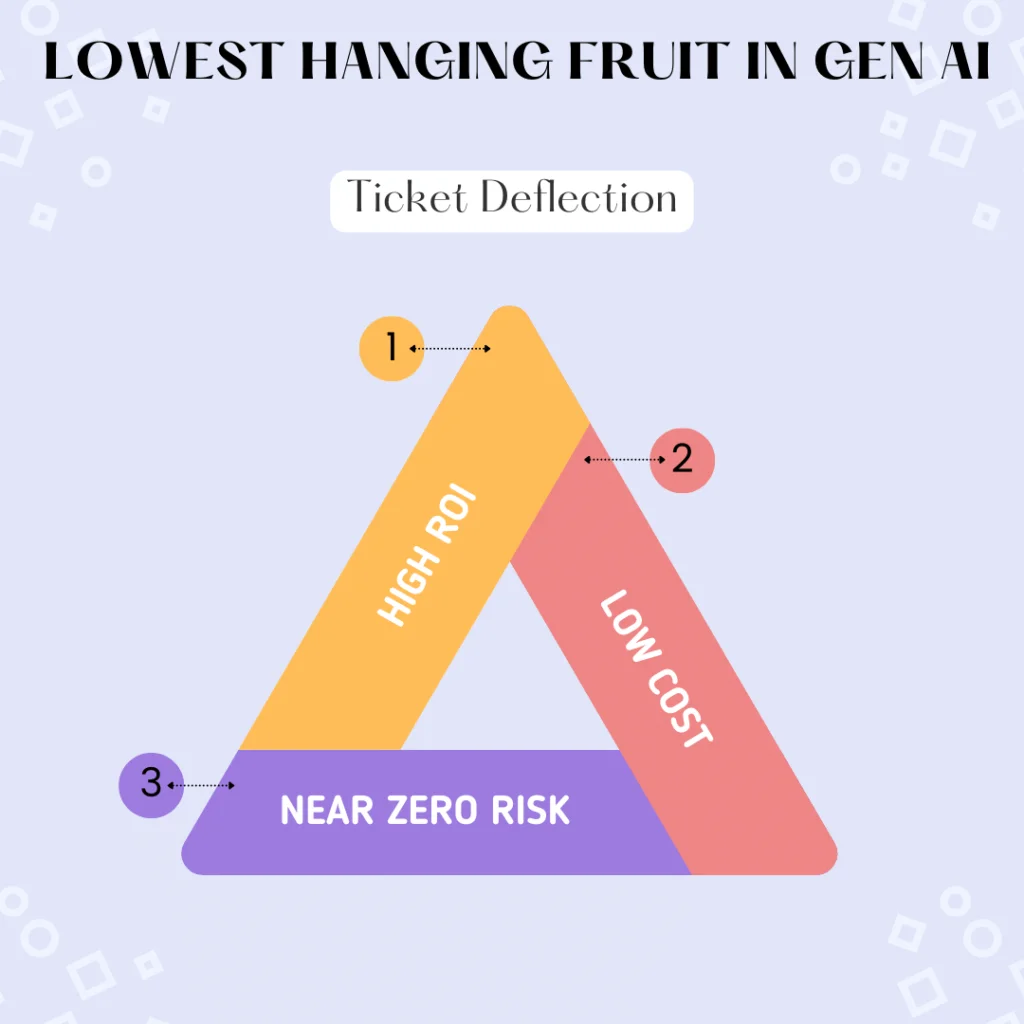

The Hidden Cost of Building AI In-House

Airbnb reportedly built its AI support system internally, relying on a complex stack of multiple models and custom orchestration. For a company of Airbnb’s scale, this approach is understandable. They have deep engineering talent, significant budgets, and unique operational requirements.

However, that path comes with trade-offs that are often underestimated:

- High upfront investment in model selection, infrastructure, and evaluation

- Ongoing maintenance as models, prompts, and policies require constant tuning

- Upgrade pressure every time a new generation of foundation models is released

- Operational complexity as systems grow harder to reason about and debug

What works for a hyperscale company does not automatically translate to mid-market or even large enterprise teams. For most businesses, building and maintaining an AI support stack becomes a permanent distraction rather than a one-time project.

Core Concepts: Deflection, Authority, and Control

In real deployments, deflection must be defined as issue resolution without ticket creation—not simply reduced inbound messages. Many teams now distinguish between superficial self-service and verified resolution, where a downstream action (refund, change, confirmation) actually occurs.

A second critical distinction is system role:

- AI agents: systems with authority to trigger workflows

- Copilots: read-only systems that assist humans

- Automations: deterministic workflows without reasoning

Impact depends less on linguistic quality and more on authority. Systems that cannot update state may sound helpful but rarely move resolution metrics.

Effective support architectures therefore operate as intent–policy–action loops, where intent routes the request, policy constrains what is allowed, and generation explains or executes within those bounds.
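One way to picture the intent–policy–action loop, together with the agent/copilot distinction, is the sketch below. The policy table, intent names, and return strings are illustrative assumptions rather than any product's API.

```python
from enum import Enum


class Role(Enum):
    AGENT = "agent"      # has authority to trigger workflows
    COPILOT = "copilot"  # advisory and read-only


# Hypothetical policy table: intent -> actions that policy allows.
POLICY = {
    "cancel_booking": {"cancel"},
    "refund_request": {"refund"},
}


def handle(intent: str, requested_action: str, role: Role) -> str:
    """Route by intent, constrain by policy, then execute or merely suggest."""
    allowed = POLICY.get(intent, set())
    if requested_action not in allowed:
        # Out-of-policy requests always go to a human.
        return "escalate"
    if role is Role.AGENT:
        return f"execute:{requested_action}"
    # A copilot may explain the action but never performs it.
    return f"suggest:{requested_action}"
```

The same request yields `execute:refund` for an agent and `suggest:refund` for a copilot, which is why authority, more than wording quality, determines whether resolution metrics move.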

Image source: enjo.ai

Terminology Discipline Matters

Ambiguous language creates operational risk. Calling every system an “agent” leads stakeholders to expect actions the system is not authorized to perform. A useful heuristic is simple:

- Call it an agent only if it has system authority

- Call it a copilot if it is advisory and read-only

Clear naming improves security reviews, compliance approvals, and metric interpretation. It also prevents teams from blaming AI when the real issue is mismatched expectations.

Measuring What Actually Matters

Deflection rate, first-contact resolution (FCR), average handling time (AHT), CSAT, and NPS are often tracked independently. This hides trade-offs.

Aggressive automation can inflate deflection while depressing satisfaction if customers recontact through another channel. A more reliable approach conditions all metrics on verified resolution. Instead of asking “Was this session deflected?”, mature teams ask:

- Did this session end with a durable, policy-compliant outcome?

This requires session-level traceability: intent, sources used, policies applied, and downstream events joined back to the original interaction.
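As a rough sketch of what conditioning on verified resolution can look like, the example below compares a raw deflection rate with a verified one. The `Session` fields are assumptions about what a joined session record might contain; real schemas will differ.

```python
from dataclasses import dataclass


@dataclass
class Session:
    session_id: str
    intent: str
    ticket_created: bool
    downstream_action_confirmed: bool  # e.g. the refund was actually issued
    recontacted_within_window: bool    # customer came back through any channel


def raw_deflection_rate(sessions: list) -> float:
    """Share of sessions that ended without a ticket being created."""
    if not sessions:
        return 0.0
    return sum(not s.ticket_created for s in sessions) / len(sessions)


def verified_resolution_rate(sessions: list) -> float:
    """Share of sessions with no ticket, a confirmed action, and no recontact."""
    if not sessions:
        return 0.0
    verified = sum(
        (not s.ticket_created)
        and s.downstream_action_confirmed
        and (not s.recontacted_within_window)
        for s in sessions
    )
    return verified / len(sessions)
```

A gap between the two numbers points to sessions that looked deflected but either produced no durable outcome or resurfaced elsewhere.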

Business Value and Use Cases

The strongest business value from generative AI rarely comes from answering FAQs alone. It comes from redistributing human effort.

When routine requests are handled safely by AI, agents spend more time on high-risk, high-value journeys such as disputes, fraud, or complex account issues.

In parallel, internal copilots reduce preparation and handoff time on complex cases by drafting summaries, gathering context, and standardizing escalation notes.

This creates a second form of deflection: issues that never escalate because expert time is used more effectively upstream.

Enhancing Customer Experience with Generative AI

Customer experience improves most when AI preserves continuity across steps and channels. Users care less about any single answer than about whether the system “remembers” their situation. Effective systems maintain:

- A persistent session state

- Clear policy enforcement

- Retrieval limited to eligible sources

When these layers are explicit, incorrect actions drop even if the underlying model stays the same. A useful framing is:

Experience quality = continuity × (precision + permission)

Most failures occur when continuity breaks.

Core Architectural Pattern: Policy-First Retrieval

Across deployments, one pattern consistently separates safe automation from risk: policy-first retrieval, where routing logic, not the model, decides what the AI is allowed to know and do.

This matters because the same model can either be a safe deflection engine or a compliance risk, depending on how you structure the path from user utterance to data access.

Three components have to interlock: a high‑recall intent classifier, a policy engine that maps intent × user entitlement × channel to allowed indices and actions, and a retrieval layer that operates only inside that allowed slice.
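A minimal sketch of that interlock, assuming a static lookup table as the policy engine and a substring match standing in for real retrieval. The index names, action names, and table entries are hypothetical.

```python
from typing import NamedTuple


class Scope(NamedTuple):
    indices: frozenset  # indices the retrieval layer may query
    actions: frozenset  # actions the system may trigger


DENY = Scope(frozenset(), frozenset())

# Hypothetical policy table keyed by (intent, entitlement, channel).
POLICY_TABLE = {
    ("refund_request", "premium", "chat"): Scope(
        frozenset({"faq", "billing_policy"}), frozenset({"issue_refund"})
    ),
    ("refund_request", "free", "chat"): Scope(frozenset({"faq"}), frozenset()),
}


def resolve_scope(intent: str, entitlement: str, channel: str) -> Scope:
    """Policy engine: unknown combinations get no access at all."""
    return POLICY_TABLE.get((intent, entitlement, channel), DENY)


def retrieve(query: str, scope: Scope, corpus: dict) -> list:
    """Retrieval layer: search only the indices inside the allowed slice."""
    hits = []
    for index_name in sorted(scope.indices):
        for doc in corpus.get(index_name, []):
            if query.lower() in doc.lower():
                hits.append((index_name, doc))
    return hits
```

The point of the design is that `retrieve` never sees an index the policy engine did not hand it, so a document in an internal index cannot leak into an answer no matter how well it matches the query.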

There are two common architectures here. Embedding‑centric designs push everything through vector search, which works well for messy, unstructured content but often ignores entitlement boundaries.

Hybrid designs pair sparse retrieval (for schemas, SKUs, entitlements) with vectors (for explanations), trading a bit of latency for far higher safety and precision on “can we do this?” questions.

“Most failures I see are not model issues; they’re policy leakage issues,”

— Lilian Weng, Head of Safety Systems, OpenAI
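To make the hybrid idea concrete, here is a toy sketch in which an exact lookup answers the "can we do this?" question and a similarity ranking picks the explanation. A bag-of-words cosine stands in for embedding similarity purely to keep the example self-contained. The SKUs, entitlement fields, and documents are invented for illustration.

```python
import math
from collections import Counter

# Hypothetical structured store: exact keys, no fuzzy matching.
ENTITLEMENTS = {
    "SKU-100": {"refundable": True},
    "SKU-200": {"refundable": False},
}

# Hypothetical unstructured explanations, ranked by similarity.
EXPLANATIONS = [
    "Refunds are returned to the original payment method within five days",
    "Password resets are sent by email",
]


def cosine(a: str, b: str) -> float:
    """Bag-of-words cosine similarity; a stand-in for embedding similarity."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[t] * vb[t] for t in va)
    norm = math.sqrt(sum(v * v for v in va.values())) * math.sqrt(
        sum(v * v for v in vb.values())
    )
    return dot / norm if norm else 0.0


def hybrid_answer(sku: str, question: str) -> dict:
    """Exact lookup decides permission; similarity only chooses the wording."""
    record = ENTITLEMENTS.get(sku)
    if record is None:
        # No structured record means no answer, regardless of text similarity.
        return {"allowed": None, "explanation": None}
    best = max(EXPLANATIONS, key=lambda doc: cosine(question, doc))
    return {"allowed": record["refundable"], "explanation": best}
```

Keeping the permission decision out of the similarity search means a close-sounding document can never flip a "no" into a "yes".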

Data Sources and Integration

Most teams underestimate how opinionated their data needs to be: the hard part isn’t “connecting everything,” it’s deciding which source is allowed to win for a given intent and user. That choice drives deflection because conflicting answers destroy trust faster than a slow queue.

Treating every source identically via a single vector index is tempting and usually wrong; you lose update guarantees, entitlements, and schema semantics that matter for refunds, SLAs, and risk actions.

A practical mental model is:

Effective context = canonical source × freshness × intent alignment

If any term fails, the answer degrades.
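The multiplicative framing can be turned into a small scoring sketch. Several choices here are assumptions made only for illustration: the canonical term is treated as binary, freshness decays linearly to zero at a per-source age limit, and intent alignment is a 0 to 1 score supplied by some upstream classifier or reranker.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    source: str
    age_days: float
    intent_alignment: float  # 0..1, assumed to come from an upstream scorer


# Hypothetical: which source is allowed to win for each intent.
CANONICAL = {"refund_request": "billing_policy", "how_to": "docs"}

# Hypothetical freshness limits per source, in days.
MAX_AGE_DAYS = {"billing_policy": 30, "docs": 180, "faq": 90}


def effective_context(candidate: Candidate, intent: str) -> float:
    """Product of the three terms; any zero term zeroes the whole score."""
    canonical = 1.0 if CANONICAL.get(intent) == candidate.source else 0.0
    limit = MAX_AGE_DAYS.get(candidate.source, 0)
    freshness = max(0.0, 1.0 - candidate.age_days / limit) if limit else 0.0
    return canonical * freshness * candidate.intent_alignment


def pick_winner(candidates: list, intent: str):
    """Return the best-scoring candidate, or None if nothing scores above zero."""
    scored = [(effective_context(c, intent), c) for c in candidates]
    score, best = max(scored, key=lambda pair: pair[0], default=(0.0, None))
    return best if score > 0 else None
```

Under these assumptions a fresh, well-matched FAQ entry still loses to the canonical billing policy for a refund intent, and a stale canonical document yields no answer at all rather than a confident wrong one.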

People, Process, and Change Management

Support AI rewires authority. Without explicit ownership, deflection plateaus. High-performing teams define:

- Clear owners for policies and runbooks

- Structured feedback loops for errors and edge cases

- Predictable release cadences for AI behavior changes

AI adoption fails when treated as a tool rollout instead of a governance redesign.

Risk, Control, and Observability

Most serious incidents stem from mis-scoped permissions, not model errors. Mature teams treat AI automation like a control system: exposure limits, escalation paths, and continuous monitoring.

Success is measured not by raw automation volume, but by policy-conformant resolution—AI decisions that remain uncorrected and within bounds.

FAQ

How is generative AI support different from traditional chatbots?

Traditional chatbots follow rules and keyword trees. Generative AI infers intent from the conversation, uses context (customer, product, plan, channel), and can maintain state across a journey—so it can handle more variation, as long as it’s bounded by retrieval and policy.

What reduces hallucinations in real deployments?

Clear governance and retrieval boundaries: define canonical sources per intent, enforce permissions, keep knowledge fresh, and use retrieval-first (or retrieval-only) patterns for sensitive topics. The goal is to prevent the model from “making up” missing details by limiting what it can see and say.

How should enterprises measure impact on deflection, FCR, and satisfaction?

Track outcomes at the session level: intent → policy version → sources used → final outcome (resolved, recontact, escalated). Then segment by customer tier, product, and issue type to identify where automation is safe and where it harms experience.

Why do CRM, help desk, and knowledge integrations matter?

They provide authoritative customer state and structured fields (plan, entitlement, region, ticket category) that improve routing and retrieval. Without integrations, AI falls back to generic answers and can contradict account-specific reality.

What works best for risk and compliance in regulated industries?

Use intent-level risk tiers. Low-risk intents can automate end-to-end; high-risk intents should be constrained (templates, retrieval-only) and routed through human approval. Monitoring should focus on policy conformance and reversals, not raw deflection.
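A minimal sketch of intent-level risk tiers, assuming a two-tier scheme and a hand-maintained mapping. The intent names and the shape of the routing result are hypothetical.

```python
from enum import Enum


class Tier(Enum):
    LOW = 1
    HIGH = 2


# Hypothetical mapping maintained by the policy owners.
RISK_TIERS = {
    "order_status": Tier.LOW,
    "address_change": Tier.LOW,
    "claim_dispute": Tier.HIGH,
}


def route(intent: str) -> dict:
    """Decide how much autonomy the AI gets for a given intent."""
    # Unmapped intents default to the high-risk path.
    tier = RISK_TIERS.get(intent, Tier.HIGH)
    if tier is Tier.LOW:
        return {"mode": "automate", "human_approval": False}
    return {"mode": "retrieval_only", "human_approval": True}
```

Defaulting unknown intents to the high-risk tier is a deliberate choice in this sketch: new or misclassified requests fall back to human approval until someone explicitly classifies them.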

The Strategic Takeaway

Generative AI for customer support is no longer about experimentation. It’s about sustainability. Companies now face a clear choice:

- Build and maintain increasingly complex AI systems, absorbing the cost and risk of constant change

- Adopt managed platforms that evolve with the AI landscape, allowing teams to focus on outcomes rather than infrastructure

As models continue to advance at an accelerating pace, the question is not whether AI will transform customer support—it already has. The real question is whether your organization wants to run the AI race or simply benefit from it.

For most teams, the smarter move is clear: focus on your core, and let specialists handle the rest.

