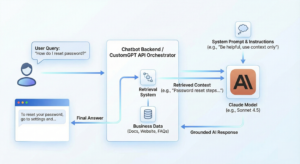

You use Claude in a chatbot by choosing a suitable Claude model, wiring it into your stack (API, cloud platform, or no-code builder), designing prompts and safety rules, and optionally combining it with a RAG layer like CustomGPT.ai so Claude can answer from your own docs and data.

TL;DR

Building a reliable AI support bot with Claude? This guide (updated January 2026) breaks down the technical path: from choosing the right model (Sonnet 4.5 for balance vs. Haiku for speed) to wiring up the API or AWS Bedrock and managing context windows. If you want to skip the coding and retrieval headaches, CustomGPT.ai lets you train trusted, hallucination-free agents on your own business data and deploy them as website widgets or MCP tools in minutes. No code required.

Scope:

Last updated: January 2026. Applies globally; align data collection and consent with local privacy laws such as GDPR in the EU and CCPA/CPRA in California.

Choosing a Claude model for your chatbot

Claude is a family of models with different trade-offs in intelligence, speed, and cost. Anthropic’s docs currently recommend Claude Sonnet 4.5 as the default for most use cases because it balances capability and latency well.

For a typical support/FAQ chatbot:

- Start with Sonnet 4.5 for production traffic.

- Use Haiku-class models for very high-volume, simple Q&A where speed and cost matter most.

- Reserve Opus-class models for complex reasoning, deep troubleshooting, or high-stakes workflows that need maximum accuracy and chain-of-thought style planning.

Whichever you pick, try it in your real flows and measure:

- Response time

- Cost per 1,000 conversations

- Resolution rate / “did this answer your question?” feedback
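To make the three measurements concrete, here is a minimal sketch that aggregates them from logged conversations. The `ConversationRecord` schema and the sample numbers are hypothetical; adapt the fields to whatever your own logging captures.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class ConversationRecord:
    """One logged conversation (hypothetical schema for this sketch)."""
    latency_s: float  # time until the full response arrived, in seconds
    cost_usd: float   # total token cost of the conversation
    resolved: bool    # user said "yes" to "did this answer your question?"


def summarize(records: list[ConversationRecord]) -> dict[str, float]:
    """Aggregate response time, cost per 1,000 conversations, and resolution rate."""
    if not records:
        raise ValueError("need at least one record")
    total_cost = sum(r.cost_usd for r in records)
    return {
        "median_latency_s": median(r.latency_s for r in records),
        "cost_per_1000_usd": 1000 * total_cost / len(records),
        "resolution_rate": sum(r.resolved for r in records) / len(records),
    }


# Made-up sample data, only to show the call.
sample = [
    ConversationRecord(latency_s=1.2, cost_usd=0.010, resolved=True),
    ConversationRecord(latency_s=2.0, cost_usd=0.014, resolved=False),
    ConversationRecord(latency_s=1.6, cost_usd=0.012, resolved=True),
    ConversationRecord(latency_s=3.1, cost_usd=0.020, resolved=True),
]
print(summarize(sample))
```

Running the same summary for each candidate model on the same set of real questions gives you a like-for-like comparison.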

Using Claude in a custom-coded chatbot via the API

This path is ideal if you control the backend (Node, Python, etc.) and want full flexibility.

Steps

- Get API access and keys

Sign up for a Claude account and obtain an API key from the console. The Claude API is a standard HTTPS API you call from your server code.

- Call the Messages API

Use the Messages API endpoint, which takes a list of messages (role + content) and returns the next assistant message. This is the primary API for chatbots.

- Structure conversation turns

Put your rules in the top-level system parameter rather than in the messages array. The messages array holds only user and assistant turns: any previous turns you want to resend, followed by what the user just typed. Keep history concise to stay within context limits.

- Enable streaming for responsive UX

Use the streaming option so your UI can display Claude’s response token-by-token, making the bot feel more real-time and reducing perceived latency.

- Implement error handling and timeouts

Handle network errors, invalid parameters, and timeouts gracefully. Show a friendly fallback message and optionally log the error for debugging.

- Log prompts and responses (with care)

Store enough metadata to debug issues and improve prompts later, but avoid logging sensitive user data unless you have a clear policy and consent.
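The steps above can be sketched with nothing but the Python standard library. Treat this as an illustration under assumptions, not a reference client: the model alias `claude-sonnet-4-5`, the turn limit, and the fallback text are placeholders to replace with your own choices, and the helper names are made up for this example.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"
FALLBACK = "Sorry, something went wrong. Please try again in a moment."


def build_payload(system_prompt, history, user_text,
                  model="claude-sonnet-4-5",  # assumed alias; check your console
                  max_tokens=512, max_turns=6):
    """Build a Messages API body: rules in `system`, turns in `messages`."""
    recent = list(history[-max_turns:])  # keep history concise
    # The conversation must open with a user turn, so drop a leading assistant turn.
    while recent and recent[0]["role"] != "user":
        recent = recent[1:]
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": system_prompt,
        "messages": recent + [{"role": "user", "content": user_text}],
    }


def ask_claude(payload, api_key, timeout=30.0):
    """POST the payload; return the reply text, or a friendly fallback on failure."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            body = json.load(response)
    except (OSError, ValueError) as exc:  # network errors, HTTP errors, timeouts, bad JSON
        print(f"Claude request failed: {type(exc).__name__}")  # log without user data
        return FALLBACK
    return "".join(block.get("text", "") for block in body.get("content", [])
                   if block.get("type") == "text")


history = [
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello! How can I help?"},
]
payload = build_payload("You are a support assistant.", history,
                        "How do I reset my password?")
# reply = ask_claude(payload, api_key="...")  # needs a real key and network access
```

Keeping payload construction separate from the network call makes the prompt logic easy to unit test without spending tokens.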

Using Claude in chatbots on cloud AI platforms

If your stack already runs on a cloud provider like AWS, it can be convenient to use Claude via that platform.

Steps (example: Amazon Bedrock)

- Enable Claude models in Bedrock

In your AWS account, enable access to Anthropic Claude models in Amazon Bedrock.

- Use the Claude Messages API variant

AWS provides a Claude Messages API surface where you send messages, max_tokens, and related parameters to generate chatbot responses.

- Choose the model in Bedrock settings

Select the desired Claude model (for example, a Sonnet or Haiku variant) in your Bedrock client or SDK configuration.

- Wire into your backend

Replace your previous LLM calls with Bedrock’s Claude endpoint. Map user messages to messages payloads and forward Claude’s output to your chatbot UI.

- Tune parameters per use case

Adjust temperature (creativity), maximum tokens, and stop sequences to match your UX. Use lower temperature for factual support bots, higher for creative assistants.

- Monitor and scale

Use your cloud’s monitoring and logging tools to track latency, errors, and usage, then autoscale as traffic grows.
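As a rough sketch of the Bedrock steps, the snippet below builds a Claude request body and sends it with boto3's `invoke_model`. The region, the default parameter values, and the helper names are assumptions for illustration; `model_id` is deliberately left for you to copy from the Bedrock console, since available IDs vary by account and region.

```python
import json


def build_bedrock_body(system_prompt, messages, max_tokens=512,
                       temperature=0.2, stop_sequences=None):
    """Build the JSON body Bedrock expects for Anthropic Claude models."""
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "temperature": temperature,  # low value for a factual support bot
        "system": system_prompt,
        "messages": messages,
    }
    if stop_sequences:
        body["stop_sequences"] = stop_sequences
    return body


def invoke_claude_on_bedrock(model_id, body, region="us-east-1"):
    """Send the body to Bedrock and return the reply text."""
    import boto3  # imported here so the body builder works without the AWS SDK

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(modelId=model_id, body=json.dumps(body))
    result = json.loads(response["body"].read())
    return "".join(block["text"] for block in result["content"]
                   if block["type"] == "text")


body = build_bedrock_body(
    "You are a concise support assistant.",
    [{"role": "user", "content": "Where can I download my invoice?"}],
)
# reply = invoke_claude_on_bedrock("<model ID from your Bedrock console>", body)
```

A creative assistant would use the same body with a higher `temperature`; everything else stays the same.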

Using Claude in no-code or low-code chatbot builders

Many chatbot builders now offer “bring your own LLM” or direct Claude connectors.

Typical pattern

- Create or open a bot flow

In your builder, create a chatbot project and locate the “AI”, “LLM”, or “Custom model” step.

- Select Claude or custom LLM

If Claude is built-in, choose it and paste your API key or connect via OAuth. If not, use the builder’s generic HTTP/LLM block to call the Claude Messages API.

- Map user input to Claude

Configure the block so the user’s last message (plus any important variables like user ID or language) is sent as the user content.

- Add system instructions in the flow

Many builders let you add “System” or “Instruction” text. Use this to define your bot’s role, tone, and boundaries.

- Capture Claude’s reply into variables

Map the model’s reply into a variable like ai_response, then use it in your message node shown to the user.

- Test with realistic conversations

Run through real support or sales chats. Tweak prompts, parameters, and routing logic until results are stable.
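If your builder only offers a generic HTTP block, it helps to see what that block has to do. The sketch below mimics it in Python: flow variables go into the request body, and the text of the reply is captured into `ai_response`. The variable names, the model alias, and the sample response are illustrative assumptions; your builder will have its own syntax for the same mapping.

```python
def build_block_request(variables, instructions, model="claude-sonnet-4-5"):
    """Map flow variables to a Messages API body (model alias is an assumption)."""
    user_content = (
        f"[user_id={variables['user_id']}] [language={variables['language']}]\n"
        f"{variables['last_message']}"
    )
    return {
        "model": model,
        "max_tokens": 400,
        "system": instructions,  # the builder's "System" or "Instruction" text
        "messages": [{"role": "user", "content": user_content}],
    }


def capture_reply(response_json, variables):
    """Store the text blocks of the reply in the ai_response flow variable."""
    text = "".join(block["text"] for block in response_json.get("content", [])
                   if block.get("type") == "text")
    variables["ai_response"] = text
    return variables


flow_vars = {"user_id": "u-42", "language": "en", "last_message": "Do you ship to Canada?"}
request_body = build_block_request(flow_vars, "You are a friendly sales assistant.")

# A hand-written response in the shape the Messages API returns.
sample_response = {
    "role": "assistant",
    "content": [{"type": "text", "text": "Yes, we ship to Canada."}],
    "stop_reason": "end_turn",
}
flow_vars = capture_reply(sample_response, flow_vars)
print(flow_vars["ai_response"])
```

In a visual builder, `capture_reply` usually corresponds to a response-path setting that points at the first text block of `content`.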

The Economics of Claude: API Costs Simplified

Switching to Claude means paying for “Cognitive Labor” via tokens. Understanding the “Input vs. Output” dynamic is key to predicting your ROI.

- The “Read vs. Write” Rule

Like most models, Claude bills based on volume, but the price depends on the direction:

- Input (Reading) is Cheap: Sending your documents and instructions to the model costs very little.

- Output (Writing) is Premium: The text Claude generates costs significantly more (5x the input price for each model in the pricing table below).

- The CustomGPT Benefit: Our RAG architecture saves you money by surgically extracting only the relevant snippets for the model to read, rather than dumping entire files into the conversation.

- Choose Your Intelligence Tier

Anthropic offers three tiers. In 2026, the choice usually comes down to Speed vs. Nuance:

| Feature | Claude Sonnet 4.5 | Claude Haiku 4.5 | Claude Opus 4.5 |
| --- | --- | --- | --- |
| Best For | Complex agents, coding, support | Speed, routing, simple tasks | Maximum intelligence, reasoning |
| When to Use | Your go-to for most tasks; balances smarts and cost perfectly. | High-volume, simple queries where speed and budget are top priority. | Complex problems needing deep reasoning, regardless of cost. |
| Speed | Fast | Fastest | Moderate |
| Input Cost | $3.00 / million tokens | $1.00 / million tokens | $5.00 / million tokens |
| Output Cost | $15.00 / million tokens | $5.00 / million tokens | $25.00 / million tokens |
| Context Window | 200K (1M beta available) | 200K | 200K |
| Knowledge Cutoff | Jan 2025 | Feb 2025 | May 2025 |
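To turn the table into a budget figure, multiply tokens by price. The sketch below uses the input and output prices from the table; the token counts (20,000 input tokens when whole documents are pasted in, 2,000 when only retrieved snippets are sent, 300 output tokens per conversation) are made-up assumptions you should replace with your own measurements.

```python
# (input price, output price) in USD per million tokens, from the table above.
PRICES_PER_MILLION = {
    "Claude Sonnet 4.5": (3.00, 15.00),
    "Claude Haiku 4.5": (1.00, 5.00),
    "Claude Opus 4.5": (5.00, 25.00),
}


def cost_per_1000_conversations(model, input_tokens, output_tokens):
    """Estimate USD cost of 1,000 conversations with the given token usage each."""
    input_price, output_price = PRICES_PER_MILLION[model]
    per_conversation = (
        input_tokens * input_price + output_tokens * output_price
    ) / 1_000_000
    return round(1000 * per_conversation, 2)


for model in PRICES_PER_MILLION:
    full = cost_per_1000_conversations(model, 20_000, 300)  # whole documents
    rag = cost_per_1000_conversations(model, 2_000, 300)    # retrieved snippets
    print(f"{model}: full documents ${full:.2f}, retrieved snippets ${rag:.2f}")
```

Under these assumed token counts, most of the bill comes from input volume, which is why trimming what the model reads matters even though input tokens are the cheaper direction.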

Which Integration Method fits your Team?

| Integration Method | Tech Skill Required | Setup Speed | Key Benefit | Best For |
| --- | --- | --- | --- | --- |
| CustomGPT.ai + MCP | Low / No-Code | Fastest (Minutes) | Zero Hallucinations (RAG is built-in) | Support teams needing accurate answers from their own documents immediately. |
| No-Code Builder | Low | Fast (Hours) | Visual Flow | Marketing/Sales teams creating simple, linear conversation flows. |
| Direct API (Node/Python) | High | Slow (Days/Weeks) | Max Flexibility | Dev teams building a highly custom UI or complex backend logic. |
| Cloud Platform (AWS Bedrock) | Medium / High | Medium | Security & Compliance | Enterprise teams already working within the AWS ecosystem. |

How to do it with CustomGPT.ai

Here’s how to make Claude use your own knowledge via a CustomGPT.ai agent, and/or deploy CustomGPT as the chatbot that runs on Claude-powered models behind the scenes.

Build a knowledge-backed agent in CustomGPT.ai

- Create your CustomGPT.ai account and agent

Follow the “Welcome” and “Create agent” flow to set up an AI agent based on your business content.

- Connect your data sources

Use Manage AI agent data to add websites, sitemaps, files, and other sources so the agent can answer from your docs, help center, and knowledge base.

- Fine-tune behavior and prompts

In the agent settings, configure persona, tone, and behavior so the answers sound like your brand and respect your policies.

Deploy the agent as a chatbot

- Embed it as a website/chat widget

Use the Embed AI agent into any website or Add live chat to any website guides to drop a floating chat widget, embedded iframe, or “copilot” button into your site or help desk.

- Expose it via the CustomGPT API

Follow the API quickstart guide to call your agent over HTTPS from any custom UI. Your own frontend becomes the chatbot, while CustomGPT handles retrieval over your data.

Let Claude talk to your CustomGPT.ai agent

- Deploy an MCP server for your agent

Use the Deploy using MCP server guide to host or deploy the open-source customgpt-mcp server connected to your CustomGPT API key.

- Connect MCP to Claude Web/Desktop

Follow Deploy to Claude Web (and Desktop) docs to add your MCP endpoint and token in Claude’s settings. Claude can now call your CustomGPT tools as a connector.

- Use Claude as the front-end chatbot

In Claude’s chat, talk to your MCP-backed tool (your CustomGPT agent). Claude remains the conversational interface; CustomGPT.ai provides grounded answers from your content.

Designing prompts, context, and memory for Claude chatbots

Good prompt and context design is the difference between a “smart” chatbot and a chaotic one.

- Write a clear system prompt

Use Anthropic’s prompt-engineering guidance: specify role, audience, style, and what to do when unsure (ask clarifying questions or admit uncertainty).

- Use examples (few-shot prompts)

Include a few example Q&As that show the kind of answers you want, especially for tricky edge cases.

- Separate rules from user input

Keep stable instructions in a dedicated system prompt, and treat user messages as data, not something allowed to override rules.

- Manage conversation history

Only send relevant turns back to Claude to stay within context limits and avoid confusing the model.

- Combine with retrieval

If you’re using CustomGPT or another RAG layer, pass retrieved snippets in a structured way (e.g., “Context” section) so Claude knows what information is supporting its answer.
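Here is one way these pieces could fit together in code. It is a sketch, not a prescribed format: the `<context>`, `<snippet>`, and `<question>` tag names, the example Q&A, and the helper name are all choices made for this illustration. It also assumes `history` holds complete user/assistant pairs, so trimming never splits a pair.

```python
SYSTEM_PROMPT = (
    "You are a support assistant for our customers. Be concise and friendly. "
    "Answer only from the provided context. If the context does not cover the "
    "question, say you are not sure and ask a clarifying question."
)


def build_messages(few_shot, history, snippets, question, max_pairs=2):
    """Assemble few-shot examples, trimmed history, and retrieved context."""
    messages = []
    # Few-shot examples are replayed as earlier user/assistant turns.
    for example_question, example_answer in few_shot:
        messages.append({"role": "user", "content": example_question})
        messages.append({"role": "assistant", "content": example_answer})
    # Keep only the most recent pairs to stay within context limits.
    messages.extend(history[-2 * max_pairs:])
    # Retrieved snippets go in a clearly delimited context section.
    context = "\n".join(
        f'<snippet source="{s["source"]}">{s["text"]}</snippet>' for s in snippets
    )
    messages.append({
        "role": "user",
        "content": f"<context>\n{context}\n</context>\n\n<question>{question}</question>",
    })
    return messages


messages = build_messages(
    few_shot=[("Can I get a refund?", "Yes, within 30 days of purchase.")],
    history=[
        {"role": "user", "content": "Hi"},
        {"role": "assistant", "content": "Hello! How can I help?"},
    ],
    snippets=[{"source": "billing.md", "text": "Invoices are under Settings > Billing."}],
    question="Where do I find my invoices?",
)
```

`SYSTEM_PROMPT` travels separately from `messages`, which keeps the rules out of reach of whatever the user types.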

Handling safety, abuse, and guardrails in Claude-powered chatbots

Claude has built-in safety behaviors aimed at reducing harmful, hateful, or policy-violating outputs, and Anthropic publishes detailed safeguards guidance.

To keep your chatbot safe:

- Align your system prompt with Claude’s policies

Explicitly forbid disallowed behavior (e.g., hate, self-harm instructions, harassment) and tell the bot to refuse those requests.

- Implement input and output filters

Before sending user content to Claude, optionally block obviously malicious or disallowed inputs. After getting a response, you can run additional checks.

- Handle abusive users gracefully

Detect repeated abuse and respond with a neutral “cannot help with that” message or gently end the conversation.

- Log safety-relevant events

Record attempted policy violations, both to improve your guardrails and to comply with internal risk processes.

- Respect Anthropic’s usage policy

Make sure your use case complies with Anthropic’s Usage Policy and any guidance about agentic features.
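A minimal sketch of the filter, abuse-handling, and logging steps might look like the following. The blocked patterns, the three-strike limit, and the messages are placeholders chosen for illustration; a keyword list like this is only a first line of defense and is no substitute for a proper moderation step.

```python
import re
from collections import Counter

# Hypothetical patterns; replace with rules that match your own policy.
BLOCKED_PATTERNS = [
    re.compile(r"\bignore (all|previous) instructions\b", re.IGNORECASE),
    re.compile(r"\bcredit card number\b", re.IGNORECASE),
]
REFUSAL = "Sorry, I can't help with that."
CLOSED = "This conversation has been closed. Please contact support if you need help."
MAX_STRIKES = 3

strikes = Counter()  # blocked attempts per user
safety_log = []      # safety-relevant events, stored without message text


def violates(text):
    """Return True if the text matches any blocked pattern."""
    return any(pattern.search(text) for pattern in BLOCKED_PATTERNS)


def guarded_reply(user_id, user_text, generate):
    """Filter input, call the model via `generate`, then filter output."""
    if strikes[user_id] >= MAX_STRIKES:
        return CLOSED  # gently end the conversation after repeated abuse
    if violates(user_text):
        strikes[user_id] += 1
        safety_log.append({"user_id": user_id, "stage": "input"})
        return REFUSAL
    reply = generate(user_text)
    if violates(reply):
        safety_log.append({"user_id": user_id, "stage": "output"})
        return REFUSAL
    return reply


# `generate` stands in for your real Claude call.
print(guarded_reply("u1", "Where is my invoice?", lambda text: "Under Settings > Billing."))
```

Passing the model call in as `generate` keeps the guardrail logic independent of whichever integration path you chose.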

While other models race for speed, Claude excels at nuance. If you are building a chatbot for sensitive Customer Support or internal HR policy, you don’t just need an answer; you need the right tone. CustomGPT.ai lets you swap the underlying model to Claude 4.5 instantly to capture that ‘human touch’ without rewriting a line of code.

Example: customer support FAQ chatbot with Claude

Here’s a simple blueprint you can adapt.

Scenario

You run a SaaS app and want a chatbot on your docs site that:

- Answers FAQs accurately

- Escalates to human support when needed

- Optionally uses CustomGPT.ai + MCP so Claude can reference your full knowledge base

Implementation steps

- Prepare your knowledge

- Index your docs and FAQ into CustomGPT.ai as an agent using “Manage AI agent data”.

- Create the chat backend

- Either:

- Call Claude’s Messages API directly from your server and plug in your own retrieval, or

- Use CustomGPT’s API to query your agent, then optionally send a condensed answer + sources to Claude for final wording.

- Design the system prompt

- “You are a helpful support assistant for [your product]. Always answer using the provided knowledge. If unsure or the question is out of scope, say you don’t know and offer to contact support.”

- Build the UI

- Embed CustomGPT’s live chat widget on your docs site, or build a small JS chat box hitting your backend or CustomGPT API.

- Add Claude + MCP front-end (optional)

- Deploy a CustomGPT MCP server and connect it to Claude Web so you (or your team) can test support conversations directly inside Claude, powered by your CustomGPT agent.

- Test and iterate

- Run real support questions through the bot, inspect logs, tweak prompts, and adjust data sources until users consistently get correct, concise answers.
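The blueprint can be sketched as a single function that retrieves knowledge, asks the model, and decides whether to escalate. Everything named here is hypothetical: `retrieve` and `ask_model` stand in for your retrieval layer and your Claude call, `ExampleApp` is a placeholder product name, and matching on phrases like "don't know" is a deliberately simple escalation rule.

```python
SYSTEM_PROMPT_TEMPLATE = (
    "You are a helpful support assistant for {product}. Always answer using the "
    "provided knowledge. If unsure or the question is out of scope, say you "
    "don't know and offer to contact support."
)
ESCALATION_MARKERS = ("don't know", "contact support")


def answer_question(question, retrieve, ask_model, product="ExampleApp"):
    """Return the bot's answer plus a flag telling the UI to offer a human."""
    snippets = retrieve(question)
    if not snippets:
        # Nothing relevant in the knowledge base: escalate without calling the model.
        return {"answer": "I don't know. I can connect you with our support team.",
                "escalate": True}
    knowledge = "\n".join(f"- {snippet}" for snippet in snippets)
    user_content = f"Knowledge:\n{knowledge}\n\nQuestion: {question}"
    answer = ask_model(SYSTEM_PROMPT_TEMPLATE.format(product=product), user_content)
    escalate = any(marker in answer.lower() for marker in ESCALATION_MARKERS)
    return {"answer": answer, "escalate": escalate}


# Stand-ins so the flow can be exercised without a model or a knowledge base.
def fake_retrieve(question):
    if "password" in question.lower():
        return ["Reset your password under Settings > Security."]
    return []


def fake_model(system_prompt, user_content):
    return "Go to Settings > Security to reset it."


print(answer_question("How do I reset my password?", fake_retrieve, fake_model))
```

Swapping the stand-ins for real calls leaves the escalation logic untouched, so you can test it with recorded support questions before going live.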

Conclusion

The challenge isn’t just using Claude; it’s making it safe and reliable for your business. CustomGPT.ai solves this instantly. By connecting Claude to your secure documents, we ensure every answer is accurate and hallucination-free, no complex coding required. Don’t waste time building backend infrastructure from scratch. Give your customers the fast, factual support they deserve with a bot that actually knows your products. Create your custom Claude agent today.

FAQs

How do I connect Claude to my existing customer support chatbot?

You connect Claude to a support chatbot by calling the Claude Messages API from your backend or via a cloud AI platform like Amazon Bedrock, then mapping user messages into the messages payload and streaming responses back to your UI. You define a clear system prompt for support behavior, manage conversation history, and add error handling and logging so the bot stays reliable in production.
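For the streaming part, a request sent with `"stream": true` comes back as server-sent events, and the text arrives in `content_block_delta` events. The sketch below pulls the text out of such a stream so you can forward it to your UI as it arrives; the sample lines are hand-written for illustration, and the function name is made up for this example.

```python
import json
from typing import Iterable, Iterator


def iter_text_deltas(sse_lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from the lines of a Messages API event stream."""
    for line in sse_lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip "event:" lines and blank separators
        event = json.loads(line[len("data:"):])
        if event.get("type") == "content_block_delta":
            delta = event.get("delta", {})
            if delta.get("type") == "text_delta":
                yield delta["text"]
        elif event.get("type") == "message_stop":
            break  # the response is complete


# Hand-written sample in the shape of a real stream.
sample_stream = [
    'event: content_block_delta',
    'data: {"type": "content_block_delta", "index": 0, "delta": {"type": "text_delta", "text": "Hello"}}',
    '',
    'event: ping',
    'data: {"type": "ping"}',
    '',
    'event: content_block_delta',
    'data: {"type": "content_block_delta", "index": 0, "delta": {"type": "text_delta", "text": " there"}}',
    '',
    'event: message_stop',
    'data: {"type": "message_stop"}',
]

for fragment in iter_text_deltas(sample_stream):
    print(fragment, end="", flush=True)  # in production, push each fragment to the UI
print()
```

In a real backend, `sse_lines` would be the decoded lines of the HTTP response body rather than a list.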

Can I use Claude in my chatbot with my own docs and knowledge base?

Yes. Combine Claude with a retrieval layer like CustomGPT.ai so it can answer from your docs, FAQs, and help center. You create a CustomGPT.ai agent, add your websites and files as data sources, and expose it via API, live chat, or MCP. Claude Web/Desktop can then call that agent as a connector, giving you grounded, doc-aware responses inside a Claude-powered chat.