For nearly three years, our engineering team chased one goal: sub-second performance. Every optimization, every system tweak, every millisecond we could shave off was a step toward making AI interaction feel instant.

Today, that mission is complete.

We’re thrilled to announce that CustomGPT.ai has achieved sub-second query speed — and with it, a new milestone for enterprise-grade AI.

The Breakthrough: Instant Answers, Every Time

Powered by OpenAI’s Priority Processing — now including the newly upgraded GPT-4o-mini Priority interpretation and classification services — and our custom speed-optimized infrastructure, CustomGPT.ai agents now respond faster than you can blink.

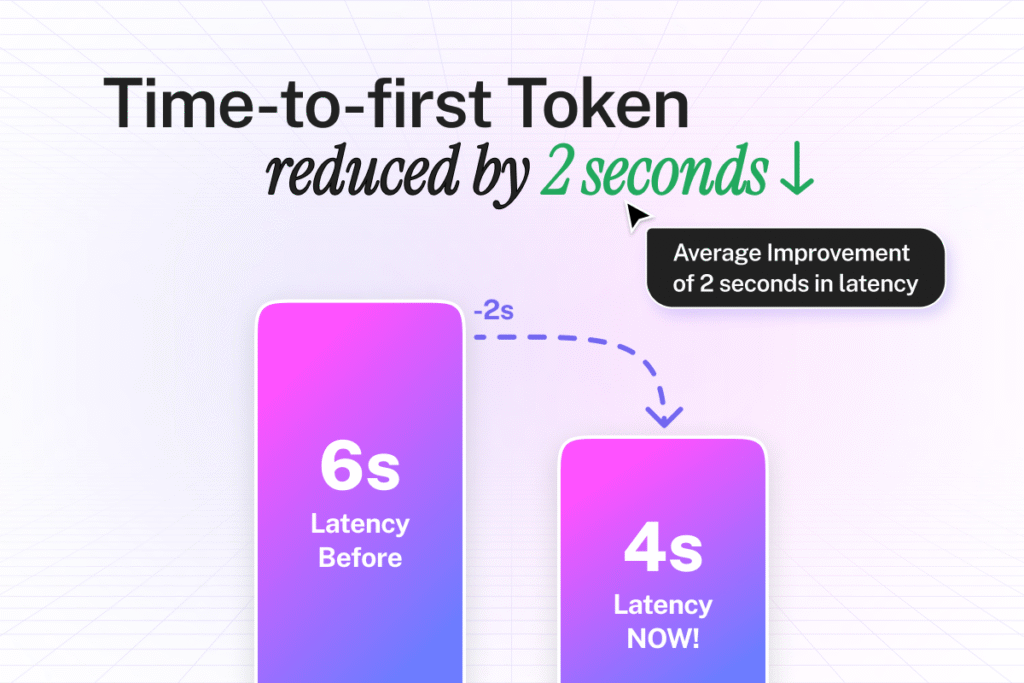

Together, these improvements cut latency by up to 2 seconds across the entire system.

Best of all: this upgrade is automatically enabled for all accounts (except those running Azure).

Sub-second performance is now available to everyone using our speed-optimized mode.

And for Enterprise customers, it gets even better.

Introducing Priority Queries: automatic priority processing for every single query you run. It isn’t tied to a particular model or configuration. Every request you send now jumps to the front of the line.

The result?

- Time-to-first-token drops from 6 seconds to 4 seconds.

- Average latency improves by up to 2 seconds across all interactions.

- Background classification and query interpretation now run on GPT-4o-mini Priority, increasing throughput across all agents.

- Fastest Responses are now virtually instant.

- Numeric Search is now optimized so that queries without alphanumeric codes incur zero added latency.

No setup required. No additional cost. It’s already live in your account.

Why Speed Matters: Every Millisecond Is Money

In AI, every millisecond matters and numbers don’t lie.

According to Google’s research, each extra second of delay leads to exponentially higher abandonment rates. When your AI hesitates, prospects leave, support tickets escalate, employees give up, and conversion rates tank.

When it responds instantly, everything changes.

- Employees find critical information without breaking their workflow.

- Conversations flow naturally.

- Sales teams close deals in real time.

- Support resolves complex issues in single interactions.

Speed isn’t a nice-to-have — it’s the difference between AI that accelerates your business and AI that frustrates your users.

Built for Teams That Move Fast

Enterprise means teams — and teams need tools that work for everyone, from the CEO to the newest support agent.

CustomGPT.ai for Teams gives organizations the control, flexibility, and speed they need to scale:

- Add seats as you grow — from 5 to 500 and beyond.

- Chat-only roles for front-line reps without exposing backend configurations.

- Per-agent access controls so each department gets its own tailored agent.

- Custom SSO integration for seamless, secure authentication.

With Priority Queries active across every seat, your entire team now runs at maximum velocity — no lag, no waiting, no friction.

Bring All Your Data Into One Intelligent System

Your knowledge lives across SharePoint, Confluence, Google Drive, Zendesk, and Notion. Enterprise plans include auto-sync for all of them — connect once, and your agent stays up to date with every edit, ticket, and update.

PDFs With Proof

Enterprise customers get the PDF Viewer with Smart Highlighting. When your agent cites a PDF, the user clicks the citation and sees the exact passage highlighted, in context, in the original document. No hunting through pages. No Ctrl+F searching.

Seeing the source behind every answer builds trust and cuts support requests.

Total Control Over Your AI’s Brain

Every business has unique needs. Enterprise gives you the controls to tune your agent exactly how you need it:

- AI Model Selector – Switch between GPT-4.1, GPT-4o, Claude Opus, Claude Sonnet, and more.

- Extended Context Windows – Handle longer conversations and more complex queries without losing the thread.

- Extended History Windows – Your agent remembers more of the conversation, making multi-turn interactions feel natural.

- Extended Persona Length – Build detailed, nuanced personas that truly represent your brand voice and expertise.

- Extended End-User Prompt Length – Let users ask complex, detailed questions without hitting character limits.

And all of it runs on Priority Queries for instant responses no matter how complex your configuration.

Scale Without Limits

Modern enterprises need systems that grow as fast as they do. Enterprise plans scale with you:

- Uncapped queries – Metered but never blocked. Your AI never goes down during a traffic spike.

- Unlimited agents – One agent per product line? Ten agents for different departments? Build as many as you need.

- Massive storage limits – Extend word storage and document storage to handle your entire knowledge base.

- Increased processing capacity – Process millions of words per month. Ingest thousands of documents. Upload gigabytes of images.

- Custom API rate limits – Need 500 requests per minute? 1,000? We’ll configure it for your workload.
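
As a rough illustration of what a per-minute request limit means on the client side, here is a minimal token-bucket sketch in Python. The `TokenBucket` class and its parameters are hypothetical and are not part of the CustomGPT.ai API; the 500 figure simply echoes the example in the list above.

```python
import time
from typing import Callable


class TokenBucket:
    """Hypothetical client-side limiter (not a CustomGPT.ai API).

    Starts with a full bucket of `rate_per_minute` tokens, so short bursts
    are allowed, then refills continuously at rate_per_minute / 60 per second.
    """

    def __init__(self, rate_per_minute: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = float(rate_per_minute)
        self.refill_per_second = rate_per_minute / 60.0
        self.tokens = self.capacity
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        """Return True if a request may be sent now, False if it should wait."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Add the tokens earned since the last call, capped at the bucket size.
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.refill_per_second)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Assumed limit of 500 requests per minute, as in the example above.
bucket = TokenBucket(rate_per_minute=500)
```

Calling `bucket.try_acquire()` before each request and backing off briefly when it returns `False` keeps a client under whatever limit has been configured for the account.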

Your business grows. Your AI grows with it.

Enterprise Means Trust

Speed and scale mean nothing without reliability. That’s why our Enterprise offering includes:

- Full DPA coverage for compliance and security.

- Azure as AI Model Provider for customers who need a controlled environment. (Note: Priority Queries will be available for Azure customers once Microsoft adds Priority processing support. Azure customers can switch to OpenAI for immediate access.)

- Unlimited analytics history — because long-term insight matters.

- Dedicated support channels that truly prioritize your business.

Join the Community

See how other Enterprise customers are using Priority Queries and advanced features.

Join the CustomGPT.ai Slack Community to connect with teams pushing the boundaries of AI agents.

Frequently Asked Questions

Why Go Enterprise?

The enterprise plan offers enhanced customization, integration capabilities, dedicated support, and advanced security features. It’s tailored for organizations that need robust, scalable AI solutions with access to more resources and personalized configurations.

What are Priority Queries and how do they work?

Priority Queries use OpenAI’s Priority processing infrastructure to dramatically accelerate AI response times. When you ask a question, your request jumps to the front of the processing queue instead of waiting in line, and dedicated resources handle it immediately. Time-to-first-token drops from 6 seconds to 4 seconds, and average latency improves by up to 2 seconds across all interactions. Priority Queries work automatically for Enterprise customers using supported OpenAI models, with zero configuration required.
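
The idea of jumping the queue can be pictured with a small Python sketch. This is a conceptual illustration only: how OpenAI actually schedules Priority requests is not described here, and `RequestQueue`, `PRIORITY`, and `STANDARD` are invented names.

```python
import heapq
import itertools
from typing import List, Tuple

# Hypothetical tiers: the lower number is served first.
PRIORITY, STANDARD = 0, 1


class RequestQueue:
    """Toy two-tier queue: priority requests are dequeued before standard ones."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        # Monotonic counter keeps arrival order within the same tier.
        self._counter = itertools.count()

    def submit(self, request_id: str, tier: int = STANDARD) -> None:
        heapq.heappush(self._heap, (tier, next(self._counter), request_id))

    def next_request(self) -> str:
        """Pop the request that should be processed next."""
        return heapq.heappop(self._heap)[2]


queue = RequestQueue()
queue.submit("standard-1")
queue.submit("standard-2")
queue.submit("enterprise-1", tier=PRIORITY)  # arrives last, served first
order = [queue.next_request() for _ in range(3)]
```

In this toy model the priority request is served ahead of the two standard requests that arrived before it, while the standard requests keep their original order.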

Who can access Priority Queries right now?

All Enterprise plan customers using OpenAI as their model provider get Priority Queries automatically. Premium plan customers also get Priority Queries when they use the Fastest Response agent capability. Azure OpenAI customers cannot use Priority Queries yet because Microsoft hasn’t added Priority processing support to Azure infrastructure. Azure customers can switch to OpenAI as their provider for immediate access.

Do Priority Queries cost anything extra?

No. Priority Queries are included at zero additional cost for Enterprise customers. OpenAI charges premium fees for Priority processing but CustomGPT.ai absorbs all those fees. You get enterprise-grade speed without surprise charges, tier upgrades, or per-query fees. We believe speed should be standard, not premium.

What setup or configuration do Priority Queries require?

None. Priority Queries activate automatically when you use supported OpenAI models. There’s no settings toggle to flip, no API parameters to change, and no deployment modifications to make. If you’re an Enterprise customer using GPT-4o, GPT-4.1-mini, or other supported models, Priority Queries are already working across all your deployments.

Which AI models support Priority Queries?

Priority Queries work with GPT-4.1, GPT-4o, GPT-4.1-mini, and GPT-4o-mini. These are OpenAI models only. Claude models and other non-OpenAI providers don’t support Priority processing because this is OpenAI-specific infrastructure. We continuously evaluate performance features from all providers and will announce additional speed optimizations as they become available.

How much faster are responses with Priority Queries?

Priority Queries improve average latency by up to 2 seconds across all interactions. Time-to-first-token drops from 6 seconds to 4 seconds. When you combine Priority Queries with Fastest Response mode (available on Premium and Enterprise plans), you get sub-second time-to-first-word-streamed performance. This is our fastest possible configuration and feels instantaneous to users.
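
To check figures like these against your own deployment, you can time a streamed response yourself. The Python sketch below is a minimal, hypothetical helper: `measure_stream` and `fake_stream` are illustrative names, not CustomGPT.ai functions, and `fake_stream` merely simulates an agent that pauses before sending its first chunk.

```python
import time
from typing import Callable, Iterable, Iterator, List, Optional, Tuple


def measure_stream(
    chunks: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[float, float, str]:
    """Return (time_to_first_token, total_time, full_text) for a text stream."""
    start = clock()
    first: Optional[float] = None
    parts: List[str] = []
    for chunk in chunks:
        # Record the moment the first non-empty chunk arrives.
        if first is None and chunk:
            first = clock() - start
        parts.append(chunk)
    total = clock() - start
    if first is None:
        first = total  # the stream produced no text at all
    return first, total, "".join(parts)


def fake_stream(delay_before_first: float, chunks: List[str]) -> Iterator[str]:
    """Hypothetical stand-in for a streaming agent response."""
    time.sleep(delay_before_first)
    for chunk in chunks:
        yield chunk


ttft, total, text = measure_stream(fake_stream(0.05, ["Hello", ", ", "world"]))
print(f"time to first token: {ttft:.3f}s, total: {total:.3f}s, text: {text!r}")
```

Replacing `fake_stream` with the chunk iterator from a real streaming client gives the time to first token for that deployment; repeating the measurement over many queries gives a fairer picture than a single run.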

What makes Priority Queries different from Fastest Response mode?

Fastest Response mode is an agent capability that selects speed-optimized models (GPT-4.1-mini and GPT-4o-mini) and applies aggressive caching. Priority Queries are a processing tier that accelerates any supported OpenAI model by jumping the queue. They work differently and complement each other. When combined, Fastest Response plus Priority Queries delivers sub-second performance. You can use Priority Queries with any supported model, not just the speed-optimized ones.

Do Priority Queries work for API integrations and embedded widgets?

Yes. Priority Queries work seamlessly across all deployment methods including embedded website widgets, API integrations, live chat deployments, Slack channels, Microsoft Teams integrations, Chrome extensions, and mobile applications. No code changes or parameter adjustments are required. Once activated for your account, Priority Queries accelerate responses everywhere you’ve deployed CustomGPT.ai.

Can Azure OpenAI customers use Priority Queries?

Not yet. Priority Queries require OpenAI’s Priority processing infrastructure, which Microsoft hasn’t added to Azure OpenAI. We’ll enable Priority Queries for Azure customers automatically the moment Microsoft adds this capability. If you need Priority Queries now, you can switch from Azure OpenAI to OpenAI as your provider for immediate access to Priority processing.

Why does AI response speed matter so much for business?

Research from Google shows that every additional second of wait time increases user abandonment rates exponentially. When your AI makes users wait 5-6 seconds for responses, prospects move to faster competitors, support tickets escalate because customers lose patience, and employees stop using AI tools that can’t keep pace with their workflow. Fast responses keep users engaged, improve conversion rates, increase customer satisfaction, and drive higher adoption. Speed isn’t optional anymore. It’s the price of entry.

How does pricing work?

Pricing depends on factors such as usage volume, integration complexity, and the level of support and deployment required. Enterprise plans are customizable to match the scale and needs of your organization. These details are worked out during a joint evaluation process with a member of our team.

What kind of support is available for Enterprise customers?

Enterprise customers have access to dedicated support, including onboarding assistance, technical support, and ongoing AI optimization services. There are also resources like documentation, case studies, and live demos available.
